Nature Newsletter

December 16, 2021

Typically, decision makers draw on formal summaries of research evidence called systematic reviews, but during the pandemic these can become out of date almost immediately.

We need a ‘living evidence’ approach, argue the members of a task force that produces COVID-19 guidelines for clinicians in Australia.

They developed an approach to evidence synthesis that has now been adopted by the World Health Organization and by public-health bodies in Canada and the United Kingdom.

Originally published in Nature:

Decision makers need constantly updated evidence synthesis

Fund and use ‘living’ reviews of the latest data to steer research, practice and policy.

Nature

Julian Elliott, Rebecca Lawrence, Jan C. Minx, Olufemi T. Oladapo, Philippe Ravaud, Britta Tendal Jeppesen, James Thomas, Tari Turner, Per Olav Vandvik & Jeremy M. Grimshaw

December 15, 2021

As the world began to respond to the COVID-19 pandemic last year, there was an explosion of guidelines, position statements and protocols, many of them low quality and contradictory. In March 2020, several of us approached the Australian National Health and Medical Research Council, worried that the cacophony would create confusion and anxiety among already-stressed clinicians. We argued for key bodies to come together quickly and use robust, evidence-based processes to find signals in the noisy flow of COVID-19 research. Two weeks later, we had formed a task force and produced the first version of national, evidence-based COVID-19 guidelines for Australia. We committed to updating the guidelines every week, something that had never been done before. Our challenge was to work out how.

Typically, national guidelines draw on formal summaries of research evidence called systematic reviews, but the pandemic ‘broke the evidence pipeline’.

Take the example of remdesivir, an intravenous treatment originally developed for Ebola virus. In May 2020, weak but promising data suggested it could be used to treat COVID-19. Over the next 18 months, 52 papers from 14 randomized trials were published. Clinicians and policymakers had to make decisions on the basis of this shifting, and often contradictory, body of evidence. To help them, scholars produced systematic reviews — 30 in 2020. Many were out of date before publication because they left out recently published primary studies; most of the rest became out of date within weeks.

Out-of-date systematic reviews are common any time there is a flood of new research. In the absence of up-to-date summaries of accumulating knowledge, decision makers’ attention often jumps from study to study. This muddles policymaking, fuels controversy and erodes trust in science.

A better system would keep summaries of research evidence up to date. That’s the system we built for the Australian National COVID‑19 Clinical Evidence Taskforce, for the World Health Organization’s (WHO’s) Therapeutics and COVID-19: living guideline, and for the COVID-NMA repository of COVID-19 research. The system can be applied beyond COVID-19, and indeed beyond health. Here’s what that will take.

Evidence building

The process of identifying and combining data across studies to create a clear understanding of a body of research is known as evidence synthesis. The resulting publications are generally called systematic reviews, and often include a meta-analysis. Since the 1980s, the practice of evidence synthesis has grown to become the foundation for high-impact decision-making in disease prevention, diagnosis and treatment, and in other aspects of health. Evidence synthesis also helps to tackle questions in education, economics, environment, criminal justice, global development and more. The number of published academic systematic reviews has risen from around 6,000 in 2011 to more than 45,000 in 2021.

University departments, international bodies such as Cochrane (a leading producer of systematic reviews on health topics), and numerous conferences and journals have established scientific methods, conventions and production systems for evidence synthesis. The approach is now routine across many national and global decision-making bodies responsible for policy and practice guidance. For example, rather than relying on single studies, drug regulators and insurers worldwide use syntheses of all relevant studies to evaluate safety and effectiveness and decide whether to approve or pay for a drug.

Evidence synthesis can be applied to the most pressing global challenges: climate change, energy transitions, biodiversity loss, antimicrobial resistance, poverty eradication and so on. But in practice, synthesis projects are often under-resourced, of poor quality, uncoordinated, duplicative and out of date.

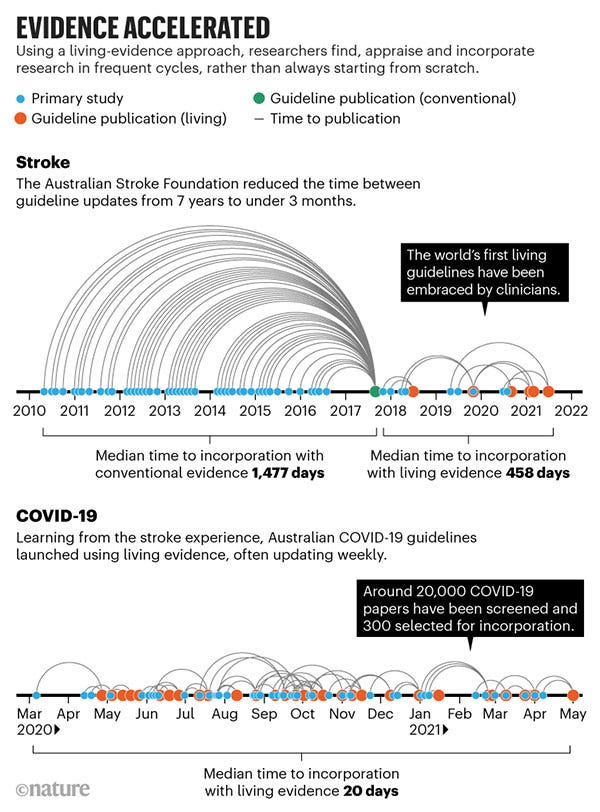

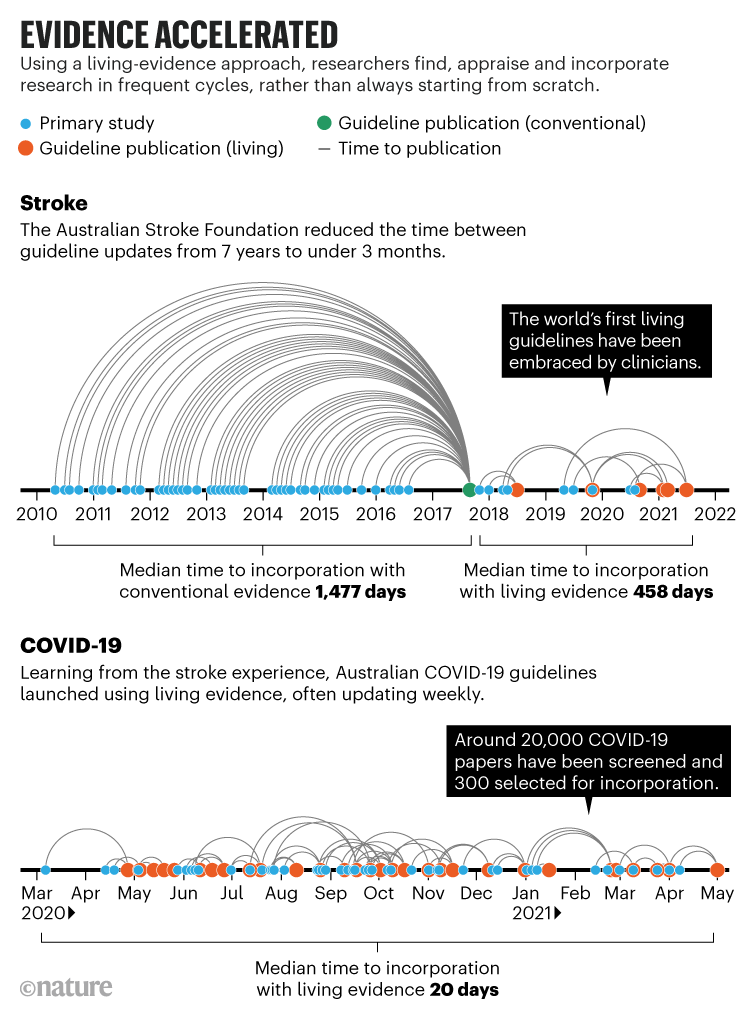

‘Living evidence’ is an approach to synthesis that we and others have developed to address these problems. It produces ‘ready-to-go’ evidence summaries that better serve the needs of decision makers because they are both rigorous (all relevant research has been carefully appraised) and up to date. Living evidence is particularly important in topics for which research evidence is emerging rapidly, current evidence is uncertain and new research might change policy or practice. For example, the Australian national guidelines for treating people who have had a stroke are now updated every 3–4 months instead of every 5–7 years (see ‘Evidence accelerated’). Clinicians can consult the most up-to-date evidence, and this has led to greater trust in, and use of, the guidelines.

Researchers practising living evidence establish protocols that commit to regular updates and continuously monitor bibliographic and other databases to identify new research. These projects are often enabled by technologies such as natural-language processing, machine learning and crowdsourcing and, sometimes, by FAIR data sharing (which ensures the findability, accessibility, interoperability and reuse of digital assets). Researchers rapidly incorporate studies in frequent cycles that follow established methods for high-quality synthesis and guidelines (for instance, predefined protocols and careful appraisal of the research included).
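The update cycle described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' system: it assumes each database search returns records with a stable identifier, and it uses a hypothetical `is_eligible` function as a placeholder for screening against the protocol's predefined inclusion criteria (in practice done by trained reviewers, often assisted by machine learning or crowdsourcing). The `Record` and `LivingReview` names are likewise illustrative.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List, Set


@dataclass(frozen=True)
class Record:
    """A search result; record_id is assumed to be stable across searches."""
    record_id: str
    title: str
    abstract: str


@dataclass
class LivingReview:
    """Hypothetical state a living review carries from one cycle to the next."""
    # Placeholder for screening against the protocol's predefined criteria.
    is_eligible: Callable[[Record], bool]
    seen_ids: Set[str] = field(default_factory=set)
    included: List[Record] = field(default_factory=list)

    def run_cycle(self, new_records: Iterable[Record]) -> List[Record]:
        """One update cycle: skip records screened in earlier cycles, screen
        the rest, and return the newly included studies (empty list = no
        change to the evidence base this cycle)."""
        newly_included = []
        for rec in new_records:
            if rec.record_id in self.seen_ids:
                continue  # already screened in an earlier search or database
            self.seen_ids.add(rec.record_id)
            if self.is_eligible(rec):
                newly_included.append(rec)
        self.included.extend(newly_included)
        return newly_included
```

For example, with a crude keyword rule standing in for real screening, `LivingReview(is_eligible=lambda r: "randomized" in r.abstract.lower())` would include a new randomized trial in the first cycle and skip it when the same record reappears in the next search. The point of the sketch is that each cycle only appraises what is new, which is what makes frequent updating affordable.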

Even with a deluge of new research, it is feasible to update syntheses and treatment guidelines frequently. For COVID-19, we made update intervals shorter than ever, updating some living guidelines weekly (see ‘Evidence accelerated’). Frequent updating assured policymakers and the public that all relevant research had been taken into account when recommending against the use of the drug hydroxychloroquine, for example. And the COVID-END collaboration used living evidence to keep Canada’s public-health agency updated on COVID‑19 vaccine effectiveness as variants of concern emerged.

This year, the UK National Institute for Health and Care Excellence (NICE) announced that living guidelines will be a pillar of its improvement strategy over the next five years. After several years of pilots, the WHO also began promoting living systematic reviews and living guidelines as standard methodology in 2021. It has used the approach for COVID-19, maternal and perinatal care and contraception. In the academic literature, about 100 living systematic reviews have been published since the start of 2020, compared with perhaps 15 in the previous four years.

Many global challenges can benefit from living evidence. Consider climate policy. The Intergovernmental Panel on Climate Change (IPCC) has been very effective in synthesizing evidence on the anthropogenic causes of climate change, its impacts and the potential effects of long-term emission-reduction strategies. But there is no comparable culture of evidence synthesis on the effectiveness of climate-change mitigation or adaptation. Living evidence could help to firmly establish this capacity to ensure that the thousands of decisions needed to achieve net-zero emissions are supported by reliable and up-to-date scientific evidence. There is real urgency for funders and policymakers to incentivize this cultural shift.

Stakeholders and end-users should be involved in both the design and production of living evidence. Depending on the topic, this might mean including the communities most likely to be affected by extreme weather, by vocational training programmes or by a national disease-treatment guideline. For example, early in the pandemic, the COVID‑19 Core Outcome Set initiative identified the most important COVID-19 outcomes for patients and clinicians from around the world. This was used to guide how living-guidelines panels judged the potential benefits of new treatments.

Living evidence is particularly relevant for research funders. To direct resources to important questions, funders must identify current knowledge gaps. Living evidence reveals these gaps by mapping published and ongoing research and by keeping up to date with new research. Investments in living evidence can therefore benefit society twice — directly by funding synthesis efforts, and indirectly by enhancing research investments. Funders should invest in systems, tools and partnerships that will build overall capacities beyond any specific project.

Four steps

How best can living evidence expand? Four considerations are key.

Reduce unit costs. Cutting the time and effort required for systematic review is crucial to the scale-up of living evidence. At present, an initial systematic review typically requires more than 200 person-hours of manual work to sift through titles and abstracts of potentially relevant studies. Many of us are developing technologies to make workflows more efficient and cost-effective. These include natural-language processing and machine learning, crowdsourcing, FAIR data and non-profit software tools, including Covidence and EPPI-Reviewer for systematic review, and MAGICapp for guideline development (J.E. and P.O.V. are chief executives at Covidence and MAGICapp, respectively). Together, these technologies could cut the time required by two-thirds. The more others get involved, the better and faster these tools will get. Publications should include better metadata to improve discovery, and should follow FAIR practices to provide open and machine-readable research data.

Prevent research waste. As systematic reviews became widely recognized as a form of publication, there was an increase in wasteful, duplicated, low-quality reviews. Some researchers and journals pursue ‘quick’ systematic reviews as a way to boost their publication and citation counts. As the remdesivir example shows, systematic reviews summarizing a body of randomized trials can outnumber the relevant trials themselves.

Funders could help by focusing on a set of high-quality living systematic reviews and guidelines. Another approach could be to establish larger collaborative projects, analogous to global multisite clinical trials or ‘big science’ collaborations in physics, as a mechanism to reduce redundancy.

Optimize publishing. Publishers such as F1000, Cochrane, The BMJ and Annals of Internal Medicine have shown that academic publishers can support frequent updating of living systematic reviews and guidelines with multiple updates of essentially the same document. Most updates are minor; only occasionally is one major. Workflows were modified so that editorial and peer review, as well as copyediting and production, supported frequent revisions. This required differentiating between small and large alterations and ensuring links between versions. Minor updates can be incorporated in the original publication, or as an addendum. When authors and editors judge that changes to a paper warrant a major update, this can trigger a new article version (linked to previous versions through Crossref and other links on the journal website) and a new bibliographic database listing and digital object identifier (DOI).
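The minor-versus-major rule described above can be sketched as a small data model. This is a hypothetical illustration, not any publisher's actual system: it assumes editors supply the minor/major judgement as a flag, and the class names, fields and `.vN` identifier scheme are invented stand-ins. Real DOIs are registered with a registration agency such as Crossref rather than derived locally like this.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Version:
    number: int
    identifier: str  # hypothetical stand-in for a DOI
    addenda: List[str] = field(default_factory=list)  # minor updates
    previous: Optional["Version"] = None  # link back to the prior version


@dataclass
class LivingArticle:
    base_id: str  # hypothetical base identifier for the article
    versions: List[Version] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Every living article starts with a first published version.
        self.versions.append(Version(1, f"{self.base_id}.v1"))

    @property
    def current(self) -> Version:
        return self.versions[-1]

    def update(self, summary: str, major: bool) -> Version:
        """Record an update. `major` reflects the authors' and editors'
        judgement; it is not decided automatically here."""
        if not major:
            # Minor update: amend the current version as an addendum.
            self.current.addenda.append(summary)
            return self.current
        # Major update: new version with its own identifier, linked to the last.
        n = self.current.number + 1
        new = Version(n, f"{self.base_id}.v{n}", previous=self.current)
        self.versions.append(new)
        return new
```

Keeping an explicit `previous` link on each version mirrors the requirement that versions stay connected, so a reader arriving at any version can trace the history of the synthesis.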

The research community must rethink what the ‘version of record’ means and how to optimize the incentives and rewards for authors and peer reviewers of frequently updated systematic reviews. Peer review of these continuously updated documents should be neither slow and onerous nor a quick rubber stamp. The underlying data from living systematic reviews and guidelines should be FAIR, to support replicability and transparency and maximize reuse.

Facilitate implementation. Living evidence has the potential to speed up the incorporation of science into practice and policy. Decisions do not depend solely on evidence, however, and changes in practice generally lag behind changes in guidance. Research on implementation is therefore essential to understand how best living evidence can serve policy and practice. So far, experience with implementation has been limited to fewer than a dozen case studies such as those mentioned here.

Issues that need to be investigated include: the impact of new models of dissemination (for example, continuous ‘what’s new’ feeds rather than conventional, intermittent announcements); when changes in evidence should trigger implementation; and whether a supply of reliable, current evidence affects how it is incorporated into decisions. In many instances, implementation activities will not need to change, and living evidence will give them a head start.

Living-evidence outputs should also be set up to integrate with data systems. For example, health-care recommendations in living guidelines could flow into clinical tools to support physicians’ decisions. Living systematic reviews of low-carbon technologies could update climate-change-mitigation models.

Keep moving

Decisions relevant to global challenges must be informed by the best available evidence. It should no longer be acceptable for evidence to be out of date, biased or selective. Without trustworthy and up-to-date summaries, the world risks making ill-informed decisions and wasting investment.

Living evidence as a practice has been around for barely five years, and there is much to learn. Our experience so far runs counter to common initial concerns. Rather than being confused by changing guidelines, clinicians value a resource they know is up to date. Interest from living-evidence practitioners can be sustained, and team membership can gradually shift without interrupting updates. The cost of continuously updating guidelines seems to be about the same as that of gearing up for big revisions every few years.

The advances made in evidence systems during the COVID-19 pandemic should extend beyond health. We call on researchers in all scientific fields, and their funders, to test the living-evidence model across diverse domains. Trialling the approach with different types of evidence, for a wide range of decision makers, will contribute to the advancement and scale-up of the model. Science does not stand still; neither should its synthesis and translation into action.

About the authors & affiliations

Julian Elliott directs the Australian Living Evidence Consortium, based at Cochrane Australia, Monash University, Melbourne, Australia, and is chief executive at Covidence in Melbourne.

Rebecca Lawrence, Jan C. Minx, Olufemi T. Oladapo, Philippe Ravaud, Britta Tendal Jeppesen, James Thomas, Tari Turner, Per Olav Vandvik, Jeremy M. Grimshaw.

Originally published at https://www.nature.com.