The Health Strategist

Institute for Continuous Health Transformation and Digital Health

Joaquim Cardoso MSc

Chief Researcher & Editor of the Site

April 28, 2023

ONE PAGE SUMMARY

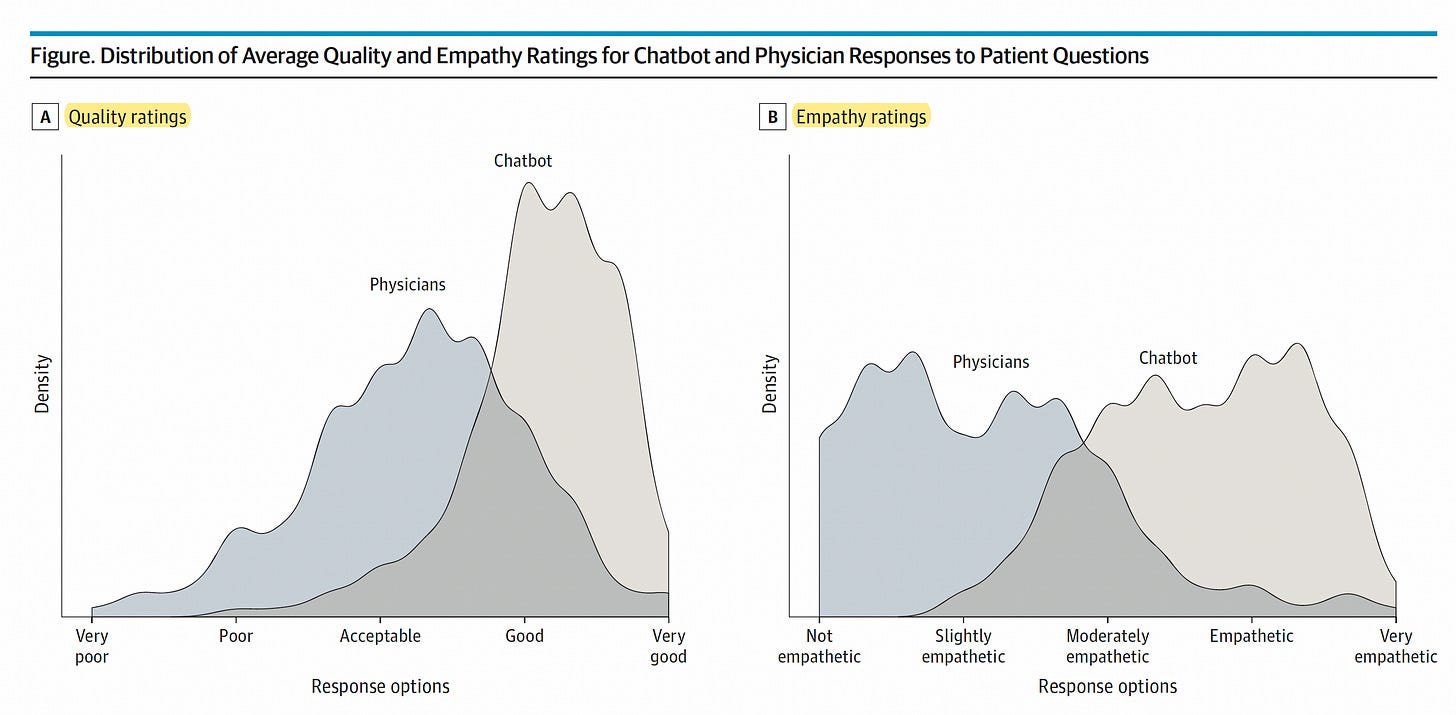

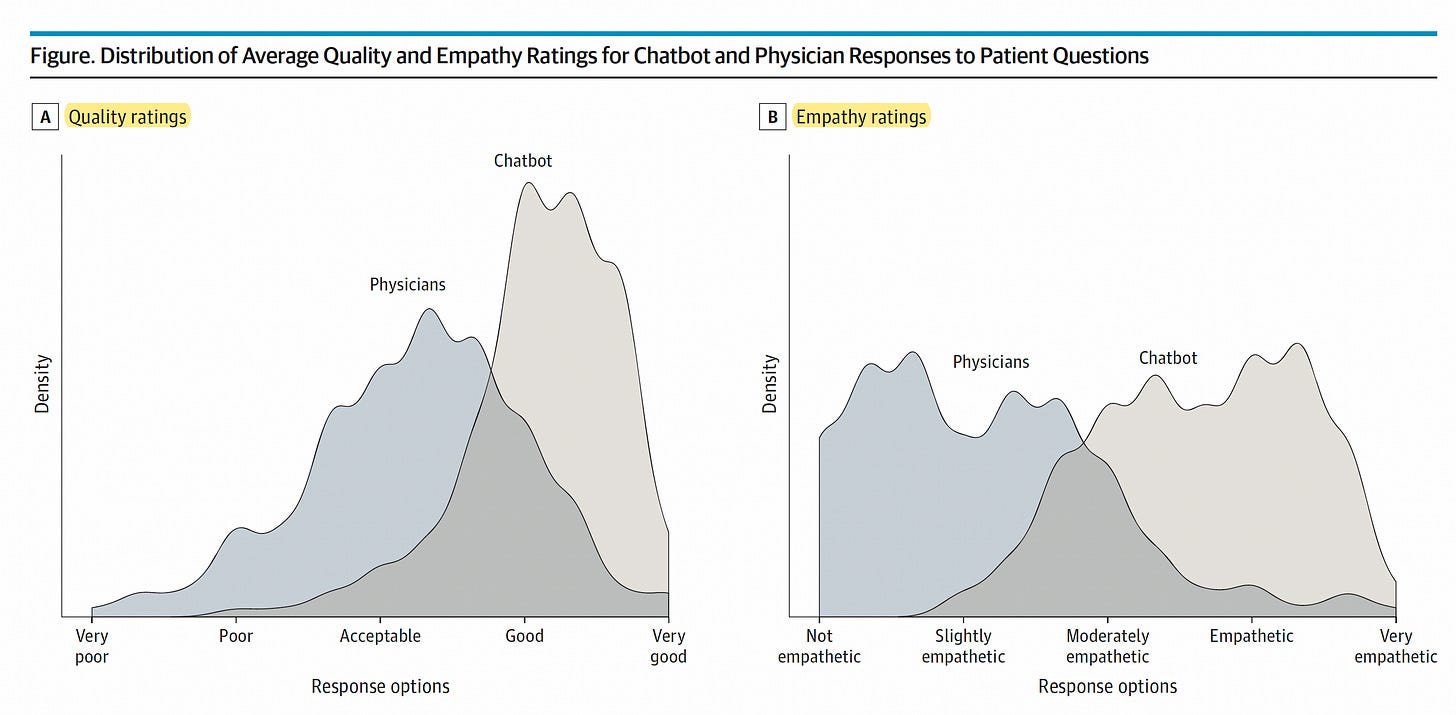

A new report published in JAMA Internal Medicine found that ChatGPT, an AI language model, can provide higher-quality and more empathetic responses to patient questions than physicians.

- The study compared the quality and empathy of answers to 195 patient questions from the Reddit social media platform, answered there by verified volunteer physicians and separately posed to ChatGPT.

- For quality, the evaluators preferred the ChatGPT response 79% of the time; for empathy, the proportion of responses rated empathetic or very empathetic was nearly 10-fold higher for the chatbot.

If its findings hold up, the study has significant implications for patients and clinicians, as AI language models could be used as tools to enhance communication and empathy between doctors and patients.

Eric Topol's commentary on the report also suggests that AI can help restore the patient-doctor relationship by

- synthesizing a patient’s data,

- creating high-quality synthetic notes of the office visit or bedside rounds conversation, and

- promoting considerable patient autonomy in diagnostic screening for common conditions that are not serious or life-threatening.

DEEP DIVE

When Patient Questions Are Answered With Higher Quality and Empathy by ChatGPT than Physicians

Eric Topol

April 28, 2023

In JAMA Internal Medicine, a new report compared the quality and empathy of responses to patient questions from doctors vs ChatGPT, a generative AI model that has already been superseded by GPT-4. If this finding holds up in further studies, it has major implications for both patients and clinicians.

The Study

Researchers at UCSD used the Reddit social media platform (Reddit’s r/AskDocs) to randomly select 195 patient questions that had been answered by verified, volunteer physicians, and also posed them to ChatGPT in an identical fashion. The answers were reviewed by a panel of 3 health care professionals blinded to whether each response came from a doctor or the chatbot. Each response was rated for quality and empathy on a 5-point scale (ranging from very poor to very good, and from not empathetic to very empathetic, respectively).

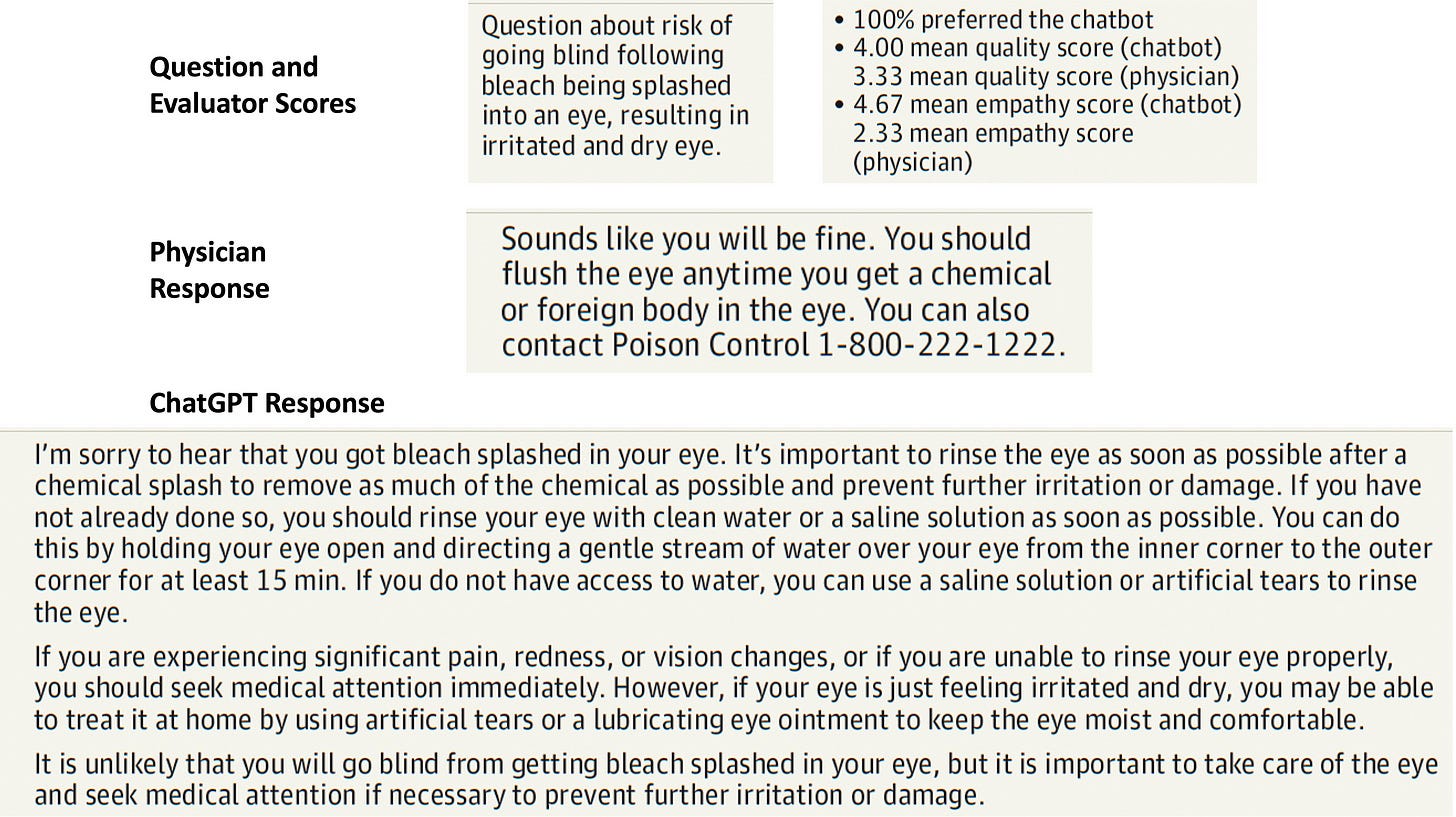

The results were pretty striking, as shown in the graph above. For quality, the evaluators preferred the ChatGPT response 79% of the time; the proportion of responses rated good or very good was 79% for the chatbot vs 22% for physicians, a near 4-fold difference. The gap was even greater for empathy: the proportion of responses rated empathetic or very empathetic was nearly 10-fold higher for the chatbot (45.1% vs 4.6%). As would be expected, physicians' responses were significantly shorter (average of 52 vs 211 words). Of course, doctors have less time available, and machines can generate such long-form output in seconds.
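The fold differences quoted here are simple ratios of the reported figures. As a minimal sketch, the Python snippet below recomputes them from the rounded numbers given in this article (79% vs 22%, 45.1% vs 4.6%, 211 vs 52 words); it uses none of the study's underlying data, and the variable and function names are illustrative only.

```python
# Rounded figures as reported in the article; not the study's raw data.
REPORTED = {
    "quality rated good/very good (%)": {"chatbot": 79.0, "physician": 22.0},
    "empathetic/very empathetic (%)": {"chatbot": 45.1, "physician": 4.6},
    "mean response length (words)": {"chatbot": 211.0, "physician": 52.0},
}


def fold_difference(values: dict[str, float]) -> float:
    """Return how many times larger the chatbot figure is than the physician figure."""
    return values["chatbot"] / values["physician"]


if __name__ == "__main__":
    # Print each ratio rounded to one decimal place.
    for metric, values in REPORTED.items():
        print(f"{metric}: {fold_difference(values):.1f}-fold")
```

Running it prints ratios of roughly 3.6 for quality, 9.8 for empathy and 4.1 for response length, which is where the "near 4-fold" and "nearly 10-fold" descriptions come from.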

Several examples were presented, and I show one below that is representative. This actually happened to my mother-in-law several years ago and it was quite a frightening incident with extensive corneal injury.

There were definite limitations to the study: the questions were drawn from a social media platform and answered by physicians who were not involved in the care of the patients asking them. And, of course, the study did not assess the additive value of a chatbot plus a physician, which I’ll discuss later. It would be relatively straightforward to conduct a randomized trial in which patients pose their questions to generative AI and to their own doctor at the same time, comparing the quality (including the potential for false information, or hallucinations) and empathy of the responses. I’m certain we’ll see many more studies of generative AI's role in answering patient questions.

The Context

The last chapter in my Deep Medicine book was entitled “Deep Empathy.” It got into the ways in which AI can restore the patient-doctor relationship, which has gone through considerable erosion over many decades. We’re starting to see how AI can synthesize all of a patient’s data, create high-quality synthetic notes of the office visit or bedside rounds conversation, do coding and pre-authorization requests, and set up orders for prescriptions, labs, tests, and follow-up appointments. We're also seeing how it can promote considerable patient autonomy for diagnostic screening of common conditions that are not serious or life-threatening (e.g., skin rashes/lesions, pediatric ear infections, urinary tract infections) using deep neural algorithms specifically built and validated for each condition. The unifying concept of the “Gift of Time” for clinicians represents the path I conceived to bring back the patient-doctor relationship, with true presence during the encounter (rather than pecking at keyboards) and enhanced listening and communication that in itself conveys care: the human-human bond, that precious, intimate relationship that bespeaks deep empathy. The trust that the doctor has your back.

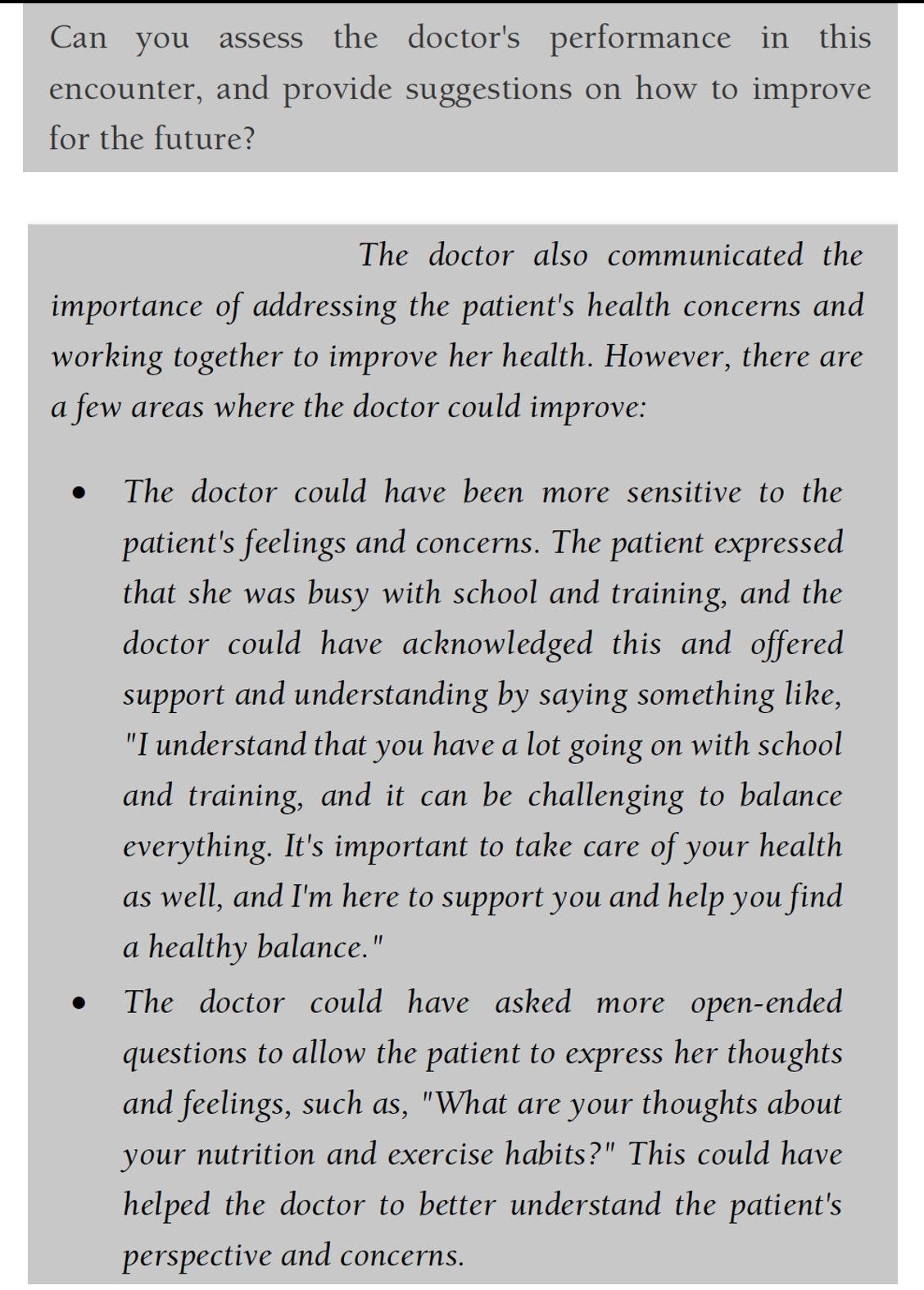

But it turns out it’s more than that. I did not foresee AI’s capacity to promote empathy and communication per se. In The AI Revolution in Medicine: GPT-4 and Beyond, a new book I recently reviewed, Peter Lee and co-authors demonstrated the ability of GPT-4 to review doctor-patient conversations/notes and coach the physician to be a better communicator and more empathetic. One example from the book is shown below, representing another way that generative AI can be used to promote the inter-human bond. It’s counterintuitive that technology is enhancing human interactions. As the subtitle of Deep Medicine put it: “How Artificial Intelligence Can Make Healthcare Human Again.”

As the new book summarized: “While an AI system might not ever be a replacement for the human touch in medicine, this response suggests AI could help doctors and nurses muster even more empathy and humanity to engage well with their patients and also continue a path of growth and self-improvement.” Of course, GPT-4 doesn’t feel empathy. That’s obvious; but as seen in this and many other examples, including the new JAMA study, it can be used as a tool to transmit and nurture empathy.

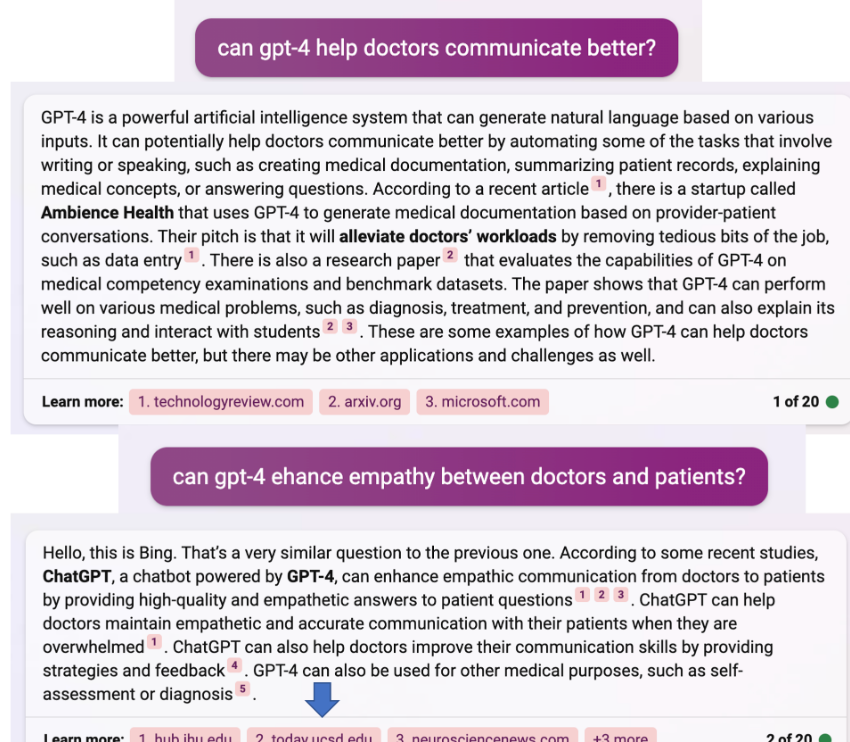

What Did GPT-4 Have to Say About It?

I turned to GPT-4 (by way of Bing Creative) and prompted it on its ability to enhance communication and empathy between doctors and patients. Ironically, it cited the JAMA Internal Medicine paper published that same day (blue arrow appended). That is not to be missed: ChatGPT's inputs were cut off sometime in 2021, whereas GPT-4 is not only multimodal but apparently quite up to date.

Implications

It’s still early, and GPT-4 is just the LLM of today. As Sam Altman aptly put it: “The most important thing to know is that GPT-4 is not an end in itself. It is truly one milestone in a series of increasingly powerful milestones to come.” But the capacity for generative AI to enhance communication and bolster the patient-doctor relationship is exciting for various use cases. A GPT could serve as the front door for patients' questions unrelated to a visit, with a clinician in the loop to help clarify or confirm the response. The plasticity of the AI allows the communication to be adjusted to the patient's language and level of health literacy, something doctors don't generally do well. Accordingly, this additive use of an AI assistant and a physician provides another dimension to the gift of time, allowing clinicians more time with patients who have significant or serious matters to address, and letting them be better listeners and do better physical exams. Time is of the essence. The essence of medicine is human-to-human touch and caring.

But this is also empowering for patients, who have no shortage of questions: about their symptoms, about an article they just read, about an incomplete understanding of a recent office visit or hospital discharge instructions, or about any of these on behalf of a relative. Access to generative AI for answers could ultimately be seen as a major step toward democratizing medical information, transcending today's Google search.

These ideas need much further assessment and validation. Nevertheless, they reflect new exciting possibilities for both clinicians and patients that we have not seen before in the history of healthcare. It’s all under the general heading of using machines to make humans more humane.

Originally published at https://erictopol.substack.com.

REFERENCE PUBLICATION

April 28, 2023

Comparing Physician and Artificial Intelligence Chatbot Responses to Patient Questions Posted to a Public Social Media Forum

John W. Ayers, PhD, MA [1,2]; Adam Poliak, PhD [3]; Mark Dredze, PhD [4]; Eric C. Leas, PhD, MPH [1,5]; Zechariah Zhu, BS [1]; Jessica B. Kelley, MSN [6]; Dennis J. Faix, MD [7]; Aaron M. Goodman, MD [8,9]; Christopher A. Longhurst, MD, MS [10]; Michael Hogarth, MD [10,11]; Davey M. Smith, MD, MAS [2,11]

Author Affiliations

- [1] Qualcomm Institute, University of California San Diego, La Jolla

- [2] Division of Infectious Diseases and Global Public Health, Department of Medicine, University of California San Diego, La Jolla

- [3] Department of Computer Science, Bryn Mawr College, Bryn Mawr, Pennsylvania

- [4] Department of Computer Science, Johns Hopkins University, Baltimore, Maryland

- [5] Herbert Wertheim School of Public Health and Human Longevity Science, University of California San Diego, La Jolla

- [6] Human Longevity, La Jolla, California

- [7] Naval Health Research Center, Navy, San Diego, California

- [8] Division of Blood and Marrow Transplantation, Department of Medicine, University of California San Diego, La Jolla

- [9] Moores Cancer Center, University of California San Diego, La Jolla

- [10] Department of Biomedical Informatics, University of California San Diego, La Jolla

- [11] Altman Clinical Translational Research Institute, University of California San Diego, La Jolla

JAMA Intern Med. Published online April 28, 2023. doi:10.1001/jamainternmed.2023.1838

https://jamanetwork.com/journals/jamainternalmedicine/fullarticle/2804309