Health Systems-Transformation Institute (HSTI)

research institute and knowledge portal

Joaquim Cardoso MSc

Chief Researcher, Editor and Strategy Officer

November 15, 2022

Source: European Heart Journal

Executive Summary:

- Providing therapies tailored to each patient is the vision of precision medicine, enabled by the increasing ability to capture extensive data about individual patients.

- In this position paper, we argue that the second enabling pillar towards this vision is the increasing power of computers and algorithms to learn, reason, and build the ‘digital twin’ of a patient.

- Computational models are boosting the capacity to make diagnoses and prognoses, and future treatments will be tailored not only to current health status and data, but also to accurate, model-based projections of the pathways to restore health.

- The early steps of the digital twin in the area of cardiovascular medicine are reviewed in this article, together with a discussion of the challenges and opportunities ahead.

- We emphasize the synergies between mechanistic and statistical models in accelerating cardiovascular research and enabling the vision of precision medicine.

- This position paper claims that precision cardiology will be delivered in a synergetic fashion that combines induction, by using statistical models learnt from data, and deduction, through mechanistic modelling and simulation integrating multiscale knowledge and data.

Conclusion:

- Precision cardiology will be delivered, not only by data, but also by the inductive and deductive reasoning built in the digital twin of each patient.

- Treatment and prevention of cardiovascular disease will be based on accurate predictions of both the underlying causes of disease and the pathways to sustain or restore health.

- These predictions will be provided and validated by the synergistic interplay between mechanistic and statistical models.

- The early steps towards this vision have been taken, and the next ones depend on the coordinated drive from scientific, clinical, industrial, and regulatory stakeholders in order to build the evidence and tackle the organizational and societal challenges ahead.

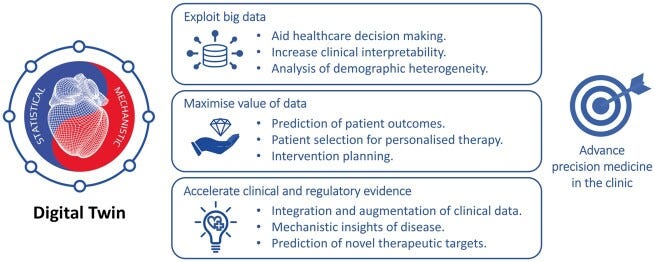

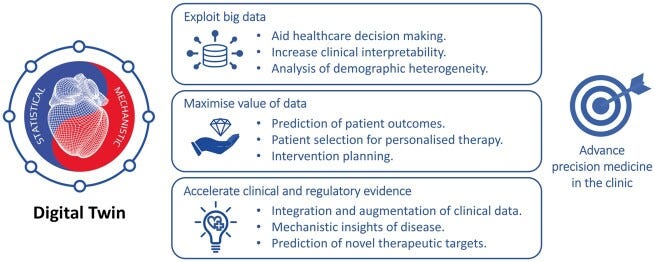

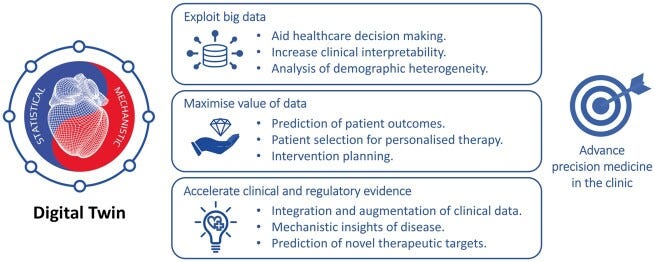

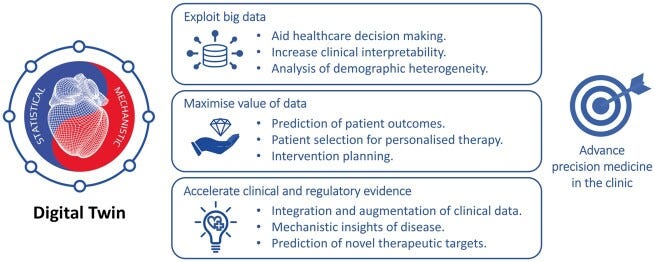

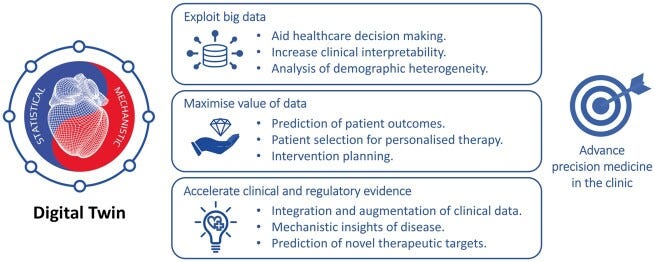

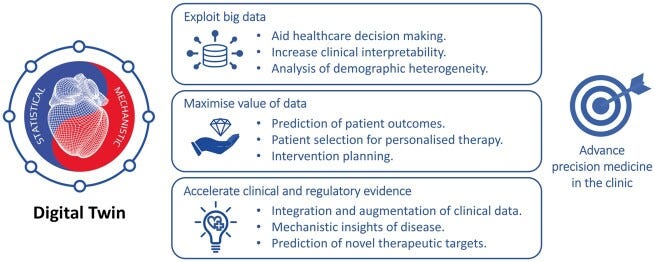

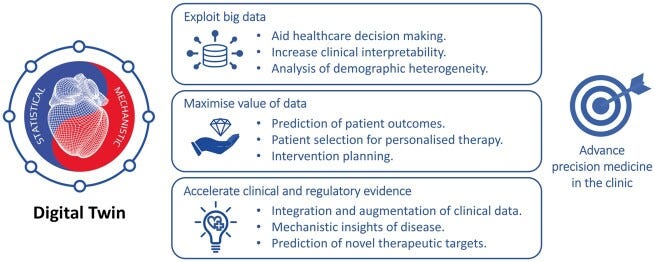

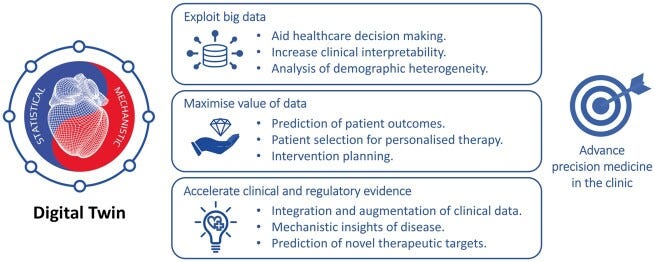

Figure 2 — Conceptual summary of the main benefits of digital twin technologies.

Keywords: Precision medicine, Digital twin, Computational modelling, Artificial intelligence

ORIGINAL PUBLICATION (full version)

The ‘Digital Twin’ to enable the vision of precision cardiology

European Heart Journal

Jorge Corral-Acero, Francesca Margara, Maciej Marciniak, Cristobal Rodero, Filip Loncaric, Yingjing Feng, Andrew Gilbert, Joao F Fernandes, Hassaan A Bukhari, Ali Wajdan, Manuel Villegas Martinez, Mariana Sousa Santos, Mehrdad Shamohammdi, Hongxing Luo, Philip Westphal, Paul Leeson, Paolo DiAchille, Viatcheslav Gurev, Manuel Mayr, Liesbet Geris, Pras Pathmanathan, Tina Morrison, Richard Cornelussen, Frits Prinzen, Tammo Delhaas, Ada Doltra, Marta Sitges, Edward J Vigmond, Ernesto Zacur, Vicente Grau, Blanca Rodriguez, Espen W Remme, Steven Niederer, Peter Mortier, Kristin McLeod, Mark Potse, Esther Pueyo, Alfonso Bueno-Orovio, and Pablo Lamata

2020 Mar 4

Introduction

Providing therapies that are tailored to each patient, and that maximize the efficacy and efficiency of our healthcare system, is the broad goal of precision medicine.

The main shift from current clinical practice is to take inter-individual variability into greater account. This exciting vision has been championed by the -omics revolution, i.e., the increasing ability to capture extensive data about the pathophysiology of the patient. 1,2 This -omics approach has already delivered great achievements, especially in the management of specific cancer conditions. 3 Nevertheless, the initial conception of precision medicine has already been criticized for being too centred on genomics and for failing to address the challenges of clinical management. 4 The concept is thus gradually widening, shifting from the original gene-centric perspective to the wide spectrum of lifestyle, environment, and biology data. 5,6

In this context, we argue that the definition of optimal therapy options requires a mechanistic understanding that links all levels from genetic and molecular traces to the pathophysiology, lifestyle and environment of the patient, and back.

Precision medicine requires not only better and more detailed data, but also the increasing ability of computers to analyse, integrate, and exploit these data, and to construct the ‘digital twin’ of a patient. In health care, the ‘digital twin’ denotes the vision of a comprehensive, virtual tool that coherently and dynamically integrates the clinical data acquired over time for an individual using mechanistic and statistical models. 7 This borrows, but expands, the concept of ‘digital twin’ used in engineering industries, where in silico representations of a physical system, such as an engine or a wind farm, are used to optimize design or control processes, with a real-time connection between the physical system and the model. 8

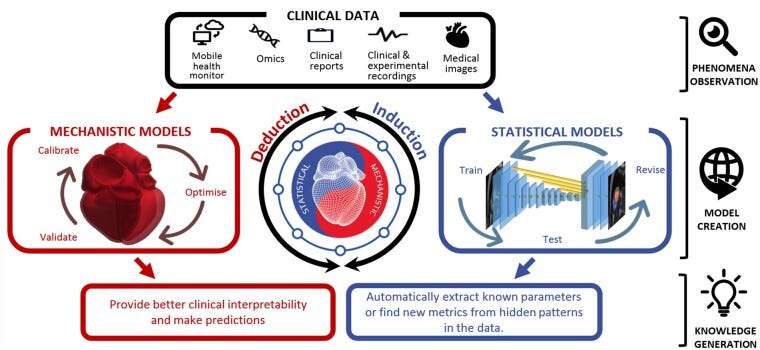

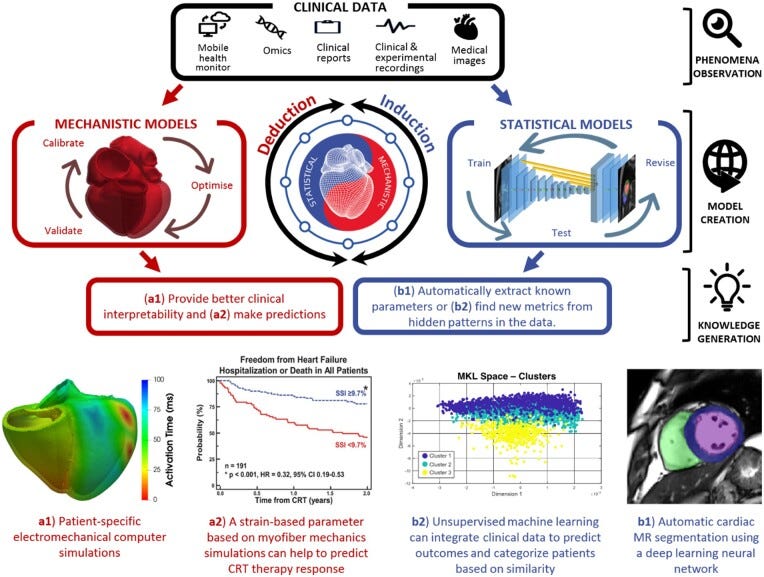

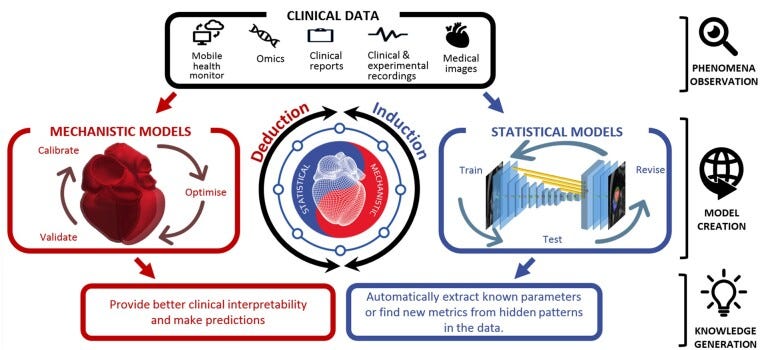

This position paper claims that precision cardiology will be delivered in a synergetic fashion that combines induction, by using statistical models learnt from data, and deduction, through mechanistic modelling and simulation integrating multiscale knowledge and data. 9

These are the two pillars of the digital twin (Figure 1). Because excellent independent reviews already exist for statistical 14–16 and mechanistic 17,18 models in cardiovascular medicine, we focus here on the state of the art of the interplay between such models that supports this vision.

Figure 1 — The two pillars of the digital twin, mechanistic and statistical models, illustrating its construction and four examples of use: a1, 10 a2, 11 b1, 12 and b2. 13

Mechanistic models encapsulate our knowledge of physiology and the fundamental laws of physics and chemistry.

They provide a framework to integrate and augment experimental and clinical data, enabling the identification of mechanisms and/or the prediction of outcomes, even under unseen scenarios without the need for retraining. 19 Examples of such mechanistic models are the bidomain equations for cardiac electrophysiology 20 or the Navier-Stokes equations for coronary blood flow. 21 In a complementary manner, statistical models encapsulate the knowledge and relations induced from the data. They allow the extraction and optimal combination of individualized biomarkers with mathematical rules. Examples of statistical models applied to computational cardiology are random forests for assessment of heart failure severity 22 or Gaussian processes to capture heart rate variability. 23
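The bidomain equations mentioned above illustrate what encapsulating physiology and physics means in practice. One common parabolic-elliptic form is given below, neglecting applied stimulus currents; the notation is ours rather than the paper's, and sign conventions vary between references. Here $V_m = \phi_i - \phi_e$ is the transmembrane potential, $\phi_i$ and $\phi_e$ are the intracellular and extracellular potentials, $\sigma_i$ and $\sigma_e$ the corresponding conductivity tensors, $\chi$ the membrane surface-to-volume ratio, $C_m$ the membrane capacitance, and $I_{\mathrm{ion}}(V_m, \mathbf{w})$ the ionic current of a cell model with state variables $\mathbf{w}$.

```latex
\begin{aligned}
\nabla\cdot\left(\sigma_i \nabla V_m\right) + \nabla\cdot\left(\sigma_i \nabla \phi_e\right)
  &= \chi\left(C_m \frac{\partial V_m}{\partial t} + I_{\mathrm{ion}}(V_m, \mathbf{w})\right), \\
\nabla\cdot\left(\sigma_i \nabla V_m\right) + \nabla\cdot\left((\sigma_i + \sigma_e) \nabla \phi_e\right)
  &= 0, \\
\frac{\partial \mathbf{w}}{\partial t} &= \mathbf{g}(V_m, \mathbf{w}).
\end{aligned}
```

The second line expresses conservation of current between the two domains, and the cell model ($I_{\mathrm{ion}}$, $\mathbf{g}$) is where ion-channel-level knowledge enters the organ-scale model.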

There are clinical needs that can be solved with a single modelling approach.

But both mechanistic and statistical models have limitations that can be addressed by combining them. Mechanistic models are constrained by their premises (assumptions and principles), while statistical models are constrained by the observations available (the amount and diversity of data). A mechanistic model may be a good choice when a good understanding of the system is available. A statistical model, on the other hand, can serve to find predictive relations even when the underlying mechanisms are poorly understood or are too complex to be modelled mechanistically. The rest of the article describes the synergies between mechanistic and statistical models (see Figure 2 for an overview), motivated by actual clinical problems and needs, with specific representative components of the digital twin. The Supplementary material online reviews the model synergies for exploiting and integrating clinical data.

Figure 2 — Conceptual summary of the main benefits of digital twin technologies.

Mechanistic and statistical model synergy for improving clinical decisions

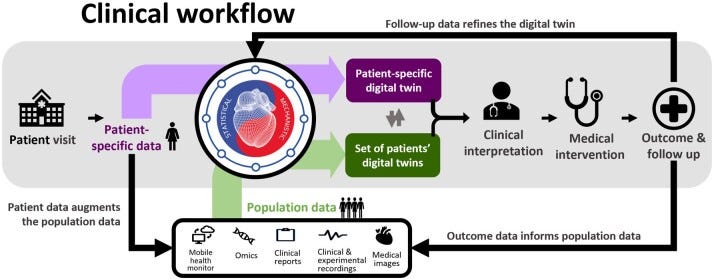

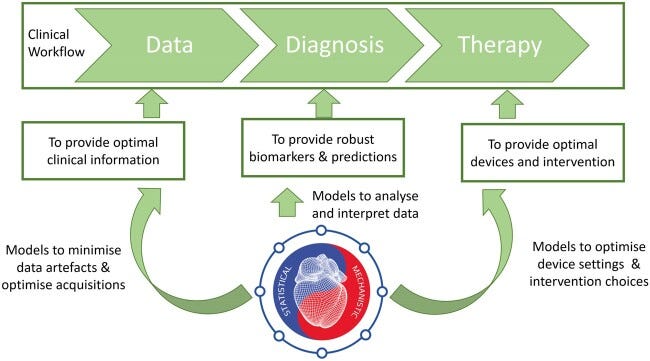

Technical, ethical, and financial constraints limit the data acquisition needed to assist clinical decision-making. 14,15 Synergy between mechanistic and statistical models has shown value in aiding diagnosis, treatment, and prognosis evaluation. A fully developed digital twin will combine population and individual representations to optimally inform clinical decisions ( Figure 3 ).

Figure 3 — Envisioned clinical workflow using the fully developed digital twin concept

Model synergy in aiding diagnosis

Models can pinpoint the most valuable piece of diagnostic data. An example is the simulation study that suggested that fibrosis and other pulmonary vein properties may better characterize susceptibility to atrial fibrillation. 24 Models can also reliably infer biomarkers that cannot be directly measured or that require invasive procedures. For instance, the combination of cardiovascular imaging and computational fluid dynamics enables non-invasive characterization of flow fields and the calculation of diagnostic metrics in the domains of coronary artery disease, aortic aneurysm, aortic dissection, valve prostheses, and stent design. 25–29

The key to guiding diagnosis is the personalization of a mechanistic model to the actual health status of the patient, as captured in the available clinical data. In this personalization process, statistical models enable robust and reproducible analysis of clinical data and infer missing parameters. An example of this synergy is the assessment of left ventricular myocardial stiffness and decaying diastolic active tension by fitting mechanical models to pressure data and images during diastole. 30,31 Another example is the non-invasive computation of pressure drops in flow obstructions, 32,33 such as aortic stenosis or aortic coarctation, which has been shown to be more accurate than the methods recommended in clinical guidelines. 34 Models have also been used to derive fractional flow reserve from computed tomography (CT) to non-invasively identify ischaemia in patients with suspected coronary artery disease, avoiding invasive catheterization. 29,35–37
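The personalization step can be illustrated with a deliberately small sketch: fitting the two parameters of an exponential end-diastolic pressure-volume relation, P = a(exp(b(V − V0)) − 1), to a handful of diastolic pressure-volume points. This phenomenological relation is our assumed stand-in for the finite-element mechanics models of the cited studies 30,31; the function names, the synthetic data, and the assumption that V0 is known are all hypothetical.

```python
import numpy as np


def edpvr(volume, a, b, v0):
    """Exponential end-diastolic pressure-volume relation: P = a * (exp(b * (V - V0)) - 1)."""
    return a * (np.exp(b * (volume - v0)) - 1.0)


def personalize_edpvr(volume, pressure, v0, b_grid):
    """Fit the scale a (mmHg) and stiffness exponent b (1/mL) to measured P-V points.

    For a fixed b the model is linear in a, so a has a closed-form
    least-squares solution; only b is searched over a grid.
    """
    best_sse, best_a, best_b = np.inf, None, None
    for b in b_grid:
        basis = np.exp(b * (volume - v0)) - 1.0
        a = (basis @ pressure) / (basis @ basis)  # least-squares optimum for this b
        sse = np.sum((pressure - a * basis) ** 2)
        if sse < best_sse:
            best_sse, best_a, best_b = sse, a, b
    return best_a, best_b


# Synthetic "patient" data (hypothetical values, for illustration only)
rng = np.random.default_rng(0)
v0 = 50.0                              # unloaded volume in mL, assumed known
volume = np.linspace(60.0, 150.0, 10)  # diastolic volumes in mL
pressure = edpvr(volume, a=0.5, b=0.03, v0=v0) + rng.normal(0.0, 0.2, volume.size)

b_grid = np.linspace(0.005, 0.08, 301)
a_hat, b_hat = personalize_edpvr(volume, pressure, v0, b_grid)
print(f"a = {a_hat:.2f} mmHg, b = {b_hat:.4f} 1/mL")
```

Separating the linear parameter from the nonlinear one keeps the search one-dimensional; a real personalization pipeline would use a gradient-based or Bayesian optimizer and a far richer model.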

Some diagnostic medical devices based on personalized mechanistic models have already reached industrial translation and clinical adoption. HeartFlow FFRCT Analysis (HeartFlow, USA) and CardioInsight (Medtronic, USA) use patient-specific mechanistic models to non-invasively calculate clinically relevant diagnostic indices and have received clearance from the US Food and Drug Administration (FDA). 38 HeartFlow predicts fractional flow reserve by means of a personalized 3D model of blood flow in the coronary arteries. 36 In the CardioInsight mapping system, the electrical activity on the heart surface is recovered from body surface potentials using a personalized model of the patient’s heart and torso. 39
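The index such tools report can be sketched in a few lines: fractional flow reserve is the ratio of mean distal coronary pressure to mean aortic pressure under hyperaemia, and a value of 0.80 or below is commonly used to call a stenosis haemodynamically significant. In HeartFlow the distal pressure comes from a 3D blood-flow simulation; in this sketch it is simply a synthetic array, so only the final index computation is shown, not the model. The function and variable names are hypothetical.

```python
import numpy as np

FFR_THRESHOLD = 0.80  # cut-off commonly used for a haemodynamically significant stenosis


def fractional_flow_reserve(p_aortic, p_distal):
    """FFR as the ratio of mean distal coronary pressure to mean aortic pressure.

    Both arrays are assumed to be sampled over whole cardiac cycles under
    (measured or simulated) hyperaemia.
    """
    return float(np.mean(p_distal) / np.mean(p_aortic))


# Synthetic pressure traces in mmHg over one cycle (hypothetical values)
t = np.linspace(0.0, 1.0, 200, endpoint=False)
p_aortic = 93.0 + 20.0 * np.sin(2.0 * np.pi * t)
p_distal = 0.75 * p_aortic  # stands in for a simulated distal pressure

ffr = fractional_flow_reserve(p_aortic, p_distal)
print(f"FFR = {ffr:.2f}; significant: {ffr <= FFR_THRESHOLD}")
```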

Model synergy in guiding treatments

A digital twin may indicate whether a medical device or pharmaceutical treatment is appropriate for a patient by simulating device response or dosage effects before a specific therapy is selected.

The benefits of cardiac resynchronization therapy (CRT) have been demonstrated in patients with prolonged QRS duration. However, uncertainty remains in patients with more intermediate electrocardiogram (ECG) criteria. 40 To guide decision-making in this ‘grey zone’, approaches using mechanistic modelling have investigated the role of different aetiologies of mechanical discoordination in CRT response. 10 For example, a novel radial strain-based metric was defined based on simulations of the human heart and circulation to differentiate patterns of mechanical discoordination, suggesting that the response to CRT could be predicted from the presence of non-electrical substrates. 11 Statistical methods were used to verify these findings in a clinical cohort, and the novel index remained useful in predicting response in the clinical ‘grey zone’, creating the opportunity to improve patient selection in the group with intermediate ECG.

Another example is the improvement in ablation guidance for infarct-related ventricular tachycardia, where patient-specific optimal targets are accurately identified before the clinical procedure. 41 Mechanistic models can propose novel electro-anatomical mapping indices to locate critical sites of re-entry formation in scar-related arrhythmias, aid the acquisition and quantitative interpretation of electrophysiological data, and optimize their future clinical use. 42

The industrial translation and clinical adoption of models for guiding treatment are exemplified by the optimal planning of valve prosthesis implantation with the HEARTguide™ platform (FEops nv, Belgium), and by the platform to guide ventricular tachycardia ablations (inHeart, France).

Model synergy in evaluating prognosis

While statistical modelling allows patients to be categorized based on the probability of various outcomes, mechanistic modelling provides further insight to support or reject the categorization.

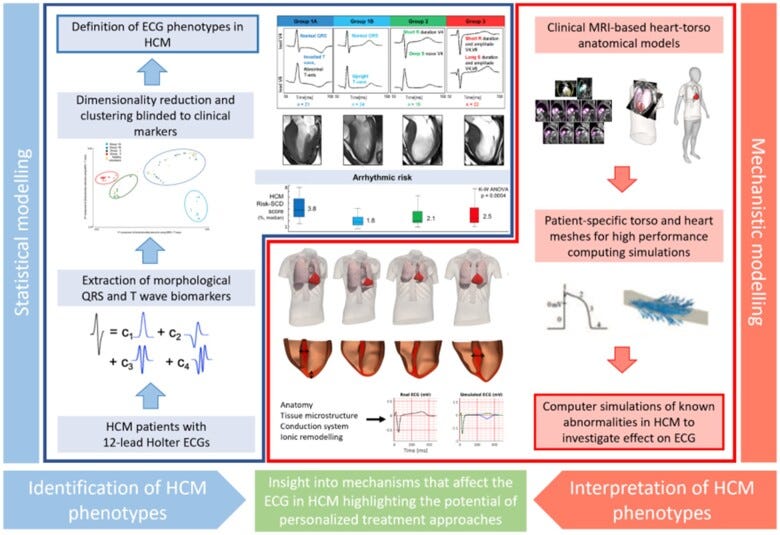

For example, model synergies represent an exciting approach to interpret structure-function relationships and improve risk prediction in inherited disease conditions, such as hypertrophic cardiomyopathy (HCM). Relationships among specific ECG changes, ventricular morphologies, and sudden cardiac death have been inferred from observations. 43,44 However, the complex process by which the underlying heterogeneous substrates in HCM translate into ECG findings is still poorly understood, and there exists a ‘grey zone’ of clinical decision-making in the low-risk patient subgroups, specifically when deciding whether to restrict involvement in professional sports. 45 In this context, by using methods of statistical inference and mathematical modelling (see Figure 4), HCM patients were categorized into phenogroups based on ECG biomarkers extracted from 24-h ECG recordings, 48 and the aetiology of each ECG phenogroup was linked to different underlying substrates, suggesting ion-channel and conduction system abnormalities. 46,47 The results directly highlighted the potential of personalized anti-arrhythmic approaches in the treatment of HCM patients, and addressed the low-risk patients, showing that a normal ECG might indeed be the discriminatory factor signalling minimal ionic remodelling, fibrosis, disarray, and ischaemia in these ‘grey zone’ patients.
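The phenogrouping step can be sketched with k-means clustering on standardized ECG biomarkers. This is a swapped-in, generic unsupervised method; the cited study's actual clustering technique and feature set are not reproduced here, and the two features (QRS duration and QTc interval) and their synthetic values are hypothetical.

```python
import numpy as np


def standardize(features):
    """Z-score each biomarker so that no single unit or scale dominates distances."""
    return (features - features.mean(axis=0)) / features.std(axis=0)


def kmeans(x, k, n_iter=100, seed=0):
    """Plain Lloyd's k-means: returns a cluster label per patient and the centroids."""
    rng = np.random.default_rng(seed)
    centroids = x[rng.choice(len(x), size=k, replace=False)]
    for _ in range(n_iter):
        # Assign each patient to the nearest centroid (Euclidean distance)
        dists = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Move each centroid to the mean of its members (keep it if the cluster is empty)
        new_centroids = np.array([
            x[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids


# Hypothetical biomarkers per patient: [QRS duration (ms), QTc interval (ms)]
rng = np.random.default_rng(1)
group_a = rng.normal([95.0, 420.0], [5.0, 8.0], size=(20, 2))
group_b = rng.normal([130.0, 480.0], [5.0, 8.0], size=(20, 2))
biomarkers = np.vstack([group_a, group_b])

labels, _ = kmeans(standardize(biomarkers), k=2)
print(labels)
```

In the workflow described above, the statistical step stops at the labels; explaining why each phenogroup looks the way it does is the job of the mechanistic simulations.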

Figure 4 — Synergy between mechanistic and statistical models in the definition of electrocardiogram (ECG) biomarkers for the management of hypertrophic cardiomyopathy. 47,48

Models have also been used to predict arrhythmic events after myocardial infarction, outperforming existing clinical metrics including ejection fraction. 49 When the amount of data is not sufficient to inform state-of-the-art machine learning methods, simpler statistical methods can still prove useful. An example is the use of principal component analysis to account for right ventricular motion in predicting survival in pulmonary hypertension, 50 or to identify signatures of anatomical remodelling that predict a patient’s prognosis following CRT implantation. 51
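Principal component analysis, as used in the studies above, can be sketched with a singular value decomposition of the centred data matrix: each row is one patient's flattened shape or motion vector, and the leading modes summarize the dominant patterns of variation. The synthetic cohort below, built around a single dominant remodelling mode, is a hypothetical illustration, not data from the cited work.

```python
import numpy as np


def pca(data, n_modes):
    """PCA of flattened shape or motion vectors (one row per patient).

    Returns the mean, the first n_modes modes of variation (rows), each
    patient's scores on those modes, and the fraction of variance explained.
    """
    mean = data.mean(axis=0)
    centred = data - mean
    # Rows of vt are orthonormal principal directions, ordered by singular value
    _, s, vt = np.linalg.svd(centred, full_matrices=False)
    explained = s**2 / np.sum(s**2)
    modes = vt[:n_modes]
    scores = centred @ modes.T
    return mean, modes, scores, explained[:n_modes]


# Synthetic cohort: 30 patients x 50 coordinates, dominated by one mode of remodelling
rng = np.random.default_rng(1)
true_mode = rng.normal(size=50)
true_mode /= np.linalg.norm(true_mode)
severity = rng.normal(scale=5.0, size=30)
shapes = 10.0 + np.outer(severity, true_mode) + rng.normal(scale=0.1, size=(30, 50))

mean_shape, modes, scores, explained = pca(shapes, n_modes=2)
print(f"variance explained by first two modes: {explained.round(3)}")
```

In a prognostic study, the low-dimensional scores (rather than the raw coordinates) would then serve as predictors in a survival or outcome model, which is what makes PCA attractive when cohorts are small.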

While statistical models allow predictions, mechanistic models provide the underlying explanations. Understanding the actual meaning of the selected features improves the plausibility of findings and increases their credibility. For both approaches, quantifying uncertainty of prediction can help identify cases that may require further review, while building trust in cases where models are shown to be robust. 52,53
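One simple, model-agnostic way to quantify predictive uncertainty, assumed here for illustration rather than taken from refs 52,53, is a bootstrap ensemble: the model is refitted on resampled training data, and the spread of the ensemble's predictions flags cases that may need further review. A linear model and synthetic data keep the sketch short; the review threshold of 0.2 is hypothetical.

```python
import numpy as np


def bootstrap_ensemble(x, y, n_models=200, seed=0):
    """Fit one least-squares line (intercept, slope) per bootstrap resample."""
    rng = np.random.default_rng(seed)
    design = np.column_stack([np.ones_like(x), x])
    coefs = []
    for _ in range(n_models):
        idx = rng.integers(0, len(x), size=len(x))  # resample patients with replacement
        coef, *_ = np.linalg.lstsq(design[idx], y[idx], rcond=None)
        coefs.append(coef)
    return np.array(coefs)  # shape (n_models, 2)


def predict_with_spread(coefs, x_new):
    """Ensemble mean prediction and its standard deviation at each new input."""
    x_new = np.atleast_1d(x_new)
    preds = coefs[:, [0]] + coefs[:, [1]] * x_new[None, :]
    return preds.mean(axis=0), preds.std(axis=0)


# Synthetic training data covering inputs between 0 and 1
rng = np.random.default_rng(0)
x_train = rng.uniform(0.0, 1.0, 40)
y_train = 1.0 + 2.0 * x_train + rng.normal(0.0, 0.2, 40)

coefs = bootstrap_ensemble(x_train, y_train)
mean, spread = predict_with_spread(coefs, np.array([0.5, 5.0]))
needs_review = spread > 0.2  # hypothetical threshold for referring a case to an expert
print(mean.round(2), spread.round(3), needs_review)
```

The input inside the training range receives a narrow spread, while the extrapolated input receives a wide one and is flagged, which mirrors the idea of building trust where the model is robust and escalating elsewhere.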

Mechanistic and statistical model synergy to accelerate evidence generation

While digital twin technologies in cardiology show promising research results, only a small number of models have reached clinical translation. The difficulties encountered include the need to increase validation, lack of clinical interpretability, and potentially obscure model failures. 54 Therefore, solid evidence for the generalization of preliminary findings and efficient testing strategies are needed. Even when these barriers are overcome, rigid assessment of algorithmic performance and quality control from regulatory bodies can slow down the adoption. In this context, model synergy can be used to accelerate the integration of novel technologies into clinical practice by increasing clinical interpretability, validating generality of findings, and accelerating regulatory decision-making.

Model validation towards generality of findings

The goal after validating an initial concept is to extend it to a more general patient cohort with less controlled characteristics. The problem of sampling bias, arising from both intrinsic (physiological) and extrinsic (environmental) demographic heterogeneity of the population, becomes relevant when implementing solutions for broader patient cohorts. 55,56 Consequently, models (like clinical guidelines) may need recalibration when used on populations from different countries or ethnicities, or even from different centres in the same country. In recent years, only 6% of artificial intelligence algorithms had undergone external evaluation (note that this goes beyond the minimum requirement of partitioning the data into learning, validation, and testing sets), and none adopted all three design criteria of a robust validation: diagnostic cohort design, the inclusion of multiple institutions, and prospective data collection. 57 The quality of datasets also needs to be thoroughly validated to avoid possible biases before the models developed from them can be integrated into clinical decision-making. 58
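Recalibration for a new centre can take several forms. One of the simplest, sketched below as an assumption rather than a method from the cited studies, is calibration-in-the-large: the model's intercept is shifted on the logit scale so that the mean predicted risk matches the event rate observed in the new population, while the ranking of patients is left unchanged. The cohort values are synthetic and the function names hypothetical.

```python
import numpy as np


def logit(p):
    return np.log(p / (1.0 - p))


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def recalibrate_intercept(pred_probs, outcomes, lo=-10.0, hi=10.0, tol=1e-10):
    """Find delta so that mean(sigmoid(logit(p) + delta)) equals the observed event rate.

    The mean recalibrated risk increases monotonically with delta, so bisection works.
    """
    target = np.mean(outcomes)
    z = logit(np.clip(pred_probs, 1e-12, 1.0 - 1e-12))
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if np.mean(sigmoid(z + mid)) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# External centre (synthetic): the original model predicts about 10% risk,
# but events occur in about 20% of patients
rng = np.random.default_rng(0)
pred_external = rng.uniform(0.05, 0.15, 500)
observed = (rng.random(500) < 0.2).astype(float)

delta = recalibrate_intercept(pred_external, observed)
recalibrated = sigmoid(logit(pred_external) + delta)
print(f"mean predicted {pred_external.mean():.3f} -> {recalibrated.mean():.3f}, "
      f"observed {observed.mean():.3f}")
```

Intercept-only recalibration fixes systematic over- or under-estimation but not poor discrimination; the latter can only be revealed by the kind of external evaluation discussed above.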

To address this issue, an increasing number of institutions are launching data-sharing platform initiatives, aiming at reusing existing datasets and verifying published research. 59 Governments, regulatory agencies, and philanthropic funders are promoting an open science culture by requiring the publication of patient-level data as a condition for product launch, funding applications, and journal publication. 60

Another approach to improve the generality of data is the generation of synthetic cases representative of a wider population. The core idea is to expand the average mechanistic model into populations of models, all of them parameterized within the range of physiological variability obtained by experimental protocols. 61,62 Such an approach, which allows many more scenarios to be investigated than is possible with experimental acquisitions, is able not only to evaluate the impact of physiological variability but also to explain the mechanisms underpinning inter-individual variability in therapy response (e.g. adverse drug reactions), and to identify sub-populations at higher risk. 63,64 Statistical shape modelling techniques can represent inter-patient anatomical variability for a cohort, and can be used in combination with mechanistic models in clinical decision support systems. 65
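The populations-of-models idea can be sketched with a deliberately simple mechanistic model, a two-element Windkessel of the arterial system, in place of the cellular electrophysiology models of the cited studies. Parameters are sampled within assumed ranges, every model is simulated, and only models whose outputs fall within a (hypothetical) experimentally observed range are retained. All numerical values, including the inflow waveform and acceptance ranges, are illustrative assumptions.

```python
import numpy as np

PERIOD = 0.8    # s, cardiac cycle length (assumed)
T_EJECT = 0.3   # s, ejection duration (assumed)
Q_MAX = 366.0   # mL/s, peak aortic inflow (assumed; about 70 mL stroke volume)


def aortic_inflow(t):
    """Half-sine inflow during ejection, zero during diastole."""
    phase = t % PERIOD
    return np.where(phase < T_EJECT, Q_MAX * np.sin(np.pi * phase / T_EJECT), 0.0)


def simulate_windkessel(r, c, n_beats=40, dt=1e-3, p0=80.0):
    """Two-element Windkessel, C dP/dt = Q(t) - P/R, integrated with forward Euler.

    r (mmHg*s/mL) and c (mL/mmHg) are arrays with one entry per model, so the
    whole population is simulated at once. Returns the maximum and minimum
    pressure (mmHg) over the final beat as systolic and diastolic estimates.
    """
    n_steps = int(round(n_beats * PERIOD / dt))
    steps_per_beat = int(round(PERIOD / dt))
    p = np.full(r.shape, p0)
    p_sys = np.full(r.shape, -np.inf)
    p_dia = np.full(r.shape, np.inf)
    for step in range(n_steps):
        p = p + dt * (aortic_inflow(step * dt) - p / r) / c
        if step >= n_steps - steps_per_beat:  # record extrema over the last beat only
            p_sys = np.maximum(p_sys, p)
            p_dia = np.minimum(p_dia, p)
    return p_sys, p_dia


# Population of models: sample parameters within assumed physiological ranges
rng = np.random.default_rng(42)
n_models = 500
r = rng.uniform(0.5, 2.0, n_models)
c = rng.uniform(0.5, 3.0, n_models)
p_sys, p_dia = simulate_windkessel(r, c)

# Calibration: keep only models consistent with (hypothetical) measured ranges
accepted = (p_sys >= 100) & (p_sys <= 140) & (p_dia >= 60) & (p_dia <= 90)
print(f"{accepted.sum()} of {n_models} models accepted")
```

The accepted parameter sets, rather than a single average model, then represent the variability of the population and can be interrogated for sub-groups that respond differently to an intervention.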

As in traditional scientific research, mechanistic and statistical models are complementary tools to verify each other's findings. Finding a mechanistic explanation for an inductive inference from statistical models increases its plausibility, as with the redistribution of work in left bundle branch block that explains the remodelling pattern predicting response to CRT. 51 Likewise, data computed from mechanistic models need to be scrutinized quantitatively, as was done in the comparison of clinical and simulation groups to validate a model for acute normovolaemic haemodilution. 66

An important final remark is that randomized controlled trials will always be needed to establish evidence that cannot be obtained from large observational databases. 67

Models as critical tools for accelerating regulatory decision-making

Clinical decisions are built on evidence from bench to bedside. Regulatory decisions, by contrast, are often based on heterogeneous, limited, or completely absent human data, as in the case of approval for first-in-human clinical trials. In this regard, the results of computational models can now be accepted for some regulatory submissions. 68,69 Digital evidence obtained using computer simulations can be used to assess the safety of a therapy prior to first-in-human use, or under scenarios that would not be ethically possible in humans. 38 Computational models have an increasingly important role in overall product life cycle management, proving useful in design optimization for development and testing, supplemental non-clinical testing, and post-market design changes and failure assessment. 27

The development process for medical devices involves manufacturing and testing samples under a wide range of scenarios, which is often time-consuming and financially overwhelming. Moreover, pre-clinical testing conditions are often highly simplified with respect to the actual patient environment. Statistical and mechanistic models together offer a way to streamline this process: statistical models can be used to generate a representative virtual patient cohort, and mechanistic models can then simulate the device behaviour under defined scenarios. In this way, new devices can be tested in a representative virtual patient population, thereby decreasing the risk before moving to an actual clinical trial. An example is HEARTguide™ (FEops nv, Belgium), with which device-patient interactions after transcatheter aortic valve implantation can be predicted. 25

The augmentation of clinical trial design with virtual patients is also an evolving idea. 70–72 This would overcome limitations of current empirical trials, where patients burdened with comorbidities or complex treatment regimens are often excluded, and enrolled individuals are handled under reductionist approaches that assume they share a common phenotype. Such approaches often fail to capture differences in response to treatment. 70 Alternatively, computational evidence can inform the collection of novel evidence from clinical trials, 13,38 where models can improve patient selection through derived biomarkers and predictions. This offers an opportunity to answer questions traditionally restricted by financial or ethical considerations, and to investigate therapy efficacy in more clinically relevant cases. Computational modelling can also provide safe ways to explore treatment effects in sub-populations that are clinically more complex to address, such as patients with rare diseases or paediatric cohorts, and may therefore allow insights that are not possible in current clinical trial practice.

One of the first examples in which digital evidence (i.e. an in silico trial) replaced the need for additional clinical evidence was the approval of the Advisa MRI SureScan pacemaker (Medtronic, Inc.). 73 Another powerful example is a computer simulator of type 1 diabetes mellitus, 74 which was accepted by the FDA as a substitute for animal trials in the pre-clinical testing of control strategies in artificial pancreas studies. Later, an investigational device exemption (i.e. the approval needed to initiate a clinical study), issued solely on the basis of in silico testing, was granted by the FDA for a closed-loop control clinical trial of the safety and effectiveness of the proposed artificial pancreas algorithm.

In the context of drug safety and efficacy assessment, an unmet need is bridging the gap between observations in animals or in vitro preparations and the prediction of the human response. Mechanistic models may assist in translating such observations to humans. 75 This is, for example, the goal of the CiPA initiative, 69 sponsored by the FDA among others, which aims to facilitate the adoption of a new paradigm for assessing the potential risk of clinical Torsades de Pointes, in which mechanistic models of human electrophysiology will play a crucial role. This is reinforced by a recent study in which human in silico trials outperformed animal models in predicting clinical pro-arrhythmic cardiotoxicity, suggesting that such trials might soon be integrated into existing drug safety assessment pipelines. 63
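In many in silico drug trials, drug effects enter a human cell model through a simple pore-block assumption: each ionic conductance is scaled by the fraction of channels left unblocked, computed from the drug's IC50 and Hill coefficient (CiPA itself additionally uses a dynamic hERG binding model for IKr, which is not shown). The sketch covers only this scaling step; the drug profile and concentration are hypothetical, and the action potential model that would consume the factors is not included.

```python
import numpy as np


def unblocked_fraction(concentration, ic50, hill=1.0):
    """Simple pore-block model: fraction of a channel's conductance left unblocked."""
    return 1.0 / (1.0 + (np.asarray(concentration, dtype=float) / ic50) ** hill)


# Hypothetical drug profile: (IC50 in uM, Hill coefficient) per ionic current
drug_profile = {
    "IKr": (1.0, 1.0),
    "ICaL": (10.0, 1.0),
    "INa": (30.0, 1.0),
}

concentration = 1.0  # uM, hypothetical free plasma concentration
scaling = {
    current: float(unblocked_fraction(concentration, ic50, h))
    for current, (ic50, h) in drug_profile.items()
}
# Each factor would multiply the maximal conductance of that current in a
# human ventricular action potential model (not included here).
print(scaling)
```

Running the same scaling across a calibrated population of cell models is what turns a single prediction into an in silico trial with a distribution of responses.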

Finally, after a product is launched, mechanistic models can still be used for post-market re-evaluation and failure assessment in order to identify any potential underlying problems. This creates a valuable opportunity for simulations to evaluate any design changes planned for next-generation products, ultimately closing the product life cycle loop and demonstrating the ubiquitous presence and utility of statistical and mechanistic models in the future of medical product regulation.

Discussion

The digital twin, i.e., the dynamic integration and augmentation of patient data using mechanistic and statistical models, is the actual pathway towards the vision of precision medicine.

Simple and fragmented components of the digital twin are already used in clinical practice: a decision tree in a clinical guideline encapsulates the best-documented evidence, which is based on statistical and mechanistic insights. The digital twin will gradually include tailored computer-enabled decision points, and create the transition from healthcare systems founded on describing disease to healthcare systems focused on predicting response, thus shifting treatment selection from being based on the state of the patient today to optimizing the state of the patient tomorrow.

Envisioned impact and timeline

The digital twin provides a pathway to map current patient observations into a predictive framework, combining inductive and deductive reasoning.

Early components of the digital twin are already making a clinical impact. In a generic clinical workflow divided into the stages of data acquisition, diagnosis, and therapy planning, computational models can provide value in all three stages (see Figure 5). To improve data acquisition, there are already statistical models that automate image analysis tasks. 16 To provide better diagnosis, a virtual fractional flow reserve can replace an invasive catheter measurement, 29,37 or body surface recordings can be mapped onto the surface of the heart. 39 With regard to therapy planning, the virtual deployment of a valve replacement 25,76 or a roadmap to guide ablation procedures 77,78 represent existing techniques (statistical and mechanistic) that have been implemented into the clinical workflow. These solutions have thus received regulatory approval, under the category of ‘software as a medical device’, for which guidelines from the International Medical Device Regulators Forum are accepted by the EU and the USA.

Figure 5 — The vision of a personalized in silico cardiology, where the digital twin informs all the stages through the clinical workflow.

Take home figure — The cardiovascular digital twin that will deliver the vision of precision medicine by the synergetic combination of computer-enhanced induction (using statistical models learnt from data) and deduction (mechanistic modelling and simulation integrating multi-scale knowledge).

A digital twin will follow the life journey of each person and harness both data collected by wearable sensors and lifestyle information that patients may register, shifting the clinical approach towards preventive healthcare.

A notable challenge is the integration of these data with healthcare organizations, where security and confidentiality of the sensitive information remain paramount.

The currently still fragmented and incipient concept of the digital twin will be gradually crystallized and adopted during the next 5–10 years.

The holistic integration of a digital twin is the aspiration that will be reached through two complementary and synergistic pathways: the first is the refinement of key decision points in the management of cardiac disease, driven by personalized mechanistic models informed by key pieces of the patient’s data; the second is the disease-centred optimization of the patient’s lifetime journey through the healthcare system, driven by statistical models informed by the electronic health records of a large population.

On the actual implementation of the digital twin, we envision that the evolution will be towards a gradually better inter-operability of current health information systems, leading to a distributed location of the information.

Digital twin users will mainly be citizens and physicians, with different interfaces that retrieve the relevant data and trigger the analysis capabilities hosted in the local device or remote cloud resources. The analysis may also require specialized skills that may be delivered by industry, or even by computational cardiologists inside healthcare organizations.

Organizational and societal challenges ahead

Access to data is the main challenge in both the development and the clinical translation of the digital twin, for infrastructural, regulatory, and societal reasons.

Information systems and electronic health records are fragmented, highly heterogeneous, and difficult to inter-operate. Information is often stored in unstructured formats, and its extraction requires either manual work or further research into automation through natural language processing technologies. 79 Simulations may also require specialized skills and supercomputers. In this context, digital twin technologies may be delivered through cloud infrastructures (e.g. HeartFlow FFRCT Analysis).
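To make the extraction problem concrete, the simplest form of automation is a rule-based baseline rather than the statistical natural language processing methods referred to above: a regular expression that pulls left ventricular ejection fraction values out of free-text reports. The pattern and function name are hypothetical, and such rules miss many real-world phrasings, which is precisely why more robust NLP research is needed.

```python
import re

# Matches phrases such as "LVEF 35%", "ejection fraction of 40 %", "EF: 55%"
EF_PATTERN = re.compile(
    r"\b(?:LVEF|EF|ejection fraction)\b\D{0,20}?(\d{1,2}(?:\.\d+)?)\s*%",
    re.IGNORECASE,
)


def extract_ejection_fraction(note):
    """Return all ejection-fraction values (in %) mentioned in a free-text note."""
    return [float(value) for value in EF_PATTERN.findall(note)]


report = "Echo: LVEF 35%. Previous ejection fraction of 40 % in 2019."
print(extract_ejection_fraction(report))
```

The lazy `\D{0,20}?` gap tolerates short connecting words such as "of" or a colon, while the requirement of a trailing percent sign avoids capturing years or other numbers in the note.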

Consent and confidentiality are key ingredients to address the societal concerns when handling the personal data needed to develop and validate digital twin technologies.

The EU General Data Protection Regulation (GDPR) has imposed new legal requirements, such as the right to withdraw consent and the right to be forgotten, causing controversy about the cost and feasibility of its enforcement. 80 Any digital twin solution that holds enough information to identify a patient needs to carefully observe these requirements, which also apply to retrospective data and safety backups.

Potential professional, cultural, and ethical issues

As more clinical tasks are performed by models, the fear of replacement of physicians by machines may arise.

In some scenarios, machines may match or even outperform physicians. 81 In other scenarios, human experts, by not practising on the easy problems solved by the machine, may lose the skills that may still be needed when dealing with difficult cases.

The second professional barrier is the mistrust that originates from a ‘black box’, where predictions derived by algorithms are not matched with a plausible explanation.

Generation of evidence is one clear way to generate trust. Another solution is to use methods to illustrate the logic inside the box, including clustering and association techniques, 82 which may help to identify the causes and mechanisms.

From the patient’s perspective, personalization creates the opportunity of more involvement in healthcare decisions.

Patients will be empowered to better manage their disease using the digital twin to gain information about their current and predicted state, and potentially to adopt optimized lifestyle suggestions. A well-informed patient shall have more efficient discussions with physicians, and consent and decide faster on diagnostic or treatment procedures.

Finally, on the ethical side, there is a risk that models may create or exacerbate existing racial or societal biases in healthcare systems: if a group is misrepresented in the data used to train models, that group may receive sub-optimal treatment. 83

Recommendations

The pathway to accelerate the clinical impact with digital twin technologies is to generate trust among researchers, clinicians, and society.

Research communities shall avoid inflating expectations. Claims about generality and potential impact should be based on rigorous methodology, with external cohorts to demonstrate the validity of inferences, and with the quantification of the uncertainty of predictions. 84 Any model is a simplified representation of reality, with a limited scope and a dependence on the assumptions made. The opportunity lies in handling these limitations adequately, with models able to identify data inconsistencies, and with data used to constrain and verify the model assumptions. 85

As an emerging field, the digital twin needs guidelines, gold standards, and benchmark tests. 86,87

Scientific organizations and regulatory bodies have released guidelines that can be used to establish the level of rigour needed for computational modelling. 27 Such guidelines and standards are useful tools: they allow regulators to judge computational evidence and industry to understand the regulatory requirements for computational models, reducing a substantial part of the risk and uncertainty associated with the development of these new technologies. They can also increase the translational impact of these technologies, because adhering to such guidance during model development improves the quality and robustness of the models and of their reporting. Further effort is needed to widen the scope of these first multi-stakeholder consensuses involving industry, academia, and regulators. Current initiatives that develop visions, technologies, or infrastructure relevant to the ‘digital twin’ community are Elixir (https://elixir-europe.org/), FAIRDOM (https://fair-dom.org/), and EOSC (https://ec.europa.eu/research/openscience/index.cfm?pg=open-science-cloud).

Educating citizens, care providers, physicians, and researchers in the uses and possibilities of digital twin technologies is key to their adoption and acceptance.

University education systems should also allow for the exchange of knowledge at the earliest stages of a career: medical students should receive some computational training, just as engineers in the biomedical industry should be trained in cardiology during their studies. 88 Postgraduate training programmes, such as our EU-funded Personalised In-silico Cardiology Innovative Training Network (https://picnet.eu), should bridge the remaining cultural and language gaps between disciplines.

Conclusion

Precision cardiology will be delivered, not only by data, but also by the inductive and deductive reasoning built in the digital twin of each patient.

Treatment and prevention of cardiovascular disease will be based on accurate predictions of both the underlying causes of disease and the pathways to sustain or restore health. These predictions will be provided and validated by the synergistic interplay between mechanistic and statistical models. The early steps towards this vision have been taken, and the next ones depend on the coordinated drive from scientific, clinical, industrial, and regulatory stakeholders in order to build the evidence and tackle the organizational and societal challenges ahead.

About the authors & affiliations

Jorge Corral-Acero, Department of Engineering Science, University of Oxford, Oxford, UK.

Francesca Margara, Department of Computer Science, British Heart Foundation Centre of Research Excellence, University of Oxford, Oxford, UK.

Maciej Marciniak, Department of Biomedical Engineering, Division of Imaging Sciences and Biomedical Engineering, King’s College London, London, UK.

Cristobal Rodero, Department of Biomedical Engineering, Division of Imaging Sciences and Biomedical Engineering, King’s College London, London, UK.

Filip Loncaric, Institut Clínic Cardiovascular, Hospital Clínic, Universitat de Barcelona, Institut d’Investigacions Biomèdiques August Pi i Sunyer (IDIBAPS), Barcelona, Spain.

Yingjing Feng, IHU Liryc, Electrophysiology and Heart Modeling Institute, fondation Bordeaux Université, Pessac-Bordeaux F-33600, France. IMB, UMR 5251, University of Bordeaux, Talence F-33400, France.

Andrew Gilbert, GE Vingmed Ultrasound AS, Horten, Norway.

Joao F Fernandes, Department of Biomedical Engineering, Division of Imaging Sciences and Biomedical Engineering, King’s College London, London, UK.

Hassaan A Bukhari, IMB, UMR 5251, University of Bordeaux, Talence F-33400, France. Aragón Institute of Engineering Research, Universidad de Zaragoza, IIS Aragón, Zaragoza, Spain.

Ali Wajdan, The Intervention Centre, Oslo University Hospital, Rikshospitalet, Oslo, Norway.

Manuel Villegas Martinez, The Intervention Centre, Oslo University Hospital, Rikshospitalet, Oslo, Norway.

Mariana Sousa Santos, FEops NV, Ghent, Belgium.

Mehrdad Shamohammdi, CARIM School for Cardiovascular Diseases, Maastricht University, Maastricht, The Netherlands.

Hongxing Luo, CARIM School for Cardiovascular Diseases, Maastricht University, Maastricht, The Netherlands.

Philip Westphal, Medtronic PLC, Bakken Research Center, Maastricht, The Netherlands.

Paul Leeson, Radcliffe Department of Medicine, Division of Cardiovascular Medicine, Oxford Cardiovascular Clinical Research Facility, John Radcliffe Hospital, University of Oxford, Oxford, UK.

Paolo DiAchille, Healthcare and Life Sciences Research, IBM T.J. Watson Research Center, Yorktown Heights, NY, USA.

Viatcheslav Gurev, Healthcare and Life Sciences Research, IBM T.J. Watson Research Center, Yorktown Heights, NY, USA.

Manuel Mayr, King’s British Heart Foundation Centre, King’s College London, London, UK.

Liesbet Geris, Virtual Physiological Human Institute, Leuven, Belgium.

Pras Pathmanathan, Center for Devices and Radiological Health, U.S. Food and Drug Administration, Silver Spring, MD, USA.

Tina Morrison, Center for Devices and Radiological Health, U.S. Food and Drug Administration, Silver Spring, MD, USA.

Richard Cornelussen, Medtronic PLC, Bakken Research Center, Maastricht, The Netherlands.

Frits Prinzen, CARIM School for Cardiovascular Diseases, Maastricht University, Maastricht, The Netherlands.

Tammo Delhaas, CARIM School for Cardiovascular Diseases, Maastricht University, Maastricht, The Netherlands.

Ada Doltra, Institut Clínic Cardiovascular, Hospital Clínic, Universitat de Barcelona, Institut d’Investigacions Biomèdiques August Pi i Sunyer (IDIBAPS), Barcelona, Spain.

Marta Sitges, Institut Clínic Cardiovascular, Hospital Clínic, Universitat de Barcelona, Institut d’Investigacions Biomèdiques August Pi i Sunyer (IDIBAPS), Barcelona, Spain. CIBERCV, Instituto de Salud Carlos III (CB16/11/00354), CERCA Programme/Generalitat de Catalunya, Spain.

Edward J Vigmond, IHU Liryc, Electrophysiology and Heart Modeling Institute, fondation Bordeaux Université, Pessac-Bordeaux F-33600, France. IMB, UMR 5251, University of Bordeaux, Talence F-33400, France.

Ernesto Zacur, Department of Engineering Science, University of Oxford, Oxford, UK.

Vicente Grau, Department of Engineering Science, University of Oxford, Oxford, UK.

Blanca Rodriguez, Department of Computer Science, British Heart Foundation Centre of Research Excellence, University of Oxford, Oxford, UK.

Espen W Remme, The Intervention Centre, Oslo University Hospital, Rikshospitalet, Oslo, Norway.

Steven Niederer, Department of Biomedical Engineering, Division of Imaging Sciences and Biomedical Engineering, King’s College London, London, UK.

Peter Mortier, FEops NV, Ghent, Belgium.

Kristin McLeod, GE Vingmed Ultrasound AS, Horten, Norway.

Mark Potse, IHU Liryc, Electrophysiology and Heart Modeling Institute, fondation Bordeaux Université, Pessac-Bordeaux F-33600, France. IMB, UMR 5251, University of Bordeaux, Talence F-33400, France. Inria Bordeaux Sud-Ouest, CARMEN team, Talence F-33400, France.

Esther Pueyo, Aragón Institute of Engineering Research, Universidad de Zaragoza, IIS Aragón, Zaragoza, Spain. CIBER in Bioengineering, Biomaterials and Nanomedicine (CIBER‐BBN), Madrid, Spain.

Alfonso Bueno-Orovio, Department of Computer Science, British Heart Foundation Centre of Research Excellence, University of Oxford, Oxford, UK.

Pablo Lamata, Department of Biomedical Engineering, Division of Imaging Sciences and Biomedical Engineering, King’s College London, London, UK.

References and additional information

See the original publication

Originally published at https://www.ncbi.nlm.nih.gov.