health transformation institute

ai health transformation unit

Joaquim Cardoso MSc

Senior Advisor and

Chief Researcher & Editor

January 16, 2023

EXECUTIVE SUMMARY

In the book Deep Medicine, published in 2019, the author discussed the role of deep learning in transforming medicine, which was primarily focused on automated interpretation of medical images.

However, four years later, AI has advanced with large language models (LLMs), also known as “foundation models”, that can be used in medicine.

- These models are characterized by their in-context learning, meaning they can perform tasks for which they were never explicitly trained.

- In the past two years, the computation used to train these models has grown exponentially, culminating with Google’s PaLM, which used 2.5 billion petaFLOPs of training computation and has 540 billion parameters.

- In 2023, there are models with over a trillion parameters, 10,000 times larger than 2018’s BERT.

- These foundation models have the potential to revolutionize medicine by providing a diverse, integrated view of medical data that includes electronic health records, imaging, and genomics.

- The authors of the paper below conclude: “the resulting model, Med-PaLM, performs encouragingly, but remains inferior to clinicians. We show that comprehension, recall of knowledge, and medical reasoning improve with model scale and instruction prompt tuning, suggesting the potential utility of LLMs in medicine.”

It should be pointed out that it’s not exactly a clear or rapid path because there is a paucity of large or even massive medical datasets, and the computing power required to run these models is expensive and not widely available.

But the opportunity to get to machine-powered, advanced medical reasoning skills that would come in handy (an understatement) with so many tasks in medical research and patient care, such as

- generating high-quality reports and notes,

- providing clinical decision support for doctors or patients,

- synthesizing all of a patient’s data from multiple sources,

- dealing with payors for pre-authorization, and

- so many routine and often burdensome tasks,

is more than alluring.

For balance, however, there’s this quote from Phil Libin, the CEO of Evernote: “All of these models are about to shit all over their own training data. We’re about to be flooded with a tsunami of bullshit.”

One point to make is that the key concept here is augment; I can’t emphasize enough that machine doctors won’t replace clinicians. Ironically, it’s about technology enhancing the quintessential humanity in medicine.

INFOGRAPHIC

DEEP DIVE

When M.D. is a Machine Doctor

Helping medical doctors and patients in the Foundation Model A.I. era

Eric Topol

January 15, 2023

Back in 2019, I wrote Deep Medicine, a book centered on the role that deep learning will have in transforming medicine, which until now has largely been circumscribed to automated interpretation of medical images.

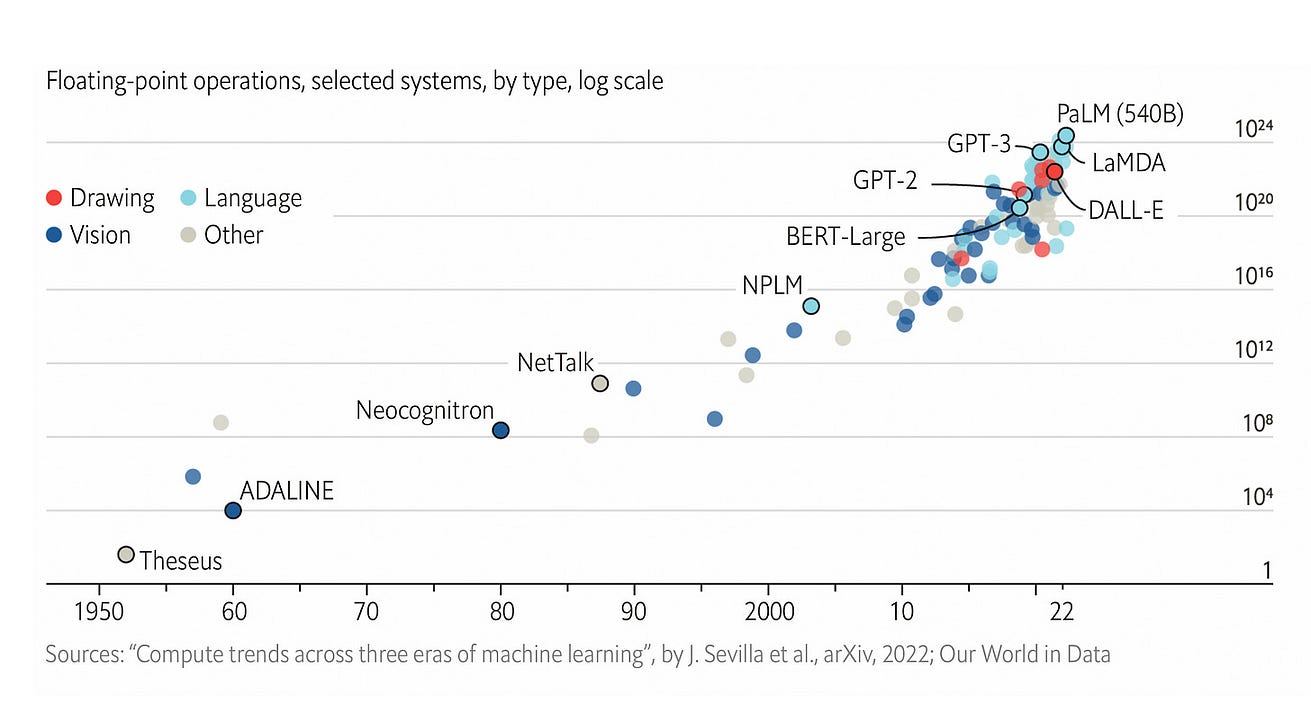

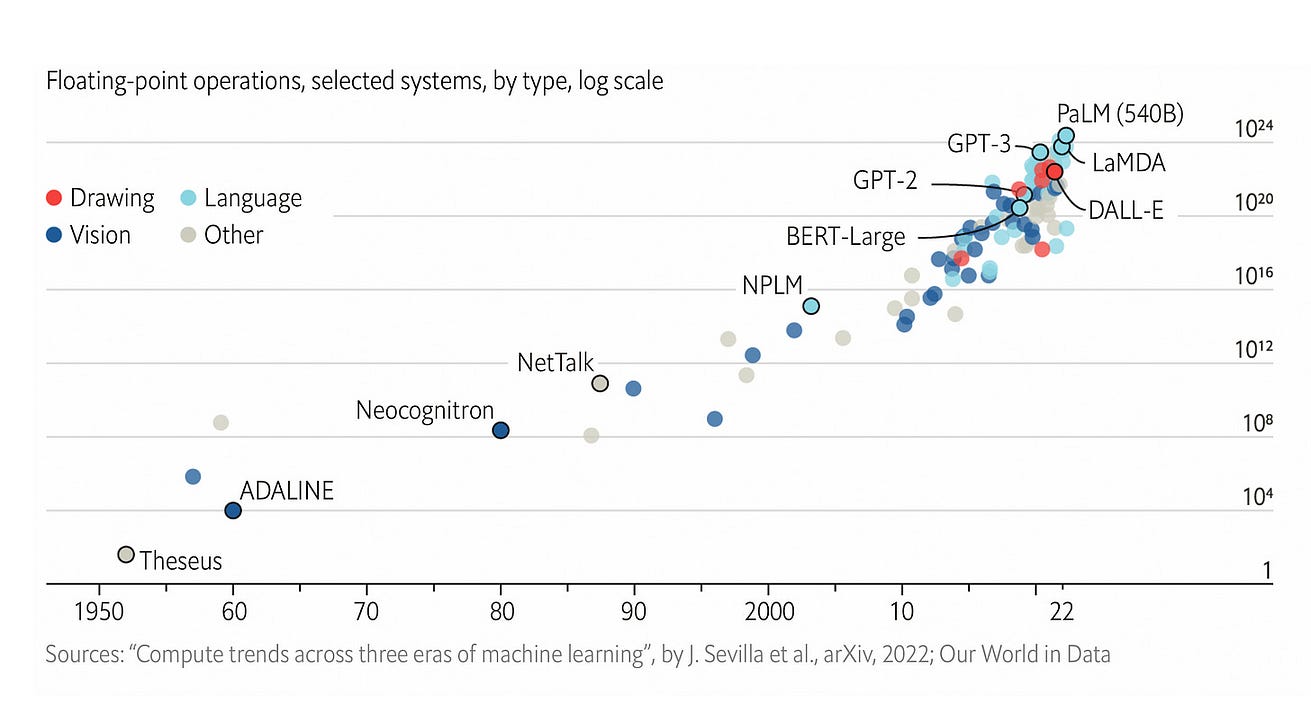

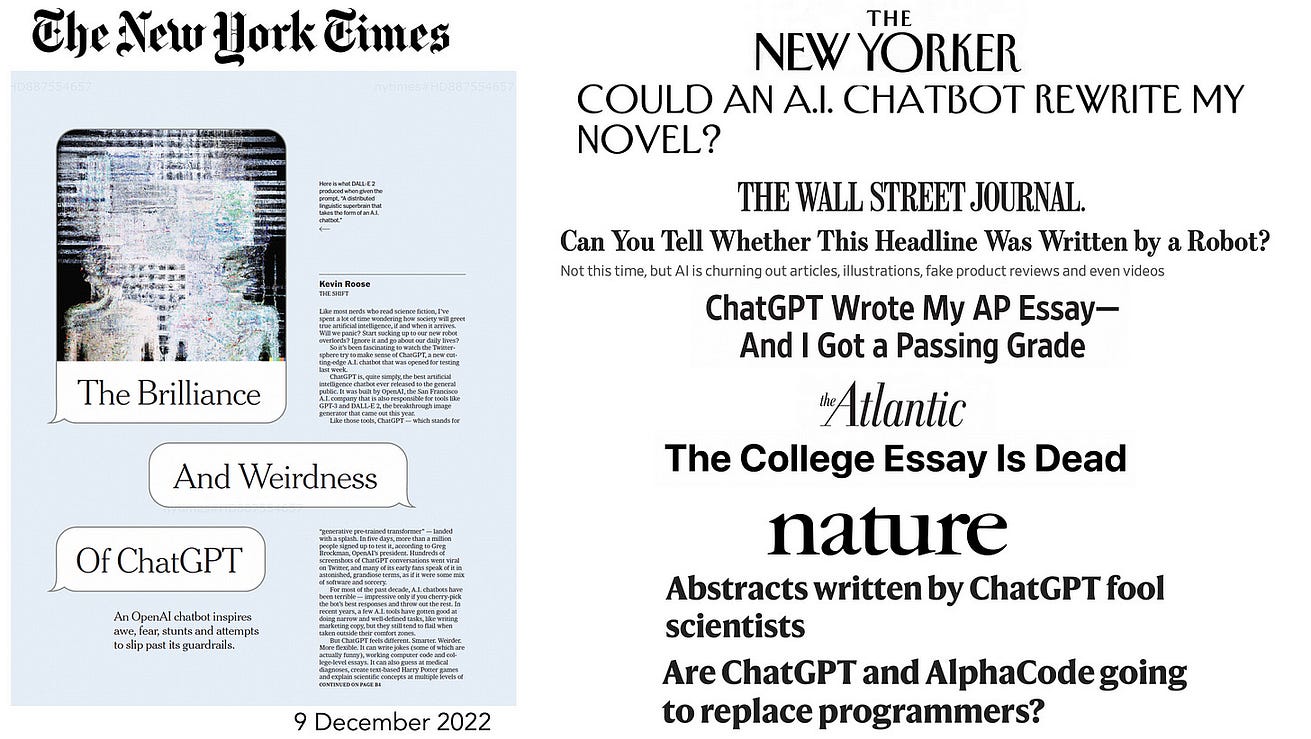

Now, four years later, the AI world has charged ahead with large language models (LLMs), dubbed “foundation models” by Stanford HAI’s Percy Liang and colleagues (a 219-page preprint, the longest I have ever seen), which include BERT, DALL-E, GPT-3, LaMDA and a blitz of others, and which are also known as generative AI.

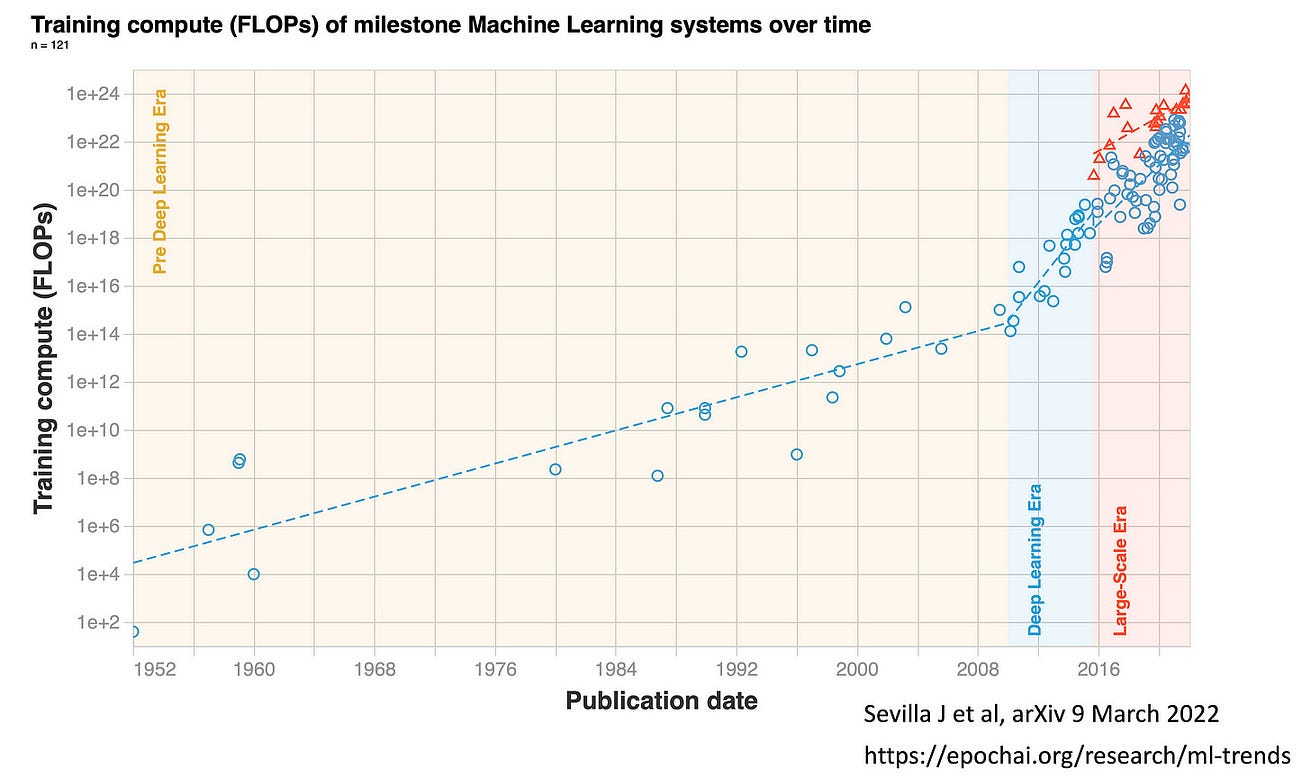

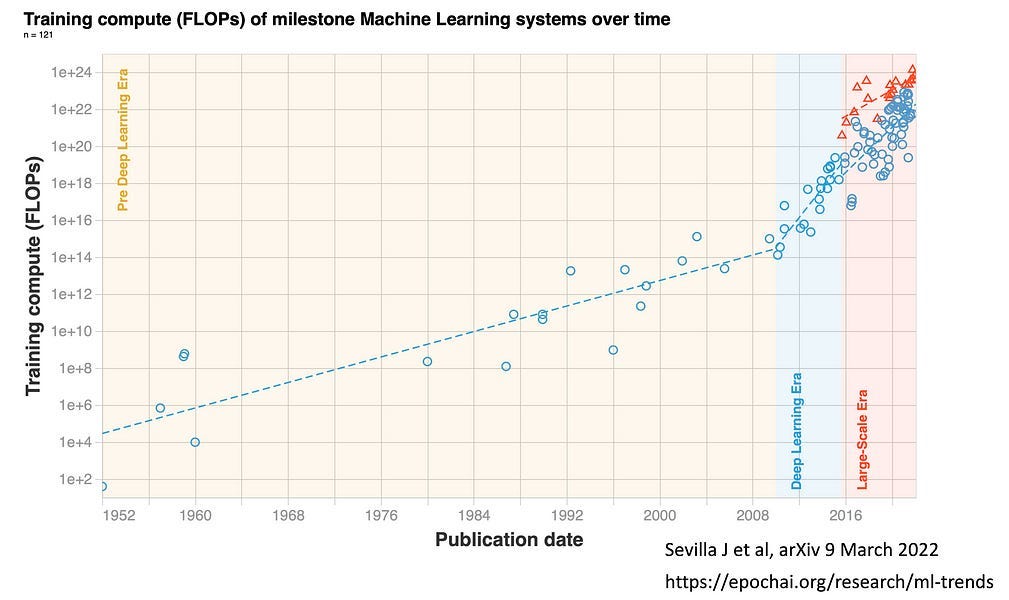

You can quickly get an appreciation of the “Large-Scale Era” of transformer models from the figure below by Jaime Sevilla and colleagues.

It got started around 2016, with unprecedented levels of training computation, as quantified by the total number of floating point operations (FLOPs).

Whereas Moore’s Law for nearly 6 decades was characterized by a doubling of computation every 18–24 months, training computation is now doubling every 6 months in the era of foundation models.
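
To get a feel for what that change in doubling time means, here is a minimal sketch of the arithmetic. The 6-year window is an arbitrary illustration, not a figure from the Sevilla paper, and the function name is made up for this example.

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """Multiplicative growth after `years` if the quantity doubles every `doubling_months`."""
    return 2.0 ** (years * 12.0 / doubling_months)


if __name__ == "__main__":
    horizon_years = 6  # illustrative window only (an assumption, not from the article)
    for label, months in [
        ("doubling every 24 months", 24),
        ("doubling every 18 months", 18),
        ("doubling every 6 months", 6),
    ]:
        factor = growth_factor(horizon_years, months)
        print(f"{label}: about {factor:,.0f}x over {horizon_years} years")
```

Over the same window, a 6-month doubling time compounds to thousands of times more computation, while an 18 to 24 month doubling time yields roughly a tenfold increase.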

As of 2022, the training computation used has culminated with Google’s PaLM with 2.5 billion petaFLOPs and Minerva with 2.7 billion petaFLOPs.

PaLM has 540 billion parameters, the coefficients applied to the different calculations within the model.

BERT, which was created in 2018, had “only” 110 million parameters, which gives you a sense of exponential growth, well seen in the log-plot below.

In 2023, there are models that are 10,000 times larger than BERT, with over a trillion parameters, and the British company Graphcore aspires to build one that runs more than 500 trillion parameters.
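
The scale figures quoted above can be checked with a few lines of integer arithmetic. This is only a sketch: it assumes the 10,000-fold comparison is made against BERT’s 110 million parameters, and the variable names are illustrative.

```python
PETA = 10**15  # one petaFLOP = 10^15 floating point operations

# Figures as quoted in the text
palm_training_flops = 2_500_000_000 * PETA  # 2.5 billion petaFLOPs
bert_params = 110_000_000                   # BERT (2018)
palm_params = 540_000_000_000               # PaLM (2022)

# How many times more parameters PaLM has than BERT
palm_vs_bert = palm_params / bert_params

# Parameter count of a model 10,000 times the size of BERT (assumed baseline)
ten_thousand_x_bert = bert_params * 10_000

if __name__ == "__main__":
    print(f"PaLM training compute: {palm_training_flops:.1e} FLOPs")
    print(f"PaLM vs BERT parameters: about {palm_vs_bert:,.0f}x")
    print(f"10,000 x BERT: {ten_thousand_x_bert:,} parameters")
```

On these numbers, PaLM is roughly 4,900 times the size of BERT, and 10,000 times BERT is 1.1 trillion parameters, which matches the “over a trillion” figure.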

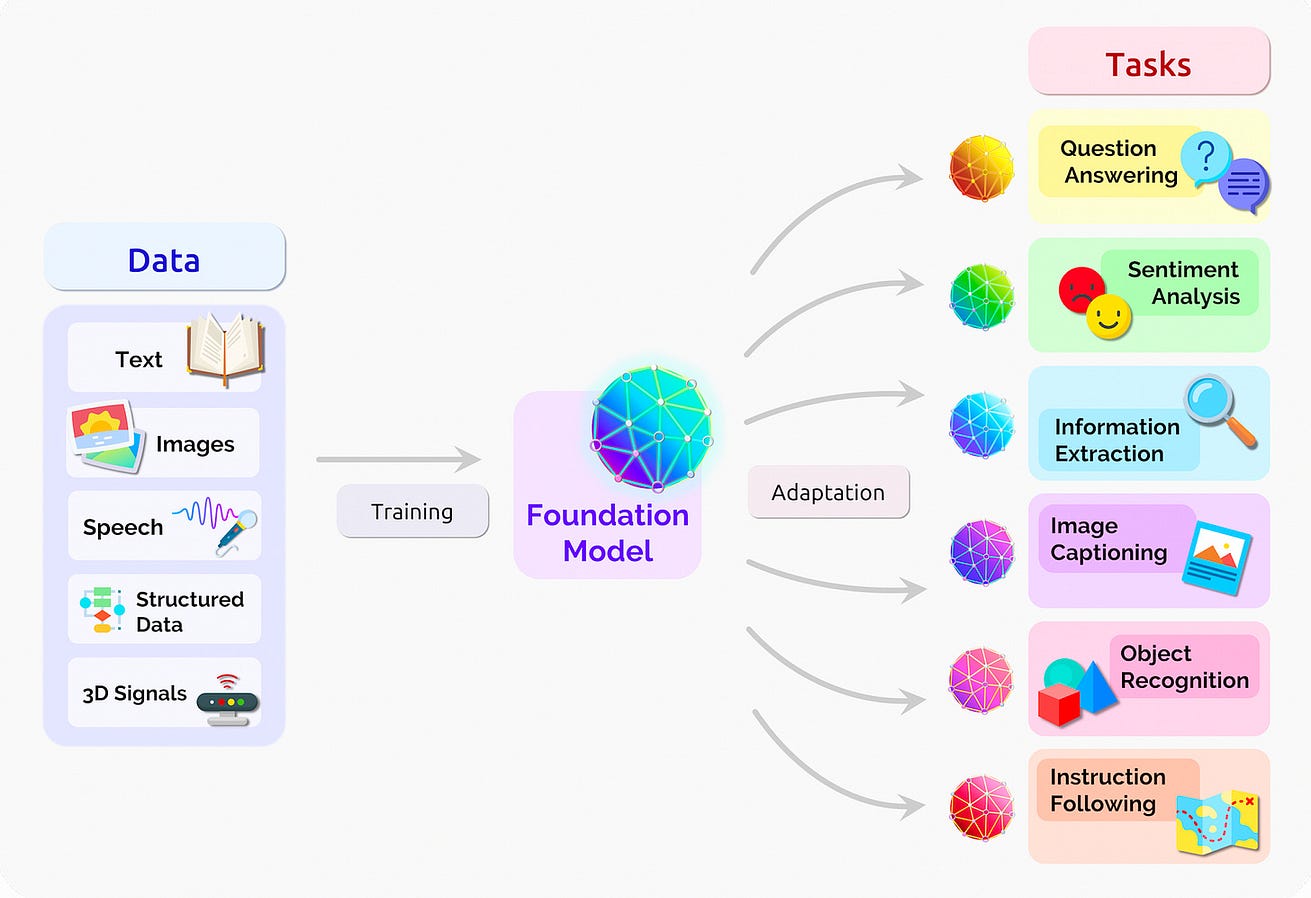

You’ve undoubtedly seen a plethora of articles in the media in recent months about these newfound capabilities of large language models: going from text to images and text to video, writing coherent essays, writing code, generating art and films, and many others, some of which I’ve tried to cull together below.

This provides a sense of seamless integration between different types and massive amounts of data.

You may recall the flap about the LaMDA foundation model developed at Google — an employee believed it was sentient.

Three Tests for Medicine

Before I get into the potential of foundation models in medicine, I want to review 2 rudimentary attempts to have a machine take medical qualifying exams, and then recap the recent Med-PaLM paper to appreciate the jump forward to medical foundation models.

1. In China, back in 2017, Tsinghua University and the company iFlyTek collaborated to build Xiaoyi (translates to “little doctor”), trained on 2 million medical records and 400,000 articles.

It achieved a score of 456 on the Chinese medical licensing exam, for which 360 to 600 is passing, by excelling at memorization and information recall but performing poorly on patient cases.

While it attracted media attention, there was (appropriately) no assertion that the AI was ready to take the place of doctors or formally be licensed.

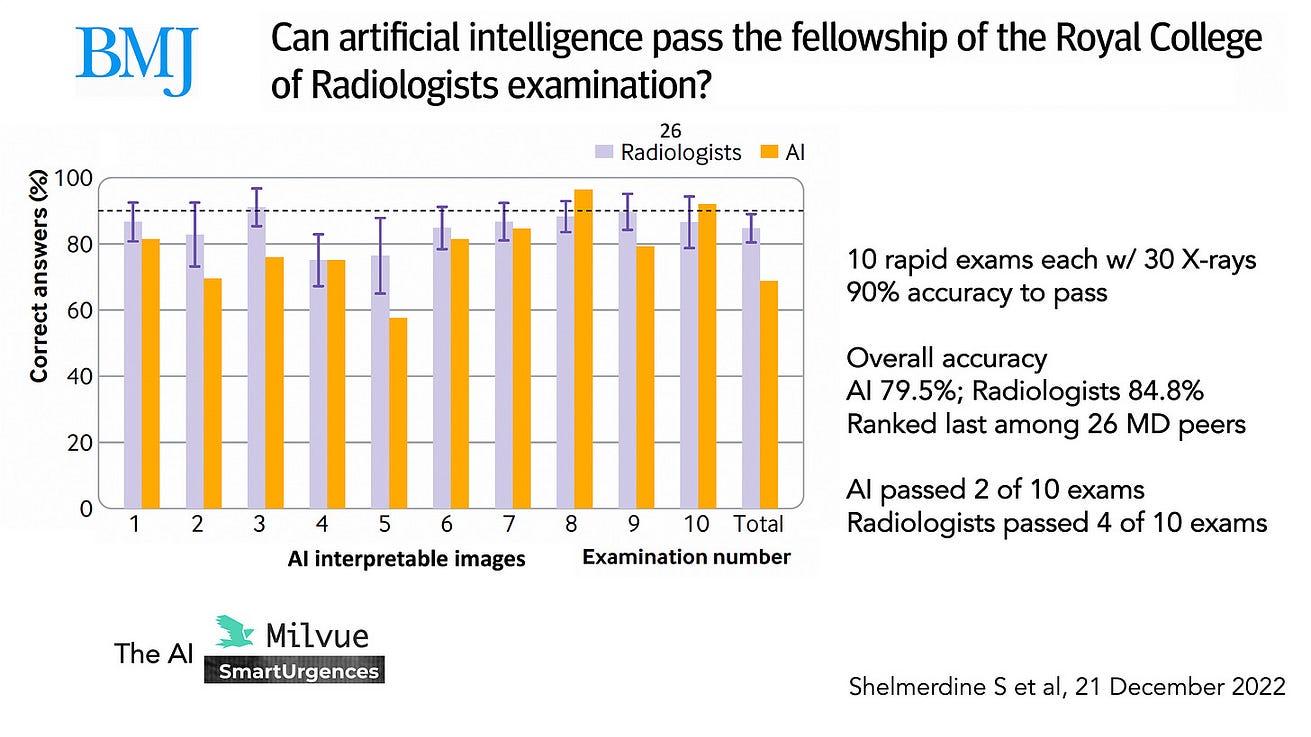

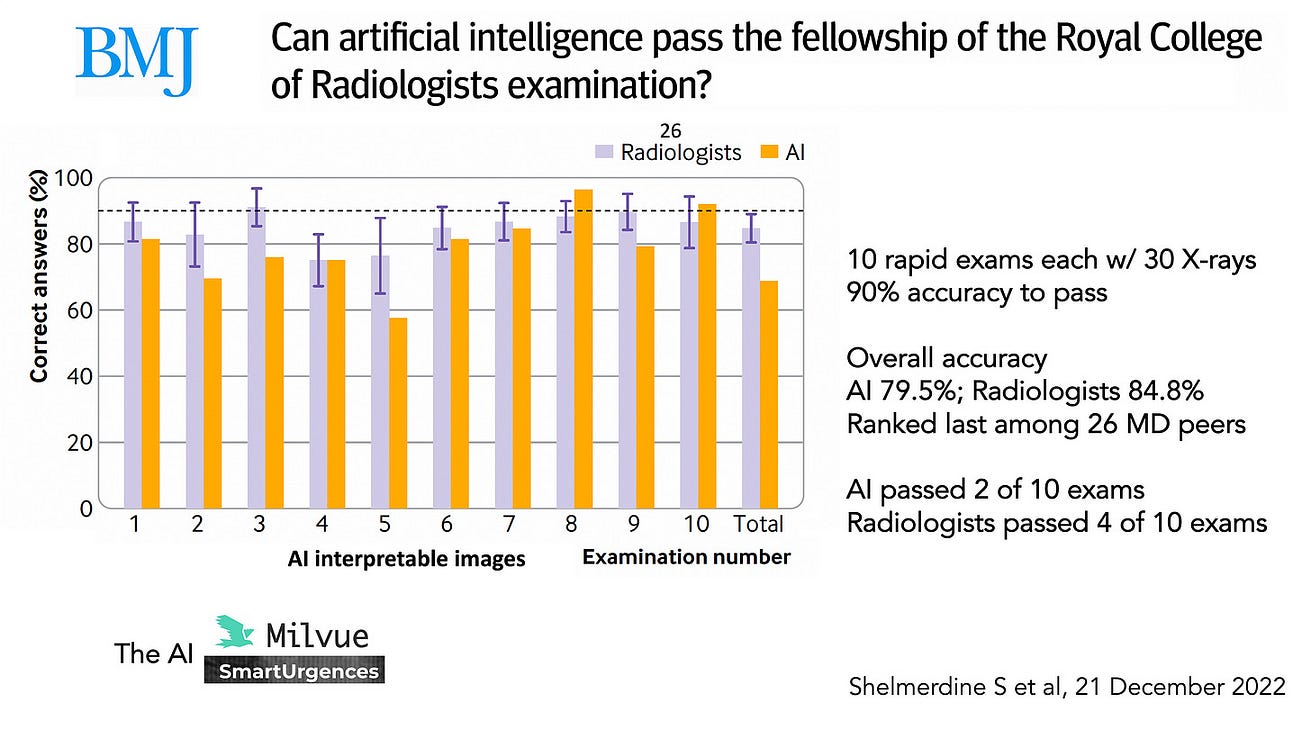

2. Very recently, an AI was put to the specific test of passing the UK Royal College of Radiologists examination and compared with 26 radiologists who had recently passed it. The test consists of 10 sets of 30 images, with only 35 minutes allowed to interpret each set.

- The results are summarized below for the images deemed AI-interpretable. The AI passed 2 of the exam sets, with passing defined as ≥ 90% accuracy (exam sets 8 and 10), and its overall accuracy was 79.5%, compared with 84.8% for the radiologists, who passed only 4 of the exam sets.

- There’s clearly room for improvement, but that’s not too bad a showing for the AI, a commercial product from the company Milvue.

- And that’s without the use of foundation models.

3. Next was a giant step for medical AI as far as testing its performance versus doctors.

That came last month with publication of the PaLM foundation model’s performance on United States Medical Licensing Examination (USMLE) questions; the model was also assessed on several other medical question-answering challenges, including consumer health questions.

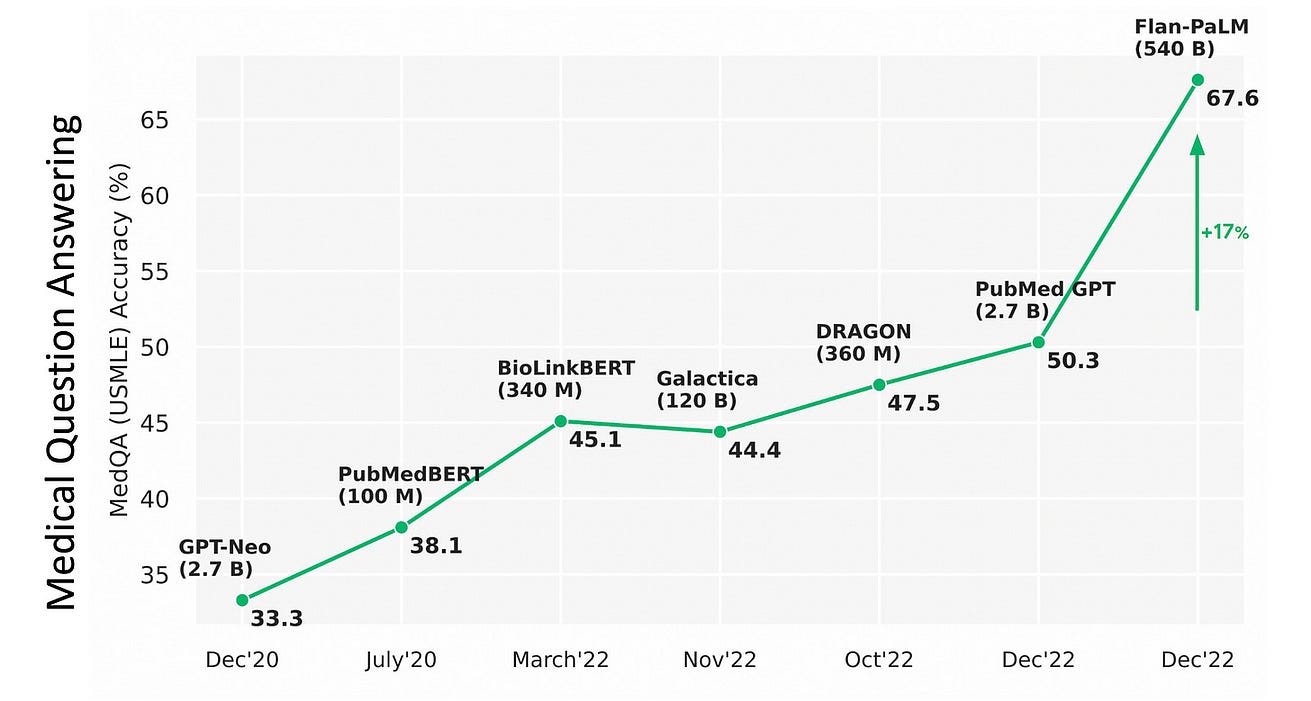

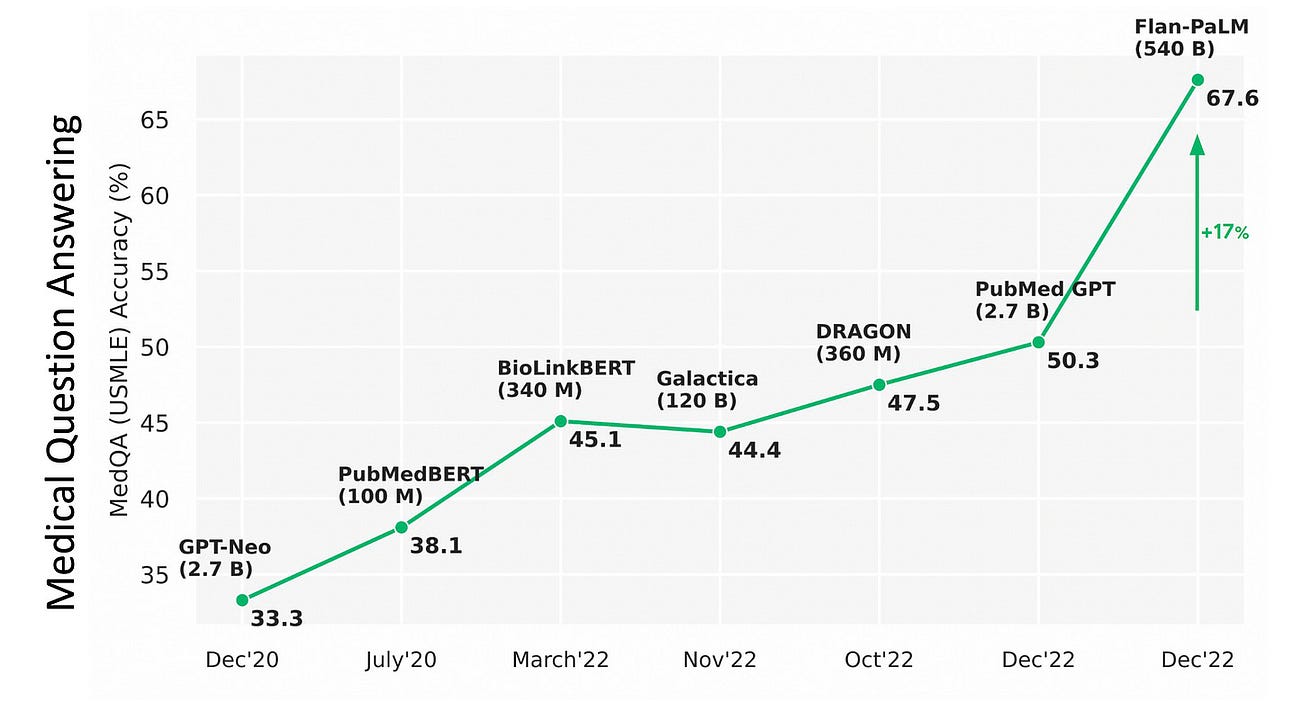

- From the above graphic, you can see PaLM catapulting from about 50% accuracy to 67.6%, an absolute jump of about 17 percentage points (a relative increase of roughly a third!).

- Importantly, near parity in medical question answering was demonstrated: doctors judged 92.6% of the Med-PaLM chatbot’s answers to be right, compared with 92.9% of the answers written by other doctors.

- Furthermore, for potential harm of the answers, there was only a small gap: the extent of possible harm was 5.9% for Med-PaLM and 5.7% for clinicians; the likelihood of possible harm was 2.3% and 1.3%, respectively.

- The authors from Google and DeepMind concluded: “the resulting model, Med-PaLM, performs encouragingly, but remains inferior to clinicians. We show that comprehension, recall of knowledge, and medical reasoning improve with model scale and instruction prompt tuning, suggesting the potential utility of LLMs in medicine.”

Of note, the Med-PaLM findings were also replicated via ChatGPT for all 3 exam parts of the USMLE.
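
The difference between an absolute and a relative jump in accuracy is easy to blur, so here is a minimal sketch of the calculation using the rounded 50% and 67.6% figures quoted above. The exact baseline reported in the paper may differ slightly from the rounded 50%, so treat the output as approximate; the function names are illustrative.

```python
def absolute_gain(before_pct: float, after_pct: float) -> float:
    """Gain in percentage points."""
    return after_pct - before_pct


def relative_gain(before_pct: float, after_pct: float) -> float:
    """Gain as a fraction of the starting accuracy."""
    return (after_pct - before_pct) / before_pct


if __name__ == "__main__":
    before, after = 50.0, 67.6  # rounded figures quoted in the text
    print(f"absolute gain: {absolute_gain(before, after):.1f} percentage points")
    print(f"relative gain: {relative_gain(before, after):.1%}")
```

With these rounded inputs the absolute gain is a little over 17 percentage points and the relative gain is about a third of the starting accuracy.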

The Power of Foundation Models in Medicine

Until now, deep learning in healthcare has almost exclusively been unimodal, particularly emphasizing its applicability to all different types of medical images, from X-rays, CT and MRI scans, and pathology slides to skin lesions, retinal photos, and electrocardiograms.

These deep neural networks for medicine have been based on supervised learning from large annotated datasets, solving one task at a time. Typically the results of a model are only valid locally, where the training and validation were performed. It all has a narrow look.

In contrast, foundation models are multimodal, based upon large amounts of unlabeled, diverse data with self-supervised and transfer learning.

(For an in-depth review of self-supervised learning, see our recent Nature BME paper). The limited pre-training requirement provides for adaptability, interactivity, expressivity, and creativity, as we’ve seen with ChatGPT, DALL-E, Stable Diffusion and many models outside of healthcare domains. These models are characterized by in-context learning: the ability to perform tasks for which they were never explicitly trained.
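
In practice, in-context learning usually means putting a handful of worked examples into the prompt itself rather than retraining the model. The sketch below only assembles such a few-shot prompt as plain text; it does not call any model, and the instruction, example sentences and labels are invented purely for illustration.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str, examples: List[Tuple[str, str]], query: str
) -> str:
    """Assemble an instruction, worked examples, and a new query into one prompt."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    # The final item is left unanswered for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical examples, not taken from any real dataset
    demo_examples = [
        ("Patient reports chest pain on exertion.", "symptom"),
        ("Started metformin 500 mg twice daily.", "medication"),
    ]
    prompt = build_few_shot_prompt(
        "Label each sentence as 'symptom' or 'medication'.",
        demo_examples,
        "Complains of shortness of breath at night.",
    )
    print(prompt)
```

The model sees the two labeled sentences and the unlabeled third one in a single input, and is expected to continue the pattern even though it was never trained on this specific labeling task.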

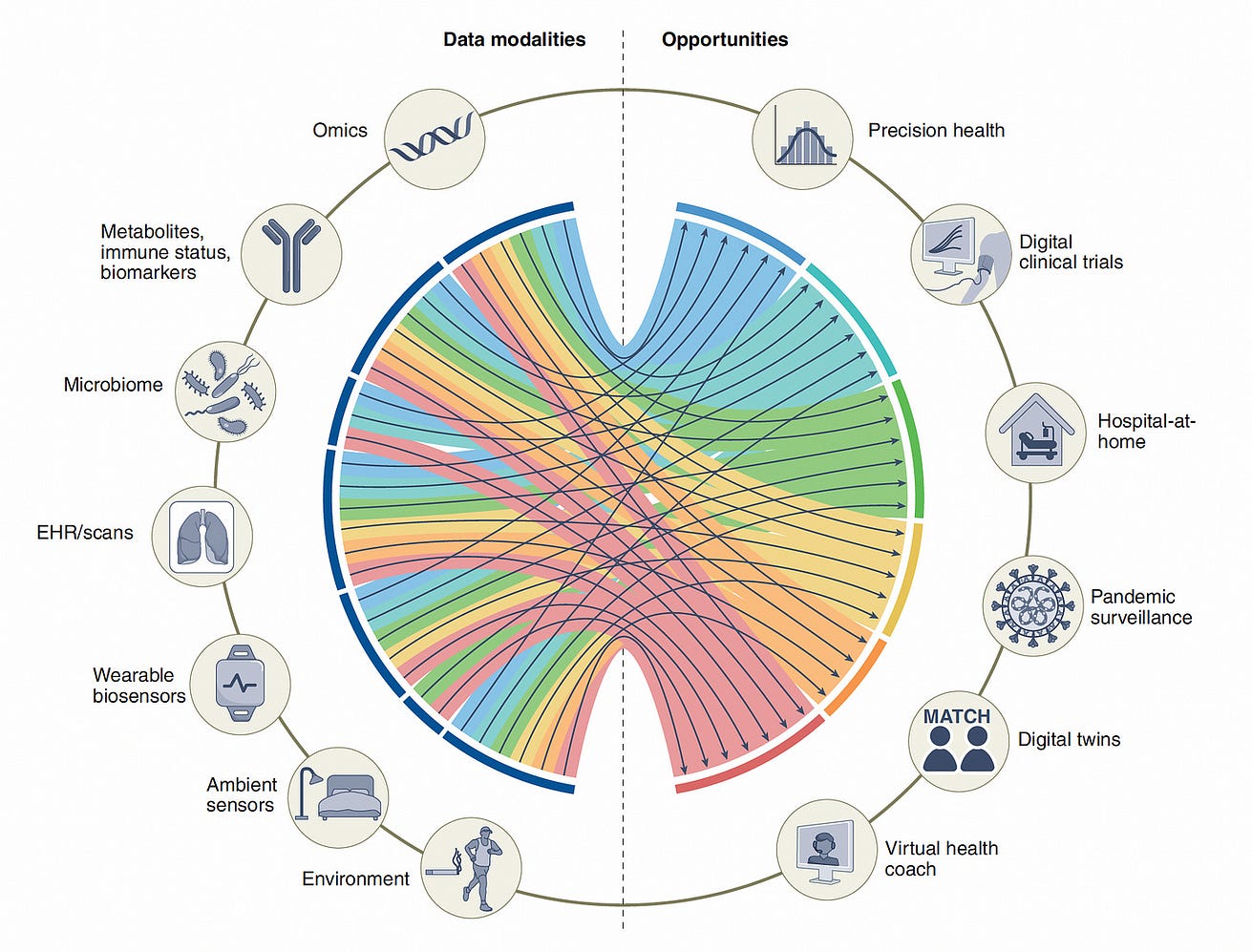

Accordingly, going forward, foundation models for medicine provide the potential for a diverse integration of medical data that includes electronic health records, images, lab values, biologic layers such as the genome and gut microbiome, and social determinants of health.

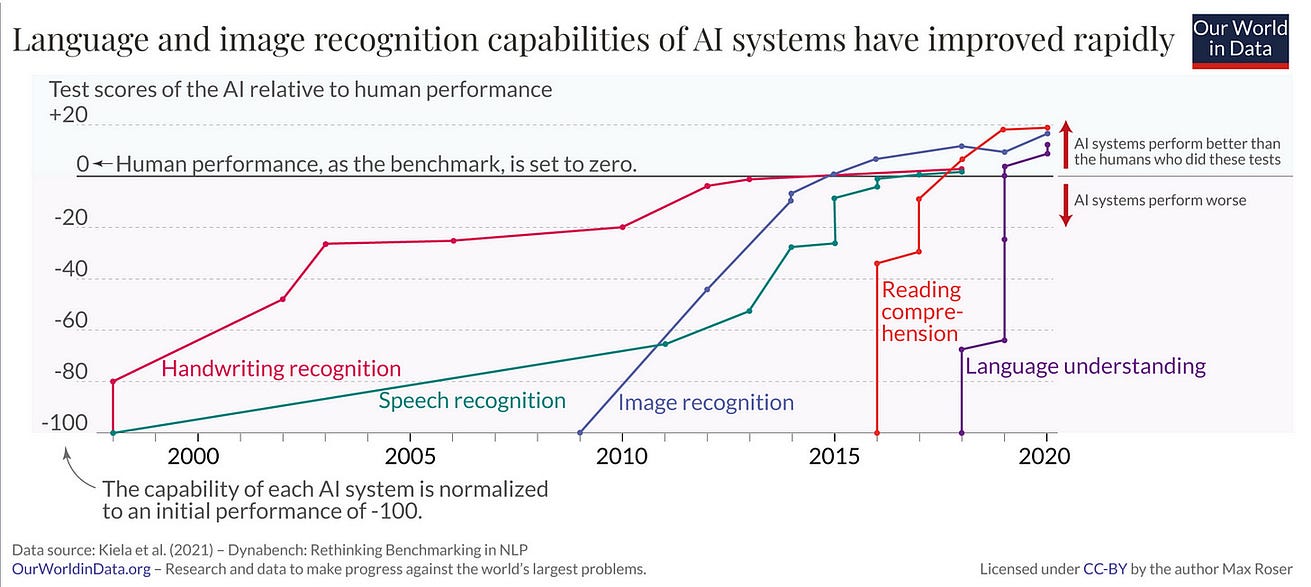

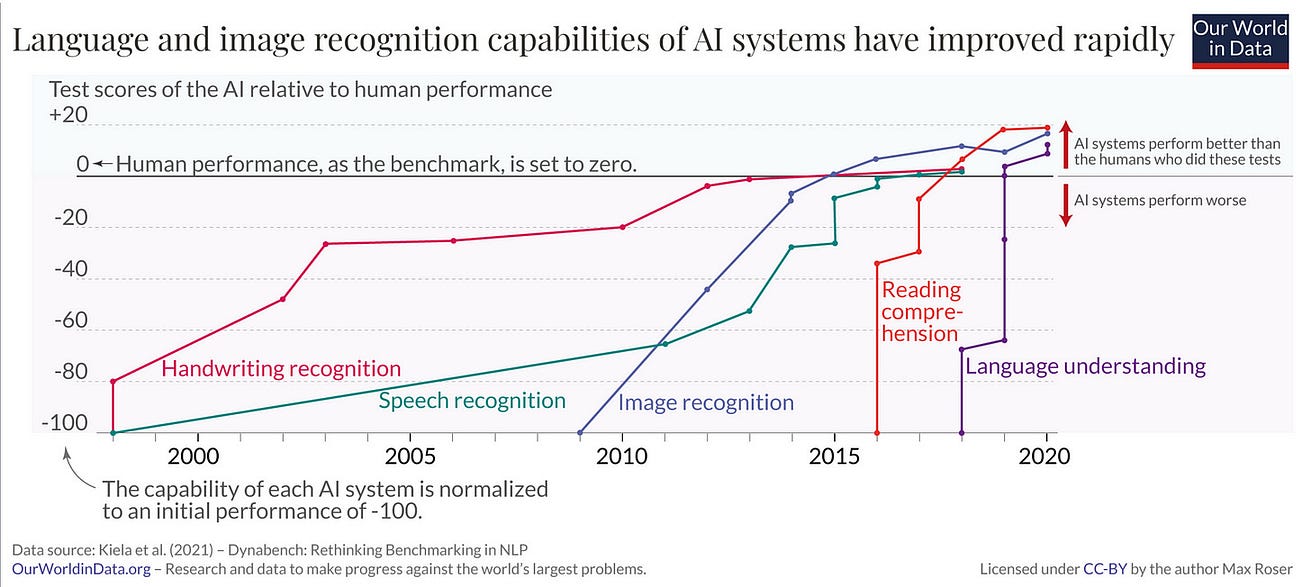

This represents the culmination of increased AI performance (relative to human) across multiple domains as depicted below, with particular impact of language understanding that has come as a result of foundation models.

It’s important for me to contextualize the term “language understanding” since it is not as humanoid as one would think.

LLMs find patterns in text; they really don’t connect words to meaning, which can result in off-base outputs, fabrication, and gross misunderstandings.

Recently, the University of Florida used its massive computing resources to develop GatorTron, an LLM built from scratch with 8.9 billion parameters and >90 billion words of text from electronic health records, to improve 5 clinical natural language processing tasks, including medical question answering and medical relation extraction.

While much smaller than Med-PaLM’s model, this is one of the first medical foundation models to be developed by an academic health system rather than by large tech companies like Google, OpenAI, or Meta.

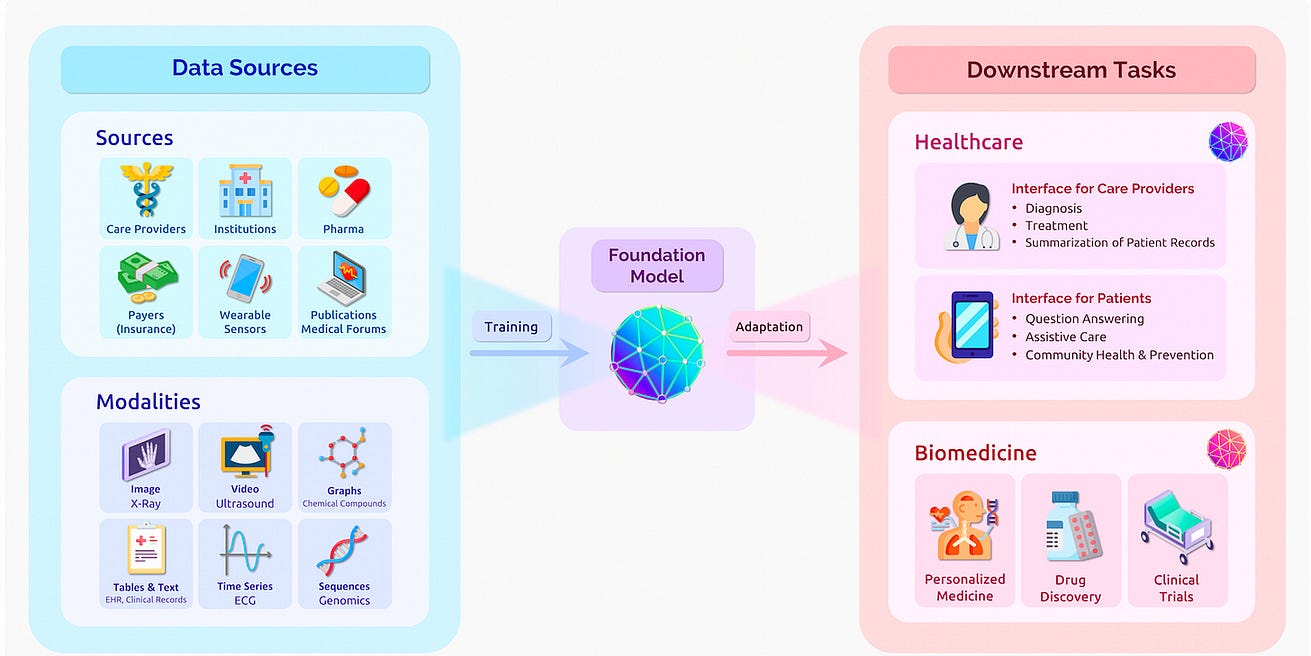

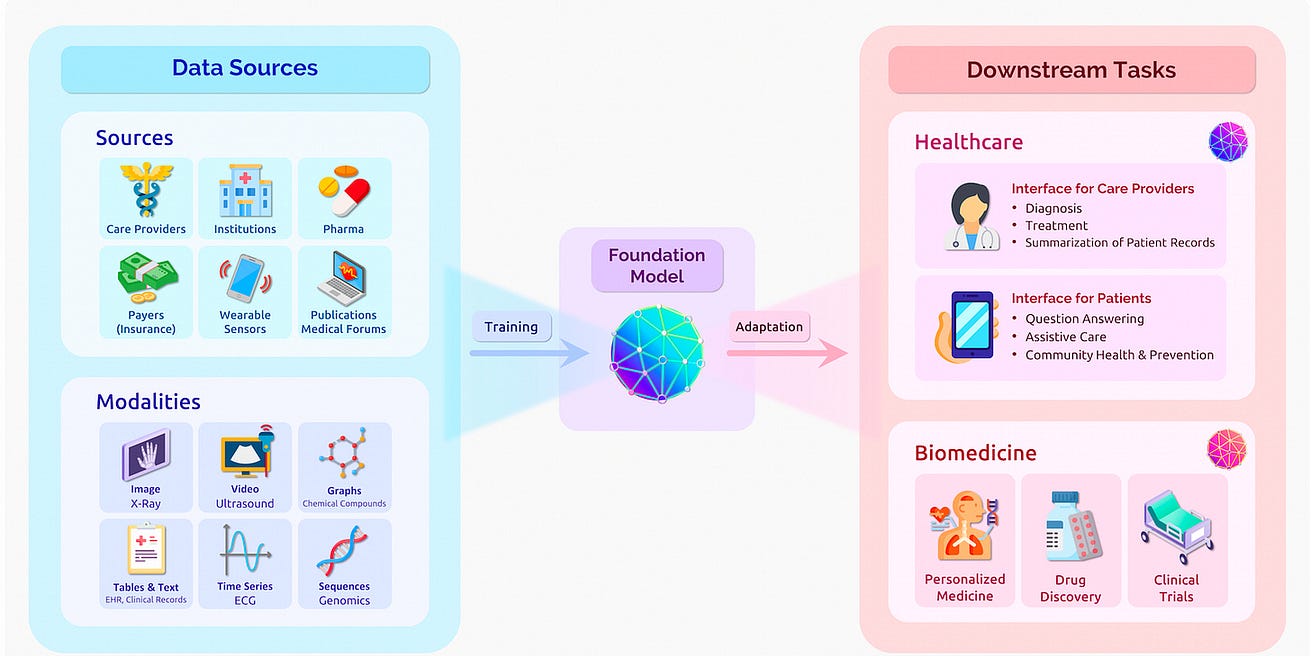

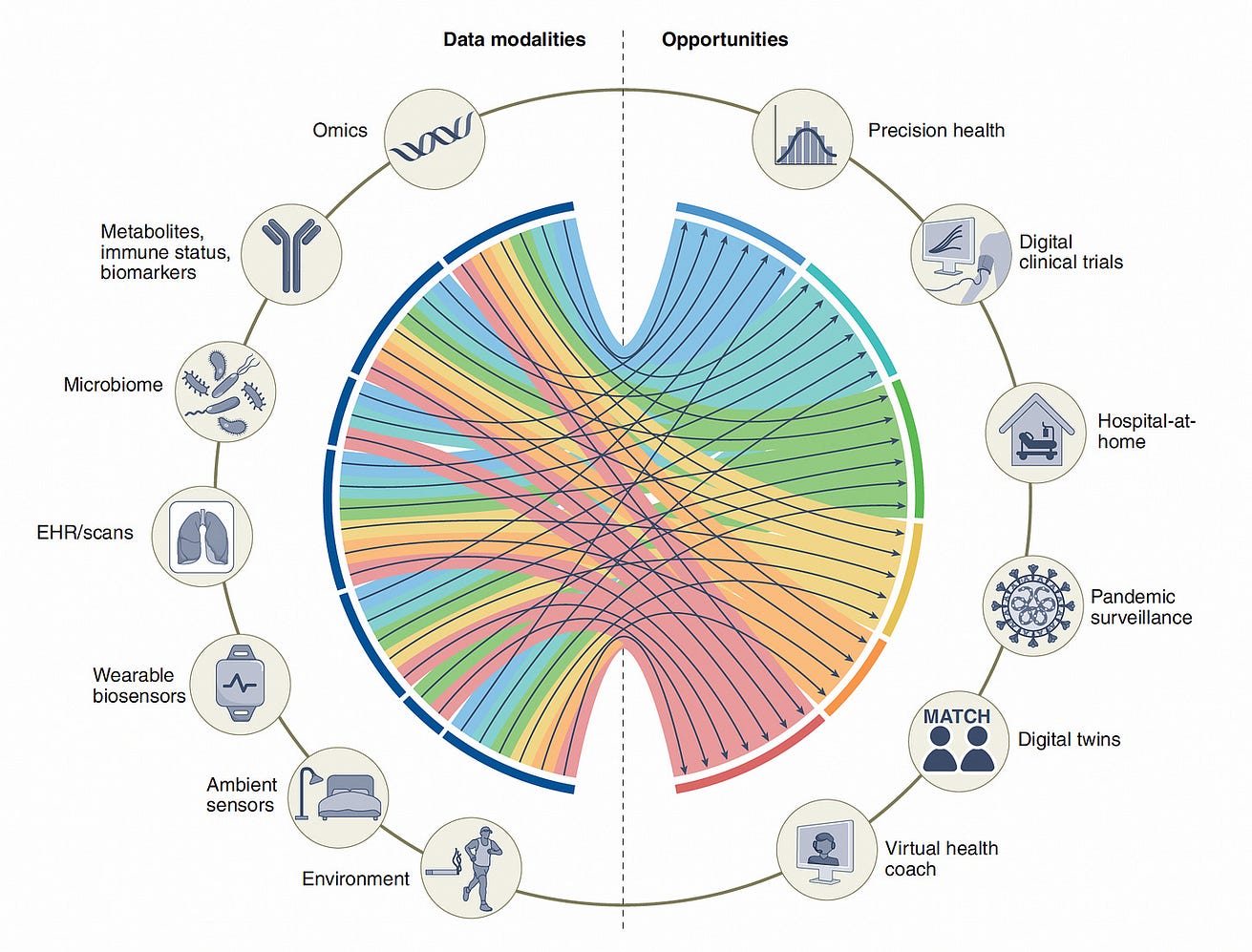

That’s just the beginning (only focused on EHRs), as shown below by the path from multiple integrated data sources to major downstream tasks at the clinician and patient level.

We really haven’t had a pragmatic way to proceed with multimodal medical AI, as we recently reviewed in Nature Medicine.

Now, with foundation models, there’s a path forward to go in many directions as we graphed below at right (from data sources on the left side of the diagram).

I should point out that it’s not exactly a clear or rapid path because there is a paucity of large or even massive medical datasets, and the computing power required to run these models is expensive and not widely available.

But the opportunity to get to machine-powered, advanced medical reasoning skills that would come in handy (an understatement) with so many tasks in medical research (above Figure) and patient care, such as

- generating high-quality reports and notes,

- providing clinical decision support for doctors or patients,

- synthesizing all of a patient’s data from multiple sources,

- dealing with payors for pre-authorization, and

- so many routine and often burdensome tasks,

is more than alluring.

While LLMs are still evolving, the hype surrounding them is extraordinary.

Recently, OpenAI, the startup with its ChatGPT (a refinement of GPT-3 and GPT-3.5, built initially in 2020, with 175 billion parameters using >400 billion words), has a potential valuation of $29 billion, and Microsoft plans to follow on its initial $1 billion investment with a $10 billion investment.

And we know that OpenAI is going to release GPT-4 soon, which will likely transcend the computing benchmarks of the current foundation models.

For balance, however, there’s this quote from Phil Libin, the CEO of Evernote: “All of these models are about to shit all over their own training data. We’re about to be flooded with a tsunami of bullshit.”

A Foundation for Medicine’s Future

It’s very early for LLMs/generative AI/foundation models in medicine, but I hope you can see from this overview that there has been substantial progress in answering medical questions: AI is starting to pass tests at a level approaching that of doctors, and it’s no longer just about image interpretation, but starting to incorporate medical reasoning skills.

That doesn’t have anything to do with licensing machines to practice medicine, but it’s a reflection that a force is in the works to help clinicians and patients process their multimodal health data for various purposes.

The key concept here is augment; I can’t emphasize enough that machine doctors won’t replace clinicians. Ironically, it’s about technology enhancing the quintessential humanity in medicine.

That gets us back to the premise of Deep Medicine: AI has vast transformative potential to improve accuracy and precision of medicine, provide more autonomy for patients, and ultimately achieve the far-reaching goal of restoring the patient-doctor relationship that has eroded over many decades.

Undoubtedly, over the years ahead, these models, combining massive data and computing power, deep learning on steroids, will find their way to the multitude of medical tasks.

I’m optimistic this new era of AI can accelerate the progress that is desperately needed. Stay tuned!

Originally published at https://erictopol.substack.com on January 15, 2023.