Based on an eight-month review of 230,865 articles from 43 different news sources.

This is an excerpt from the publication above. For the complete version, see the original publication.

RAND

by Christian Johnson, William Marcellino

2021

COVID-19 offered authoritarian regimes, such as China and Russia, an opportunity to manipulate news media to serve state ends.

Researchers conducted a scalable proof-of-concept study for detecting state-level news manipulation.

Using a scalable infrastructure for harvesting global news media, and using machine-learning and data analysis workflows, the research team found that both Russia and China appear to have employed information manipulation during the COVID-19 pandemic in service to their respective global agendas.

This report, the second in a series, describes these efforts, as well as the analytic workflows employed for detecting and documenting state-actor malign and subversive information efforts.

This work is a potential blueprint for a detective capability against state-level information manipulation at the global scale, using existing, off-the-shelf technologies and methods.

This report is part of RAND’s Countering Truth Decay Initiative, which considers the diminishing role of facts and analysis in political and civil discourse and the policymaking process.

Research Questions

- Did authoritarian regimes engage in news manipulation during the pandemic?

- How can such manipulation be brought to light?

Key Findings

During the COVID-19 pandemic, both Russia and China engaged in news manipulation that served their political goals

- Russian media advanced anti-U.S. conspiracy theories about the virus.

- Chinese media advanced pro-China news that laundered China's reputation regarding its COVID-19 response. Russian media also supported this laundering effort.

- Russia and China directly threatened global health and well-being by using authoritarian power over the media to oppose public health measures.

An enduring collection capability would enable real-time detection and analysis of state-actor propaganda

- Existing natural language processing methods can be combined to make sense of news reporting by nation, at global scale.

- A public system for monitoring global news that detects and describes global news themes by nation is plausible. Such a system might help guard against Truth Decay efforts from malicious state actors.

Recommendation

- Disinformation analyses should be performed at the state-actor level.

- Given the potential harm from such bad actors as Russia and China, and given the low level of investment needed to build a robust system for news manipulation detection, the U.S. government should support the establishment of such a system.

ORIGINAL PUBLICATION (excerpt)

The global spread of the coronavirus disease 2019 (COVID-19) created fertile ground for attempts to influence and destabilize different populations and countries. In response to this, RAND Corporation researchers conducted a proof-of-concept study for detecting these efforts at scale. Marrying a large-scale collection pipeline for global news with machine-learning and data analysis workflows, the RAND team found that both Russia and China appear to have employed information manipulation during the COVID-19 pandemic in service to their respective global agendas. This report is the second in a series of two reports; the first (Matthews, Migacheva, and Brown, 2021) focused on qualitative and descriptive analysis of the same data referred to in this report. Here, we describe our analytic workflows for detecting and documenting state-sponsored malign and subversive information efforts, and we report quantitative results that support the qualitative findings from the first report.

Introduction

As part of our analysis, we searched for both differences and similarities in the topics discussed by Russian, Chinese, and Western news media, and we found that conspiracy theories and geopolitical posturing were relatively common in Russian and Chinese news articles compared with Western (U.S. and UK) articles. The work we describe here lays the foundation for a robust protective capability that detects and sheds light on state-actor information manipulation and misconduct in the global arena.

Disinformation, Propaganda, and Truth Decay

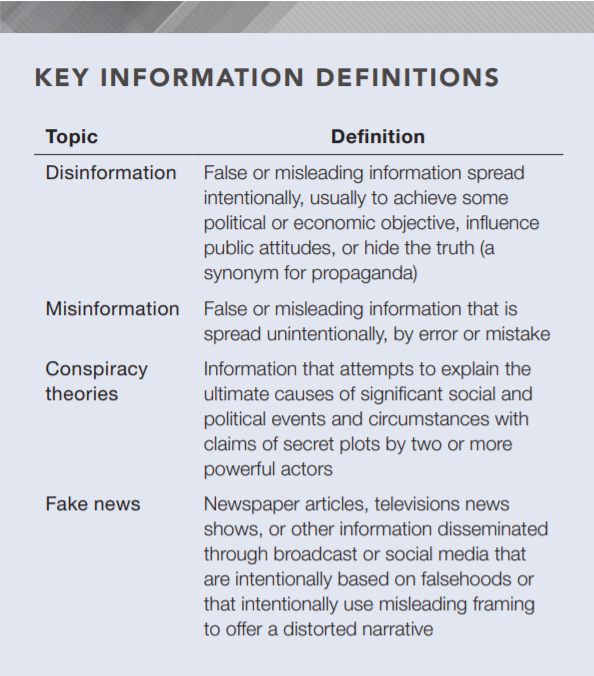

The world is experiencing a crisis related to disagreements over established truth, a phenomenon that RAND refers to as Truth Decay: a shift in public discourse away from facts and analysis that is characterized by four interrelated trends (Rich and Kavanagh, 2018):

1. an increasing disagreement about facts and analytical interpretations of facts and data

2. a blurring of the line between opinion and fact

3. an increasing relative volume, and resulting influence, of opinion and personal experience over fact

4. a declining trust in formerly respected sources of factual information.

Truth Decay is a serious threat to both domestic U.S. and international security, and malign efforts from a variety of national bad actors are exacerbating it. These ill-intentioned efforts to misuse information go by many labels, and readers might have seen them called disinformation, misinformation, fake news, or information operations. For clarity and consistency's sake, we use the definitions from Rich and Kavanagh, 2018, in the remainder of this paper. Our definition of conspiracy theories comes from Douglas et al., 2019 (see the Key Information Definitions box).

News Manipulation from Both China and Russia

See the original publication

Methodology (excerpt)

Identifying disinformation in a large, complex data set is not a simple task. The word disinformation is a catchall term used to refer to an array of different phenomena — from “fake news,” to opinion pieces masquerading as journalism, to legitimate news stories that heap inordinate attention on certain topics (while ignoring others). As described in the definitions box, disinformation is used to refer to the deliberate spreading of misleading or incorrect information; misinformation refers to honest but incorrect knowledge. However, the line between the two can sometimes be blurred; prior RAND work (Marcellino, Johnson, et al., 2020) showed that coordinated bot activity was likely used deliberately in the run-up to the 2020 U.S. presidential election to amplify authentic tweets and make them appear more popular than they really were (commonly called astroturfing) in an attempt to create a false impression of grassroots spread. Our goal, therefore, was not to detect disinformation per se, but to identify when and how Russian and Chinese news media appear to be manipulated by forces outside the normal news cycle and editorial processes. Because our data set featured many articles from a variety of U.S. and UK media, we make the key assumption that newsworthy stories will be covered by these Western outlets; instances in which Russian and Chinese media cover stories that are qualitatively different from those covered by Western media are worthy of more scrutiny to determine whether they could be part of a disinformation campaign.

Researchers have previously used computational techniques to study the dissemination of fake news, particularly on Twitter. Grinberg et al., 2019, demonstrated that fake news in the lead-up to the 2016 U.S. presidential election was seen and shared primarily by a relatively small number of Twitter users, consisting mainly of highly conservative and cyborg accounts. Using a similar methodology, Lazer et al., 2020, found that the same conclusions essentially held true for the spread of fake news related to COVID-19. Marcellino, Johnson, et al., 2020, used a different methodology to determine that botlike accounts likely played a significant role in spreading far-right conspiracy theories and disinformation leading up to the 2020 election. In short, the available research suggests that a relatively small number of malign users spread much of the disinformation on social media.

These studies have mostly examined metadata and derived features to draw their conclusions instead of studying the language of disinformation itself. This paper builds on existing research to study not only metadata about news, but the actual content of the news itself. We hoped that understanding the topical themes being spread via disinformation would lead to new insights that cannot be seen simply by looking at user engagement on social networks, such as Twitter.

The first report in this series identified several key markers of disinformation in Russian and Chinese news: conspiracy theories, geopolitical posturing, and anti-U.S. messaging. Although we hoped that a data-driven approach would replicate these findings, we sought to perform our analysis as blindly as possible; that is, we did not seek to confirm our suspicions and simply search the data to find conspiracy theories. Instead, we used algorithms to detect the dominant themes in the data and only then analyzed these themes to determine their content.

Our overall strategy, as mentioned earlier, rested on the idea that any disinformation published by Russian and Chinese news sources would be detectable because its content would differ meaningfully from the content in U.S. and UK news articles. Certainly, some differences in content are to be expected under a no-manipulation hypothesis. For example, Russian news sources might be more likely than U.S. news sources to cover stories about Eastern Europe, simply because of geographical proximity. However, we hypothesized that by inspecting these differences closely, we would be able to uncover patterns associated with manipulation. Ultimately, any differences between Western and non-Western news articles would also require human analysis to determine whether the differences were innocuous or malign.
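The Python sketch below makes the comparative strategy concrete. It shows one way to surface vocabulary that a target corpus (for example, Russian news) uses much more often than a Western baseline. This is not the RAND team's method, which relied on topic modeling. It substitutes a simple smoothed log-ratio of term frequencies and assumes lowercase whitespace tokenization. The function names and the `alpha` smoothing parameter are hypothetical.

```python
import math
from collections import Counter


def term_counts(docs):
    """Count lowercase, whitespace-delimited tokens across a list of documents."""
    counts = Counter()
    for doc in docs:
        counts.update(doc.lower().split())
    return counts


def log_ratio(target_docs, baseline_docs, alpha=1.0):
    """Return {term: log(p_target / p_baseline)} using add-alpha smoothing.

    Positive scores mark terms over-represented in the target corpus relative
    to the baseline. They are candidates for human review, not proof of
    manipulation.
    """
    t = term_counts(target_docs)
    b = term_counts(baseline_docs)
    vocab = set(t) | set(b)
    # Smoothing keeps terms absent from one corpus from producing log(0).
    t_total = sum(t.values()) + alpha * len(vocab)
    b_total = sum(b.values()) + alpha * len(vocab)
    return {
        w: math.log((t[w] + alpha) / t_total) - math.log((b[w] + alpha) / b_total)
        for w in vocab
    }


def top_divergent(target_docs, baseline_docs, k=5, alpha=1.0):
    """Return the k most over-represented target terms (ties broken alphabetically)."""
    scores = log_ratio(target_docs, baseline_docs, alpha)
    return sorted(scores, key=lambda w: (-scores[w], w))[:k]


if __name__ == "__main__":
    target = ["virus hoax lab", "lab origin hoax"]
    baseline = ["virus cases rise", "cases deaths virus"]
    print(top_divergent(target, baseline, k=2))  # ['hoax', 'lab']
```

A high score only shows where coverage differs. As the report stresses, human analysts still have to decide whether each difference is innocuous (such as regional proximity) or malign.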

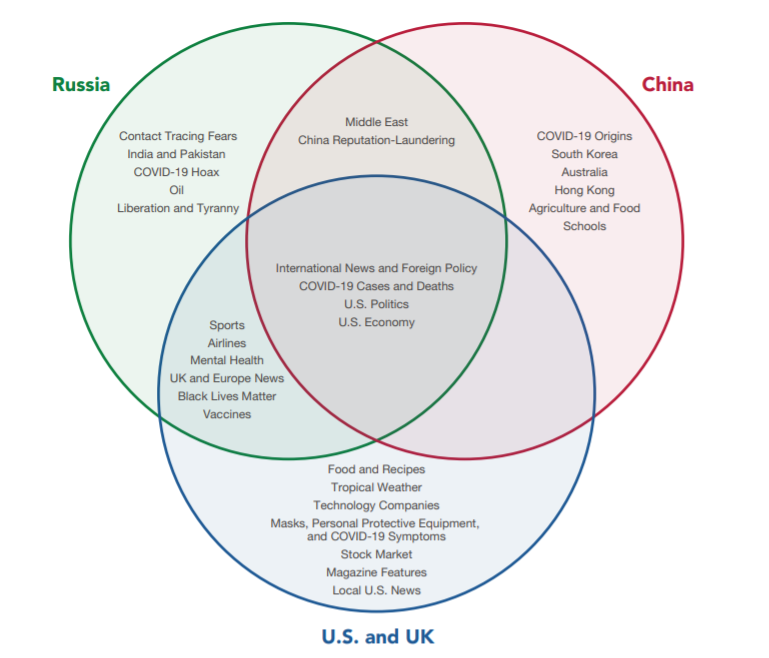

COVID-19 Topics

We performed latent Dirichlet allocation (LDA) topic modeling separately for each of our three document groups: Russian news, Chinese news, and U.S./UK news. With the major topics for each group in hand, we cross-referenced the learned topics across countries to uncover major patterns. We did this manually: With only 20 or so topics for each data set, it was straightforward to identify the topics that overlapped significantly with one another. Future iterations of this pipeline could automate this step by computing an appropriate distance measure between the LDA word scores for each topic across our three data sets. Figure 2 displays the main results of our LDA modeling. Some topics were durable across the three groups: COVID-19 Cases and Deaths, for example, was a topic that each LDA model found independently in the different data sets. Example headlines from this topic (and all others) are shown in Table 1. Several topics were shared by only two of the three document groups. For example, news about airlines was found in both Russian and U.S./UK news but not in Chinese news. We separated topics shared across all three groups (or by Western and Russian news) from those unique to Russia or China or shared only by those two, because we felt the latter category deserved a closer look. Several of these topics appeared to be either promoting conspiracy theories or performing reputation-laundering, especially for China. The most suspicious of these topics are Contact Tracing Fears, COVID-19 Hoax, Liberation and Tyranny, and China Reputation-Laundering (and COVID-19 Origins). Here, we describe each of these topics and a few example headlines from each.
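The authors suggest automating the cross-group topic matching by computing a distance between topic word scores. The sketch below shows roughly how that could work, under several assumptions:

- Each topic is supplied as a normalized word-probability dictionary, for example an LDA model's topic-word probabilities.
- The distance measure is Jensen-Shannon distance. This is our choice; the report does not name one.
- Topics closer than a hypothetical `threshold` count as the same topic.

The group labels `west`, `russia`, and `china` and all function names are illustrative.

```python
import math


def js_distance(p, q):
    """Jensen-Shannon distance (log base 2, range 0..1) between word distributions.

    p and q map words to probabilities; a word missing from one side counts as 0.
    """
    vocab = set(p) | set(q)
    m = {w: 0.5 * (p.get(w, 0.0) + q.get(w, 0.0)) for w in vocab}

    def kl_to_m(a):
        # KL(a || m); words with zero probability in a contribute nothing.
        return sum(a[w] * math.log2(a[w] / m[w]) for w in a if a[w] > 0)

    divergence = 0.5 * kl_to_m(p) + 0.5 * kl_to_m(q)
    return math.sqrt(max(0.0, divergence))  # clamp tiny negative rounding error


def match_topics(groups, threshold=0.5):
    """Map each (group, topic) to the set of groups holding a close-enough topic.

    groups: {group_name: {topic_label: {word: probability}}}
    """
    shared = {}
    for g, topics in groups.items():
        for label, dist in topics.items():
            present = {g}
            for h, other_topics in groups.items():
                if h != g and any(
                    js_distance(dist, other) <= threshold
                    for other in other_topics.values()
                ):
                    present.add(h)
            shared[(g, label)] = frozenset(present)
    return shared


def flag_for_review(shared, baseline="west"):
    """Return (group, topic) pairs that the baseline group does not share, sorted."""
    return sorted(key for key, present in shared.items() if baseline not in present)


if __name__ == "__main__":
    groups = {
        "west": {"cases": {"cases": 0.5, "deaths": 0.5}},
        "russia": {"cases": {"cases": 0.6, "deaths": 0.4},
                   "hoax": {"hoax": 0.8, "plandemic": 0.2}},
        "china": {"cases": {"cases": 0.5, "deaths": 0.5},
                  "hoax": {"hoax": 0.8, "elites": 0.2}},
    }
    print(flag_for_review(match_topics(groups)))
    # [('china', 'hoax'), ('russia', 'hoax')]
```

The sketch flags topics that the Western group does not share for closer human review. This mirrors the distinction the authors drew by hand. In practice, the threshold would need tuning against a set of manually matched topics.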

NOTES: All three news groups discussed major topics, such as the COVID-19 case rate and the U.S. economy.

We found that both China and Russia also devoted many articles to country- and region-specific news in such places as India, Pakistan, Europe, Australia, and the Middle East.

Of particular note are the various conspiracy-adjacent topics promoted by Russia and China (COVID-19 Hoax, Liberation/Tyranny, Contact Tracing, and COVID-19 Origins), and the fact that both China and Russia engaged in reputation-laundering for China’s handling of the pandemic.

From the topics mentioned above, we excerpt one below ("Downplaying COVID-19 Severity"). For details about the other topics, please refer to the original publication.

Downplaying COVID-19 Severity

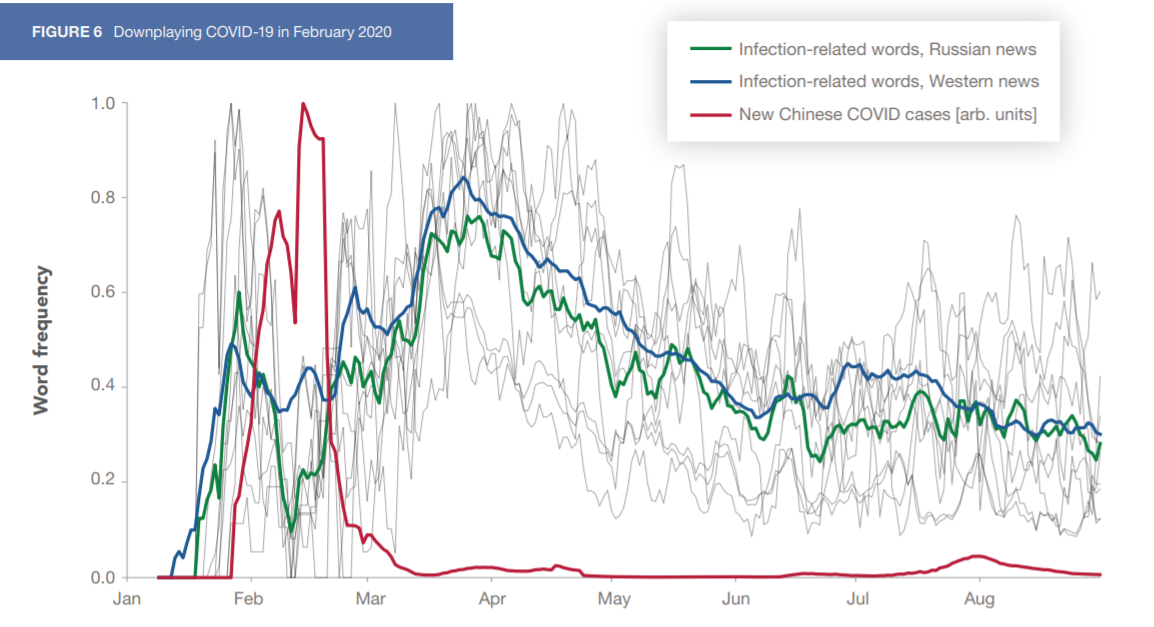

We discovered an unusual downtick in the frequency of words associated with COVID-19 in mid-February 2020 among Russian news sources. These words (coronavirus cases, spread, crisis, help, needed, and fight, plus a few variations on them) are clearly associated with COVID-19 outbreaks. We see these words grow in frequency in both Western media (shown in Figure 6) and Chinese media (not shown, to keep the plot less cluttered) during February, as the first outbreak grew in Hubei Province in China. Surprisingly, Russian news shows a significant decrease in the frequency of these words at the same time, suggesting that Russian news media were downplaying the severity of the crisis in China. As noted already, the decrease in word frequency is also associated with a decrease in the total number of articles using the words coronavirus or COVID. We compare the word frequencies for each country with the official number of new COVID-19 cases in China in Figure 6. Note that the Russian use of these words and the article-publishing rate recovered quickly after mid-February (as the Chinese outbreak was brought under control) and closely tracked Western news frequencies after March. Alternative explanations for why Russian news used these infection-related words less during an outbreak are difficult to come by. Recall that the total number of articles published also dropped (i.e., Russia was writing fewer articles about COVID-19, and the ones that were published downplayed the severity of the outbreak).

NOTE: Gray lines indicate the relative frequency of individual infection-related words and phrases (coronavirus cases, spread of, the spread, coronavirus crisis, crisis, fight the, help, needed, the crisis) among Russian news sources. The green line indicates the Russian mean, and the red line indicates the number of Chinese COVID-19 cases.

In mid-February, we see a significant drop in word frequency, which is somewhat perplexing given the surge in COVID-19 cases. Similar patterns are not seen in Western media (blue line) or Chinese media (not shown).
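The series described in this figure note (per-term relative frequencies plus their mean) can be approximated with a short Python sketch. It assumes articles arrive as `(period, text)` pairs, for example labeled by ISO week. It counts whole-phrase matches over a simple regex tokenization. The report does not specify its tokenization or time binning, and the function names here are hypothetical.

```python
import re
from collections import defaultdict
from statistics import mean

TOKEN = re.compile(r"[a-z0-9\-]+")


def tokenize(text):
    """Lowercase and split into alphanumeric tokens (hyphens kept, e.g. covid-19)."""
    return TOKEN.findall(text.lower())


def count_phrase(tokens, phrase):
    """Count whole-token occurrences of a (possibly multiword) phrase."""
    words = phrase.split()
    n = len(words)
    return sum(1 for i in range(len(tokens) - n + 1) if tokens[i:i + n] == words)


def relative_frequency(articles, terms):
    """Return {period: {term: hits / total tokens}} for every period with text."""
    totals = defaultdict(int)
    hits = defaultdict(lambda: defaultdict(int))
    for period, text in articles:
        tokens = tokenize(text)
        totals[period] += len(tokens)
        for term in terms:
            hits[period][term] += count_phrase(tokens, term)
    return {
        period: {term: hits[period][term] / total for term in terms}
        for period, total in sorted(totals.items())
        if total > 0
    }


def mean_series(freqs):
    """Average the per-term frequencies in each period (the 'mean' line)."""
    return {period: mean(by_term.values()) for period, by_term in freqs.items()}


if __name__ == "__main__":
    articles = [
        ("2020-W06", "The spread of the virus is a crisis."),
        ("2020-W06", "Help is needed."),
        ("2020-W07", "Markets rose today."),
    ]
    freqs = relative_frequency(articles, ["spread of", "crisis", "help"])
    print(mean_series(freqs))
```

Overlapping phrases such as "crisis" and "the crisis" are counted independently, just as the figure tracks them as separate lines. A sharp dip in the mean during a period of rising case counts is the kind of anomaly the authors flagged for closer inspection.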

We conclude that Russian news was deliberately downplaying the outbreak in China, although why it did so remains unclear.

Discussion (excerpt)

Our data-driven investigation into COVID-19 news was in alignment with much of what was seen in RAND’s earlier qualitative investigation.

Russian and Chinese English-language news appears to be manipulated to promote certain narratives, particularly ones that promote Russian and Chinese geopolitical interests at the expense of the United States.

They also appear to promote a variety of different conspiracy theories, especially those that portray the pandemic as an opportunity for governments and elites to take advantage of citizens and those that cast doubt on the origin of COVID-19.

This represents serious misconduct on the part of Russia’s and China’s governments.

By using their authoritarian power over the media to oppose public health measures, they directly harmed global health and well-being.

This case study demonstrates the serious stakes of state-actor misconduct in information and highlights the importance of a robust response.

Funding

Funding for this research was provided by gifts from RAND supporters and income from operations. The research was conducted by the RAND National Security Research Division.

Originally published at https://www.rand.org on April 29, 2021.

Citation

Johnson, Christian and William Marcellino, Bad Actors in News Reporting: Tracking News Manipulation by State Actors. Santa Monica, CA: RAND Corporation, 2021. https://www.rand.org/pubs/research_reports/RRA112-21.html.

TAGS: fake news