modern health . institute

for continuous health transformation

& digital health strategy

Joaquim Cardoso MSc.

Founder and CEO

Chief Research and Strategy Officer (CRSO) — for the Research Institute

Chief Editor — for the Knowledge Portal;

& Independent Senior Advisor — for the Advisory Consulting Unit

September 16, 2023

What is the message?

The use of AI, specifically ChatGPT-4, has a significant and positive impact on the productivity and quality of work in a professional consulting setting.

However, it is essential to understand the limitations and challenges associated with AI and how to effectively integrate it into work processes.

Additionally, there are different approaches, termed “Centaurs” and “Cyborgs,” that consultants can adopt to maximize the benefits of AI while avoiding potential pitfalls.

Statistics and Examples:

Increased Productivity: Consultants using ChatGPT-4 completed 12.2% more tasks on average than those who did not use AI.

Faster Task Completion: Tasks were completed 25.1% more quickly when consultants used AI.

Higher Quality Results: Consultants using AI produced results of 40% higher quality compared to those without AI. This was confirmed by both human and AI graders.

Impact on Low Performers: Low-performing consultants experienced the most significant performance improvements when they used AI, with a 43% increase in their performance.

Challenges with AI Use: Consultants who used AI for tasks it wasn’t suitable for were more likely to make mistakes, highlighting the importance of understanding AI’s limitations.

Jagged Frontier: The capabilities of AI vary across different tasks, creating what the authors call a “Jagged Frontier” where some tasks are well-suited for AI while others are not. For example, AI excelled at tasks like idea generation but struggled with tasks like basic math.

Skill Leveling: AI serves as a skill leveler, helping lower-performing consultants bridge the performance gap with top consultants.

AI’s Limitations: In a specific task designed to challenge AI, human consultants outperformed AI because they could solve problems that AI couldn’t.

Automation Complacency: Overreliance on AI can lead to complacency and reduced human judgment and skills, as seen in other studies.

Centaurs and Cyborgs: Successful AI integration strategies include becoming “Centaurs,” where consultants divide tasks strategically between AI and humans, or “Cyborgs,” where consultants deeply integrate their work with AI, alternating back and forth.

Future of Work: AI is already a powerful disruptor in the workplace, and it is essential for individuals and organizations to make informed choices about how to use AI effectively.

Expanding Technological Frontier: The technological frontier is continually expanding, with the expectation that more powerful AI models will be available in the near future.

AI, such as ChatGPT-4, can significantly enhance productivity and quality in professional work but requires a nuanced approach to maximize its benefits.

Understanding AI’s capabilities and limitations and adopting effective integration strategies are crucial for the future of work.

Dancing on the Jagged Frontier

The paper, along with a stream of excellent work by other scholars, suggests that, regardless of the philosophic and technical debates over the nature and future of AI, it is already a powerful disrupter to how we actually work.

And this is not a hyped new technology that will change the world in five years, or that requires a lot of investment and the resources of huge companies — it is here, NOW.


One page summary (by Ethan Mollick @ linkedin)

We have a new working paper full of experiments on how AI affects work, and the results suggest a big impact using just the technologies available today.

Over the past months, I have been working with a team of amazing social scientists on a set of large pre-registered experiments to test the effect of AI at Boston Consulting Group, the elite consulting firm.

The headline is that consultants using the GPT-4 AI

- finished 12.2% more tasks on average,

- completed tasks 25.1% more quickly, and

- produced 40% higher quality results than those without. And low performers had the biggest gains.

But we also found that people who used AI for tasks it wasn’t good at were more likely to make mistakes, trusting AI when they shouldn’t.

It was often hard for people to know when AI was good or bad at a task because AI is weird, creating a “Jagged Frontier” of capabilities.

But some consultants navigated the Frontier well, by acting as what we call “Cyborgs” or “Centaurs,” moving back and forth between AI and human work in ways that combined the strengths of both.

I think this is the way work is heading, very quickly.

All of this was done by a great team, including the Harvard social scientists Fabrizio Dell’Acqua, Edward McFowland III, and Karim Lakhani; Hila Lifshitz-Assaf from Warwick Business School and Katherine Kellogg of MIT (plus myself). Saran Rajendran, Lisa Krayer, and François Candelon ran the experiment on the BCG side.

Source: https://www.linkedin.com/feed/update/urn:li:activity:7108783242056478720/

DEEP DIVE

Centaurs and Cyborgs on the Jagged Frontier

I think we have an answer on whether AIs will reshape work….

One Useful Thing

ETHAN MOLLICK

16/09/2023

A lot of people have been asking if AI is really a big deal for the future of work. We have a new paper that strongly suggests the answer is YES.

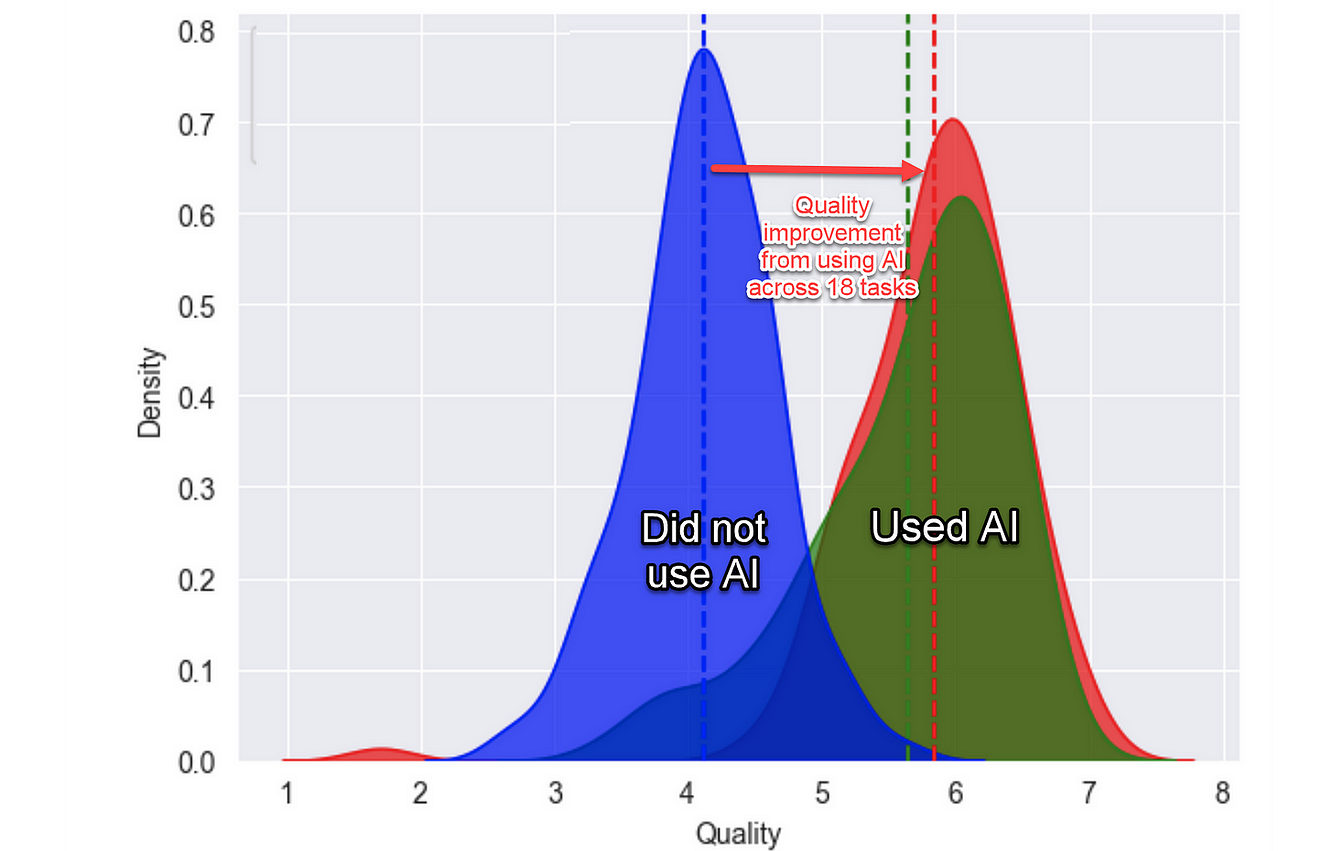

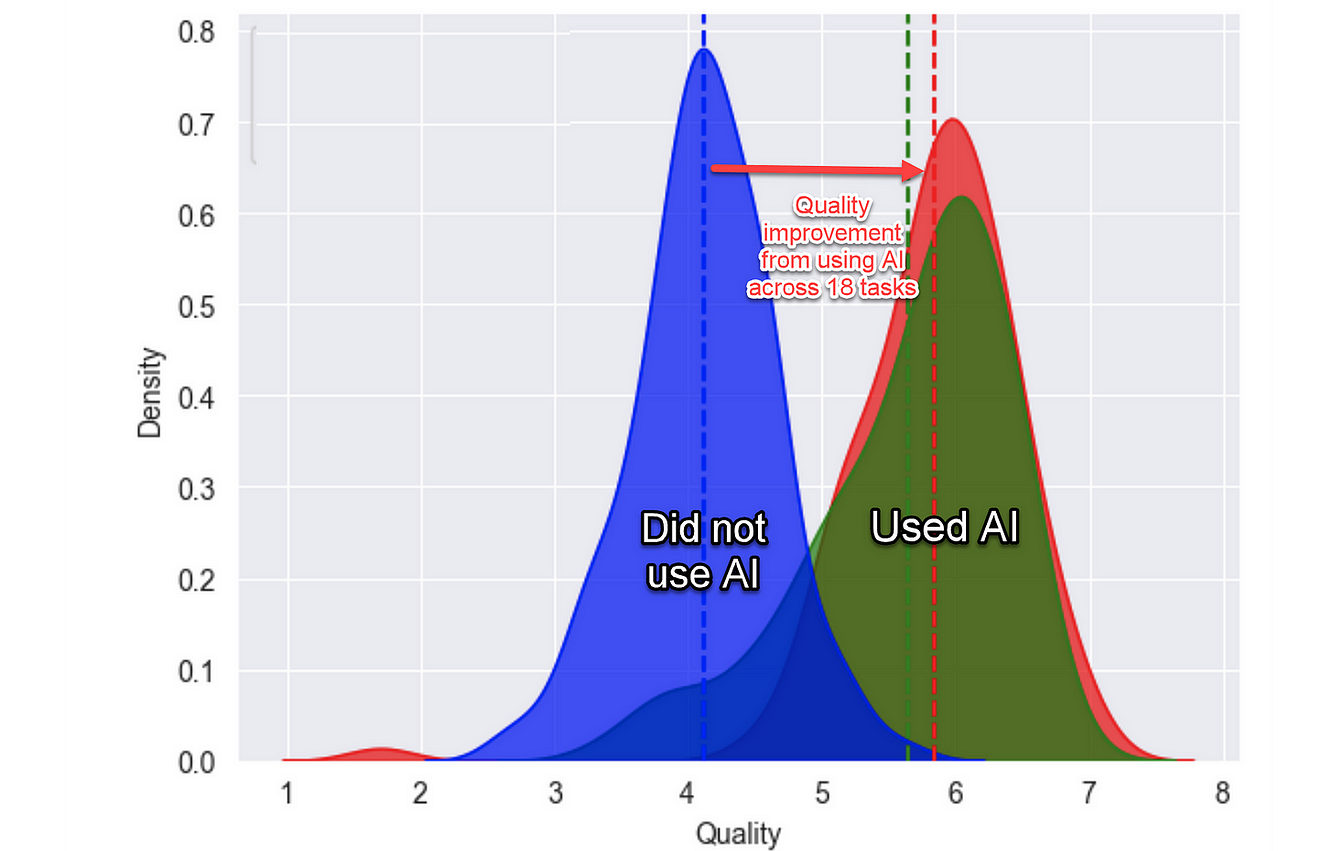

For the last several months, I have been part of a team of social scientists working with Boston Consulting Group, turning their offices into the largest pre-registered experiment on the future of professional work in our AI-haunted age. Our first working paper is out today. There is a ton of important and useful nuance in the paper but let me tell you the headline first: for 18 different tasks selected to be realistic samples of the kinds of work done at an elite consulting company, consultants using ChatGPT-4 outperformed those who did not, by a lot. On every dimension. Every way we measured performance.

Consultants using AI finished 12.2% more tasks on average, completed tasks 25.1% more quickly, and produced 40% higher quality results than those without. Those are some very big impacts. Now, let’s add in the nuance.

First, it is important to know that this effort was multidisciplinary, involving multiple types of experiments and hundreds of interviews, conducted by a great team, including the Harvard social scientists Fabrizio Dell’Acqua, Edward McFowland III, and Karim Lakhani; Hila Lifshitz-Assaf from Warwick Business School and Katherine Kellogg of MIT (plus myself). Saran Rajendran, Lisa Krayer, and François Candelon ran the experiment on the BCG side, using a full 7% of its consulting force (758 consultants). They all did a lot of very careful work that goes far, far beyond the post. So, please look at the paper to make sure you get all the details, especially if you have questions about numbers or methods. I need to simplify a lot to fit 58 pages of findings into a post, and any mistakes are mine, not my co-authors’. Also, while we pre-registered these experiments, this is still a new working paper, so there might be errors or mistakes, and the paper is not yet peer-reviewed. With that in mind, let’s get to the details…

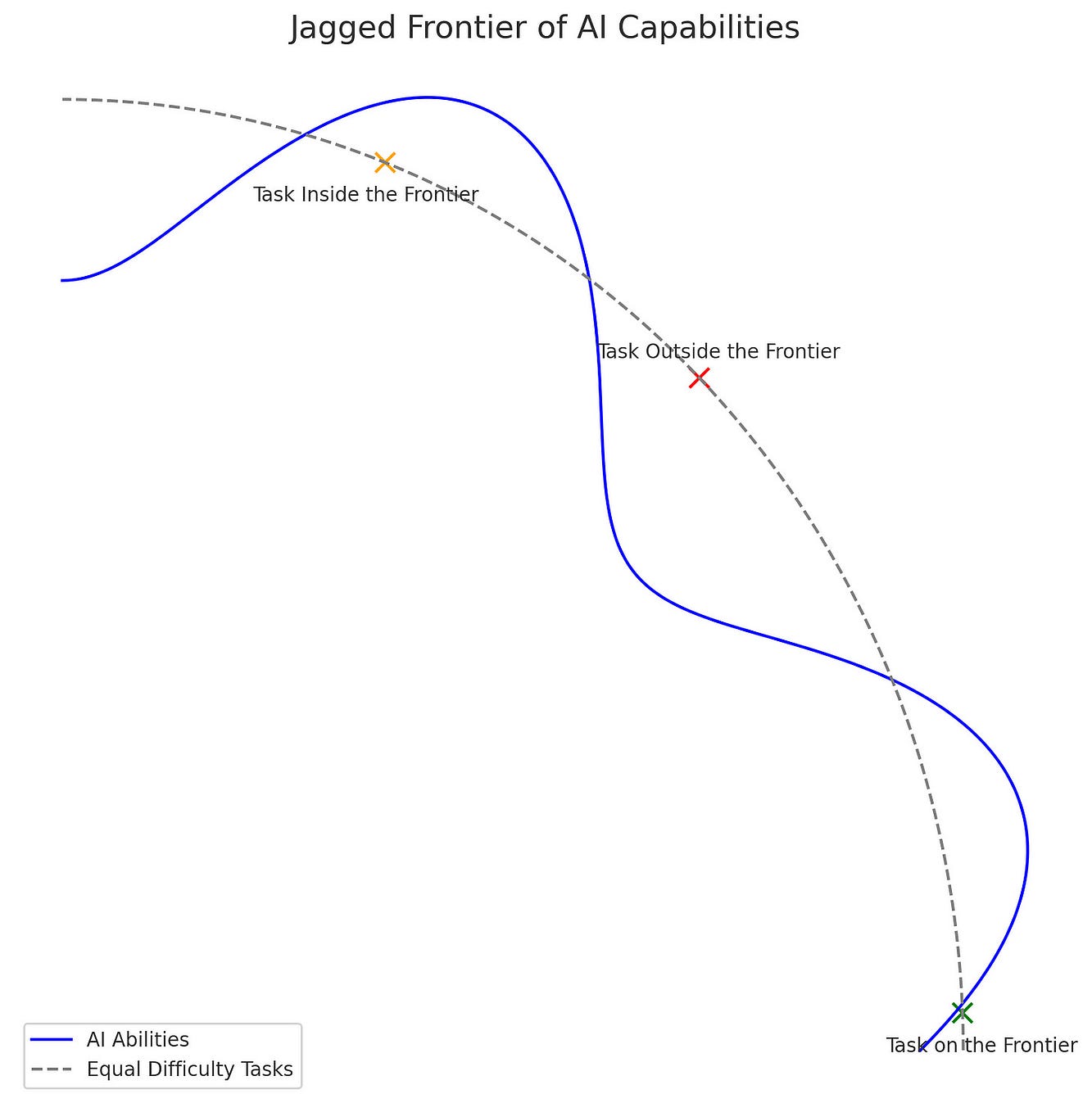

Inside the Jagged Frontier

AI is weird. No one actually knows the full range of capabilities of the most advanced Large Language Models, like GPT-4. No one really knows the best ways to use them, or the conditions under which they fail. There is no instruction manual. On some tasks AI is immensely powerful, and on others it fails completely or subtly. And, unless you use AI a lot, you won’t know which is which.

The result is what we call the “Jagged Frontier” of AI. Imagine a fortress wall, with some towers and battlements jutting out into the countryside, while others fold back towards the center of the castle. That wall is the capability of AI, and the further from the center, the harder the task. Everything inside the wall can be done by the AI, everything outside is hard for the AI to do. The problem is that the wall is invisible, so some tasks that might logically seem to be the same distance away from the center, and therefore equally difficult (say, writing a sonnet and writing a poem of exactly 50 words), are actually on different sides of the wall. The AI is great at the sonnet, but, because of how it conceptualizes the world in tokens, rather than words, it consistently produces poems of more or less than 50 words. Similarly, some unexpected tasks (like idea generation) are easy for AIs while other tasks that seem to be easy for machines to do (like basic math) are challenges for LLMs.

I asked ChatGPT with Code Interpreter to visualize this for you:

To test the true impact of AI on knowledge work, we took hundreds of consultants and randomized whether they were allowed to use AI. We gave those who were allowed to use AI access to GPT-4, the same model everyone in 169 countries can access for free with Bing, or by paying $20 a month to OpenAI. No special fine-tuning or prompting, just GPT-4 through the API.
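The randomization step can be sketched in a few lines. This is a minimal illustration of simple random assignment, not the procedure the paper actually used: the condition labels follow the three conditions named in the abstract, the 758 figure comes from the post, and the function name, the seed, and the even three-way split are all assumptions made for the example.

```python
import random
from collections import Counter

# Labels mirror the three conditions in the abstract; the names are illustrative.
CONDITIONS = ("no_ai", "gpt4", "gpt4_with_overview")

def assign_conditions(participant_ids, seed=0):
    """Shuffle participants, then deal them round-robin into the conditions."""
    rng = random.Random(seed)  # fixed seed (an assumption) keeps the result reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    return {pid: CONDITIONS[i % len(CONDITIONS)] for i, pid in enumerate(shuffled)}

# Hypothetical participant IDs 0..757 standing in for the 758 consultants.
assignment = assign_conditions(range(758))
group_sizes = Counter(assignment.values())
print(group_sizes)
```

Shuffling first and then dealing round-robin keeps the group sizes within one of each other, while who lands in which group is still decided by chance rather than by the experimenter.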

We then did a lot of pre-testing and surveying to establish baselines, and asked consultants to do a wide variety of work for a fictional shoe company, work that the BCG team had selected to accurately represent what consultants do. There were creative tasks (“Propose at least 10 ideas for a new shoe targeting an underserved market or sport.”), analytical tasks (“Segment the footwear industry market based on users.”), writing and marketing tasks (“Draft a press release marketing copy for your product.”), and persuasiveness tasks (“Pen an inspirational memo to employees detailing why your product would outshine competitors.”). We even checked with a shoe company executive to ensure that this work was realistic, and it was. And, knowing AI, these are tasks that we might expect to be inside the frontier.

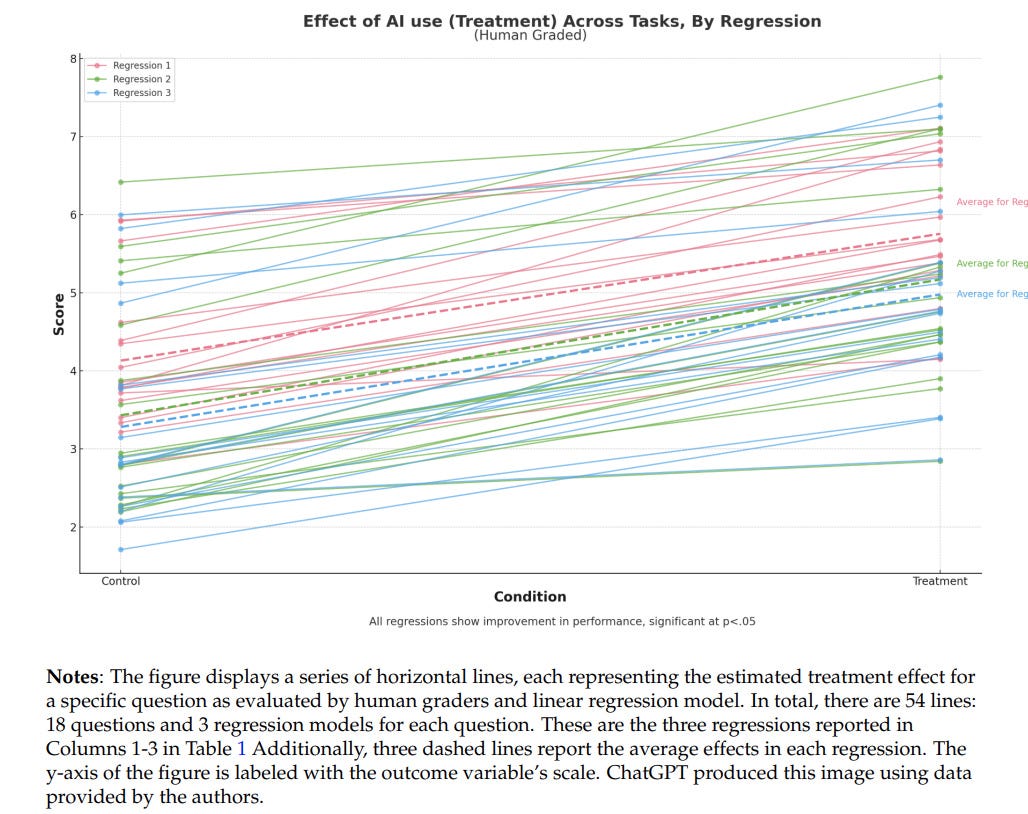

In line with our theories, and as we have discussed, we found that the consultants with AI access did significantly better, whether we briefly introduced them to AI first (the “overview” group in the diagram) or did not. This was true for every measurement, whether the time it took to complete tasks, the number of tasks completed overall (we gave them an overall time limit) or the quality of the outputs. We rated that quality using both human and AI graders, who agreed with each other (itself an interesting finding).

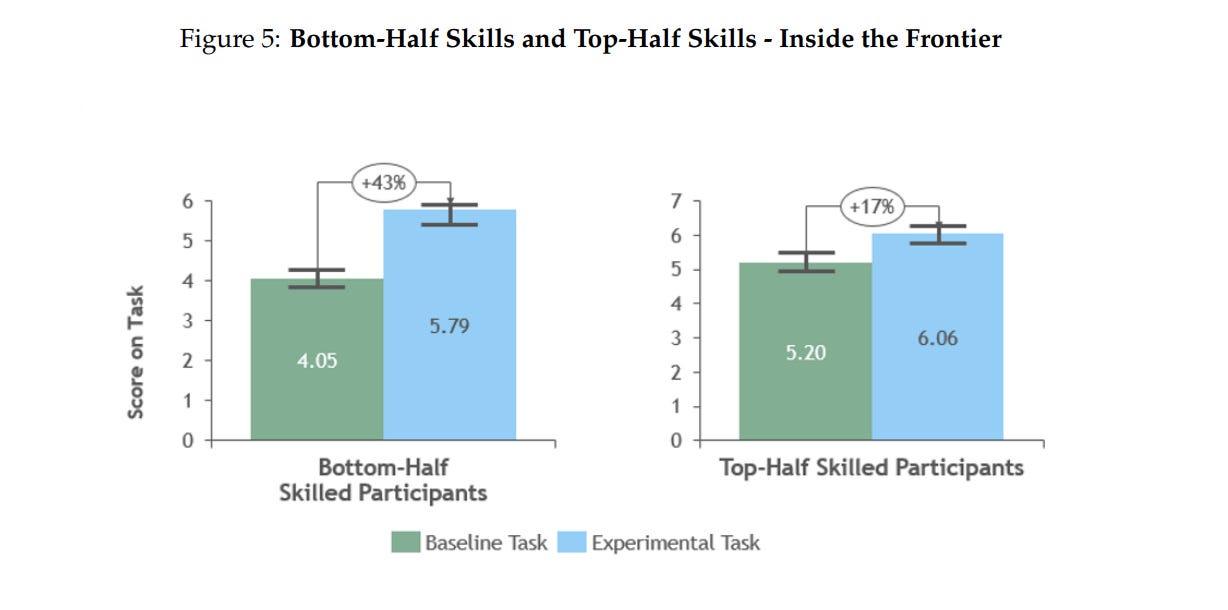

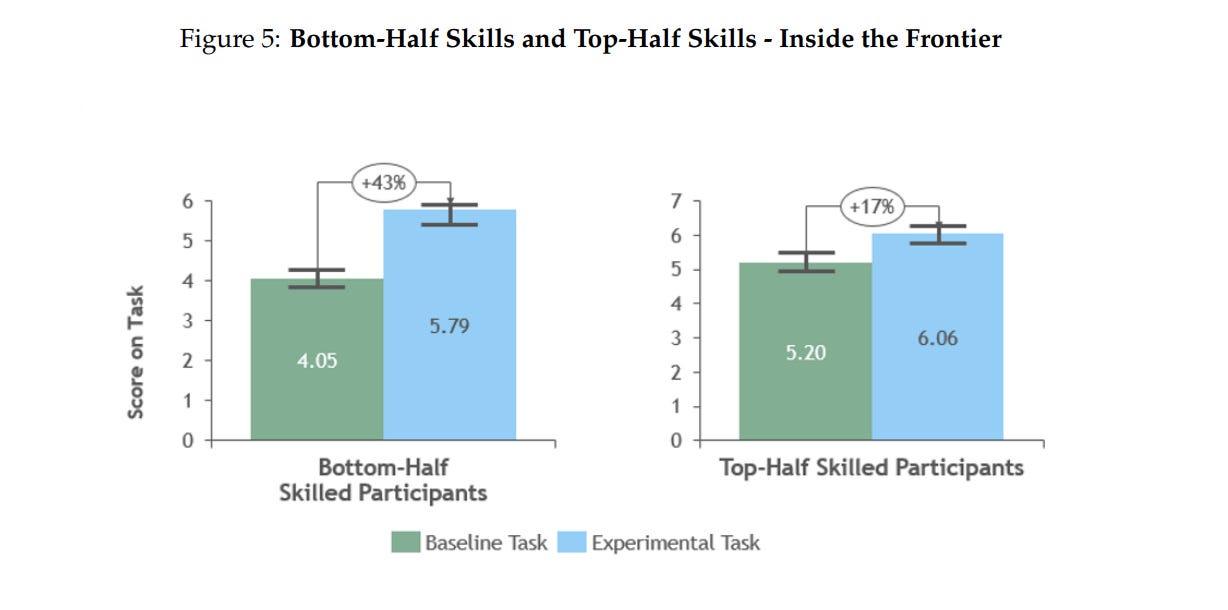

We also found something else interesting, an effect that is increasingly apparent in other studies of AI: it works as a skill leveler. The consultants who scored the worst when we assessed them at the start of the experiment had the biggest jump in their performance, 43%, when they got to use AI. The top consultants still got a boost, but less of one. Looking at these results, I do not think enough people are considering what it means when a technology raises all workers to the top tiers of performance. It may be like how it used to matter whether miners were good or bad at digging through rock… until the steam shovel was invented and now differences in digging ability do not matter anymore. AI is not quite at that level of change, but skill levelling is going to have a big impact.
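To see what skill leveling means arithmetically, here is a small sketch. Only the two percentage gains come from the paper's abstract (43% for consultants below the average performance threshold, 17% for those above). The baseline scores are hypothetical numbers on an arbitrary scale, chosen purely for illustration.

```python
# Hypothetical baseline scores on an arbitrary scale (NOT from the paper).
BASELINE = {"bottom_half": 4.0, "top_half": 6.0}
# Relative gains reported in the paper's abstract.
GAIN = {"bottom_half": 0.43, "top_half": 0.17}

def with_ai(group):
    """Score after applying the group's reported relative gain."""
    return BASELINE[group] * (1 + GAIN[group])

gap_before = BASELINE["top_half"] - BASELINE["bottom_half"]
gap_after = with_ai("top_half") - with_ai("bottom_half")
print(f"gap before AI: {gap_before:.2f}")
print(f"gap after AI:  {gap_after:.2f}")
```

With these made-up baselines the gap between the groups shrinks from 2.00 to 1.30, even though both groups improve. More generally, with gains of 43% and 17%, the absolute gap narrows whenever the top group's baseline is less than about 2.5 times the bottom group's.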

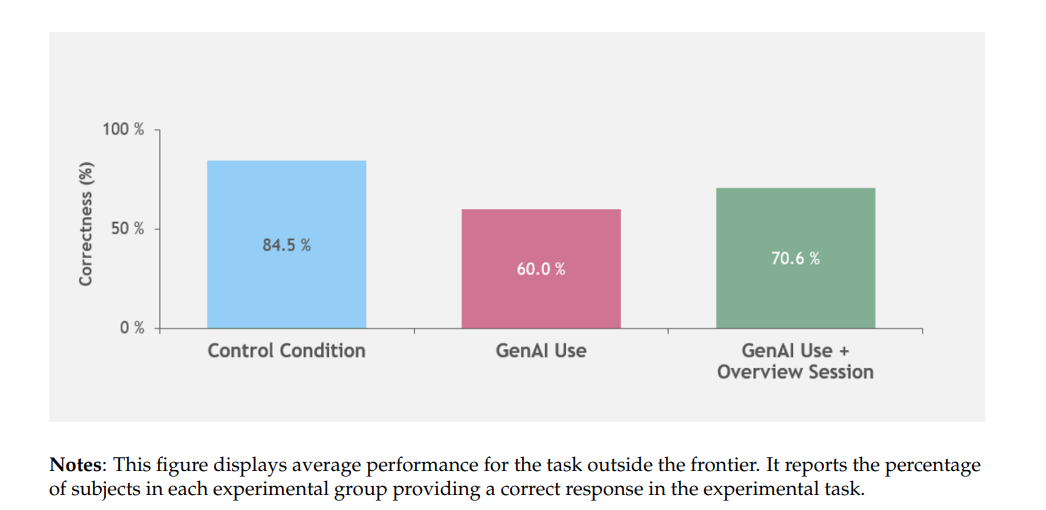

Outside the Jagged Frontier

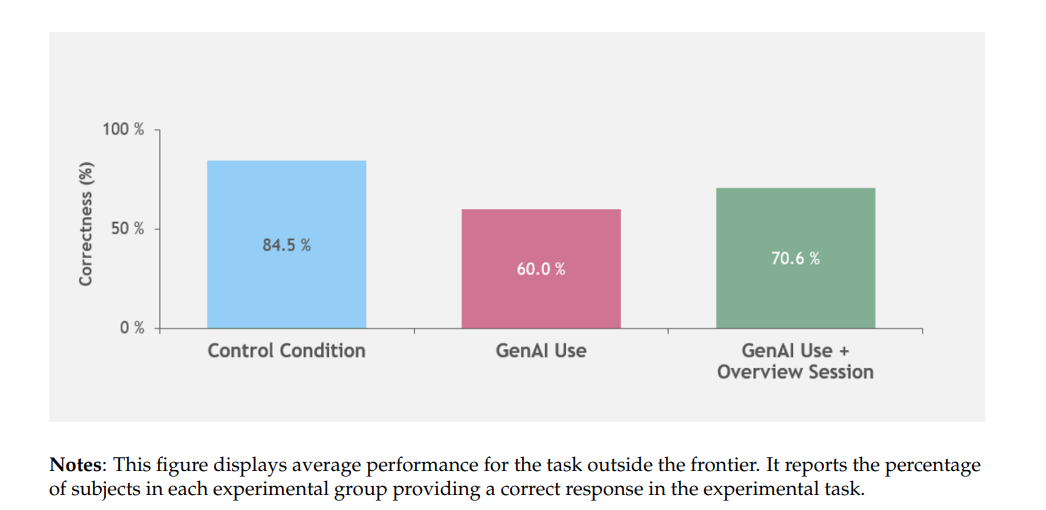

But there is more to the story. BCG designed one more task, this one carefully selected to ensure that the AI couldn’t come to a correct answer. This wasn’t easy. As we say in the paper “since AI proved surprisingly capable, it was difficult to design a task in this experiment outside the AI’s frontier where humans with high human capital doing their job would consistently outperform AI.” But we identified a task that used the blind spots of AI to ensure it would give a wrong, but convincing, answer to a problem that humans would be able to solve. Indeed, human consultants got the problem right 84% of the time without AI help, but when consultants used the AI, they did worse — only getting it right 60–70% of the time. What happened?

In a different paper than the one we worked on together, Fabrizio Dell’Acqua shows why relying too much on AI can backfire. In an experiment, he found that recruiters who used high-quality AI became lazy, careless, and less skilled in their own judgment. They missed out on some brilliant applicants and made worse decisions than recruiters who used low-quality AI or no AI at all. When the AI is very good, humans have no reason to work hard and pay attention. They let the AI take over, instead of using it as a tool. He called this “falling asleep at the wheel”, and it can hurt human learning, skill development, and productivity.

In our experiment, we also found that the consultants fell asleep at the wheel — those using AI actually had less accurate answers than those who were not allowed to use AI (but they still did a better job writing up the results than consultants who did not use AI). The authoritativeness of AI can be deceptive if you don’t know where the frontier lies.
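The size of that accuracy drop is easier to read once percentage points are separated from relative change. The sketch below combines the 84% figure from this post with the 19-percentage-point drop reported in the paper's abstract. Treating the two numbers as directly combinable is an assumption, since they may come from differently specified comparisons. The function name is illustrative.

```python
def relative_drop(baseline_pct, drop_points):
    """Convert an absolute drop in percentage points into a relative drop."""
    return drop_points / baseline_pct

baseline_pct = 84.0  # share correct without AI, from the post
drop_points = 19.0   # percentage-point drop with AI, from the paper's abstract

with_ai_pct = baseline_pct - drop_points
print(f"accuracy with AI: {with_ai_pct:.0f}%")
print(f"relative drop:    {relative_drop(baseline_pct, drop_points):.1%}")
```

Under that assumption, accuracy with AI comes out at 65%, which sits inside the 60–70% range quoted above, and the relative decline is about 22.6% of the no-AI baseline.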

Centaurs and Cyborgs

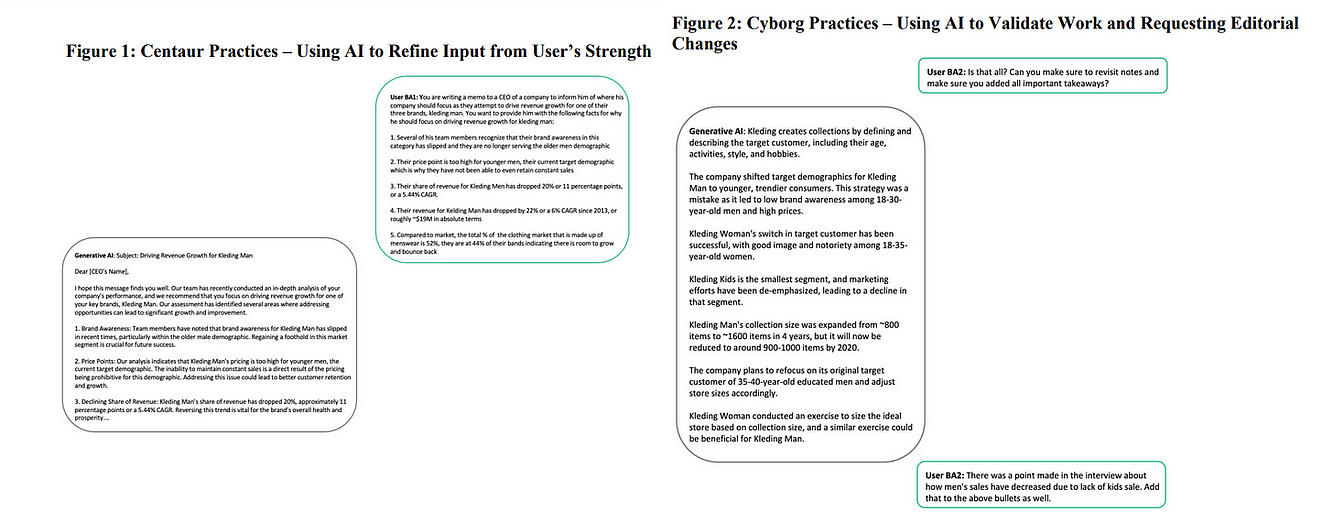

But a lot of consultants did get both inside and outside the frontier tasks right, gaining the benefits of AI without the disadvantages. The key seemed to be following one of two approaches: becoming a Centaur or becoming a Cyborg. Fortunately, this does not involve any actual grafting of electronic gizmos to your body or getting cursed to turn into the half-human/half-horse of Greek myth. They are rather two approaches to navigating the jagged frontier of AI that integrate the work of person and machine.

Centaur work has a clear line between person and machine, like the clear line between the human torso and horse body of the mythical centaur. Centaurs have a strategic division of labor, switching between AI and human tasks, allocating responsibilities based on the strengths and capabilities of each entity. When I am doing an analysis with the help of AI, I often approach it as a Centaur. I will decide on what statistical techniques to do, but then let the AI handle producing graphs. In our study at BCG, centaurs would do the work they were strongest at themselves, and then hand off tasks inside the jagged frontier to the AI.

On the other hand, Cyborgs blend machine and person, integrating the two deeply. Cyborgs don’t just delegate tasks; they intertwine their efforts with AI, moving back and forth over the jagged frontier. Bits of tasks get handed to the AI, such as initiating a sentence for the AI to complete, so that Cyborgs find themselves working in tandem with the AI. This is how I suggest approaching using AI for writing, for example. It is also how I generated two of the illustrations in the paper (the Jagged Frontier image and the 54-line graph, both of which were built by ChatGPT, with my initial direction and guidance).

Dancing on the Jagged Frontier

Our paper, along with a stream of excellent work by other scholars, suggests that, regardless of the philosophic and technical debates over the nature and future of AI, it is already a powerful disrupter to how we actually work. And this is not a hyped new technology that will change the world in five years, or that requires a lot of investment and the resources of huge companies — it is here, NOW. The tools the elite consultants used to supercharge their work are the exact same as the ones available to everyone reading this post. And the tools the consultants used will soon be much worse than what is available to you. Because the technological frontier is not just jagged, it is expanding. I am very confident that in the next year, at least two companies will release models more powerful than GPT-4. The Jagged Frontier advances, and we have to be ready for that.

Even aside from any anxiety that statement might cause, it is also worth noting the other downsides of AI. People really can go on autopilot when using AI, falling asleep at the wheel and failing to notice AI mistakes. And, like other research, we also found that AI outputs, while of higher quality than those of humans, were also a bit homogeneous and same-y in aggregate. Which is why Cyborgs and Centaurs are important: they allow humans to work with AI to produce more varied, more correct, and better results than either humans or AI can do alone. And becoming one is not hard. Just use AI enough for work tasks and you will start to see the shape of the jagged frontier, and start to understand where AI is scarily good… and where it falls short.

In my mind, the question is no longer about whether AI is going to reshape work, but what we want that to mean. We get to make choices about how we want to use AI to help make work more productive, interesting, and meaningful. But we have to make those choices soon, so that we can begin to actively use AI in ethical and valuable ways, as Cyborgs and Centaurs, rather than merely reacting to technological change. Meanwhile, the Jagged Frontier advances.

Originally published at https://www.oneusefulthing.org.

References

Navigating the Jagged Technological Frontier: Field Experimental Evidence of the Effects of AI on Knowledge Worker Productivity and Quality

Harvard Business School

Fabrizio Dell’Acqua, Saran Rajendran, Edward McFowland III, Lisa Krayer, Ethan Mollick, François Candelon, Hila Lifshitz-Assaf, Karim R. Lakhani, Katherine C. Kellogg

Abstract

The public release of Large Language Models (LLMs) has sparked tremendous interest in how humans will use Artificial Intelligence (AI) to accomplish a variety of tasks.

In our study conducted with Boston Consulting Group, a global management consulting firm, we examine the performance implications of AI on realistic, complex, and knowledge-intensive tasks.

The pre-registered experiment involved 758 consultants comprising about 7% of the individual contributor-level consultants at the company.

After establishing a performance baseline on a similar task, subjects were randomly assigned to one of three conditions:

- no AI access,

- GPT-4 AI access, or

- GPT-4 AI access with a prompt engineering overview.

We suggest that the capabilities of AI create a “jagged technological frontier” where some tasks are easily done by AI, while others, though seemingly similar in difficulty level, are outside the current capability of AI.

For each one of a set of 18 realistic consulting tasks within the frontier of AI capabilities, consultants using AI were significantly more productive (they completed 12.2% more tasks on average, and completed tasks 25.1% more quickly), and produced significantly higher quality results (more than 40% higher quality compared to a control group).

Consultants across the skills distribution benefited significantly from having AI augmentation, with those below the average performance threshold increasing by 43% and those above increasing by 17% compared to their own scores.

For a task selected to be outside the frontier, however, consultants using AI were 19 percentage points less likely to produce correct solutions compared to those without AI.

Further, our analysis shows the emergence of two distinctive patterns of successful AI use by humans along a spectrum of human-AI integration.

- One set of consultants acted as “Centaurs,” like the mythical half-horse/half-human creature, dividing and delegating their solution-creation activities to the AI or to themselves.

- Another set of consultants acted more like “Cyborgs,” completely integrating their task flow with the AI and continually interacting with the technology.
