the health strategist portal

the knowledge portal of “the health strategist — institute”

for in-person health strategy and digital health strategy

Joaquim Cardoso MSc

Chief Researcher and Strategy Officer (CRSO) for “the health strategist” research unit

Chief Editor for “the health strategist” knowledge portal

Senior Advisor for “the health strategist” advisory consulting

July 12, 2023

ONE PAGE SUMMARY

Potential risks of unfair use of AI-generated content:

- Recent advancements in large language models have raised concerns about the potential for unethical or unfair use of AI-generated text in academic environments.

Detection tools for AI-generated text:

- The paper examines existing detection tools designed to differentiate between human-written text and AI-generated text.

Evaluation based on accuracy and error type analysis:

- The researchers evaluate the detection tools based on their accuracy in identifying AI-generated text and analyze the types of errors made by these tools.

Reliability of existing detection tools:

- The study finds that the available detection tools are neither accurate nor reliable.

- They are biased towards classifying text as human-written, so AI-generated text often goes undetected.

Impact of machine translation and content obfuscation:

- The research investigates how machine translation and content obfuscation techniques affect the detection of AI-generated text.

- It concludes that these techniques significantly worsen the performance of the detection tools.

Conclusion:

- Overall, the paper underscores the limitations of existing detection tools in accurately identifying AI-generated text and highlights the challenges in addressing the potential risks associated with the unfair use of AI in academic environments.

Infographic

Testing of Detection Tools for AI-Generated Text

arXiv

Debora Weber-Wulff (University of Applied Sciences HTW Berlin, Germany), Alla Anohina-Naumeca (Riga Technical University, Latvia), Sonja Bjelobaba (Uppsala University, Sweden), Tomáš Foltýnek (Masaryk University, Czechia), Jean Guerrero-Dib (Universidad de Monterrey, Mexico), Olumide Popoola (Queen Mary University of London, UK), Petr Šigut (Masaryk University, Czechia), Lorna Waddington (University of Leeds, UK)

July 2023

Recent advances in generative pre-trained transformer large language models have emphasised the potential risks of unfair use of artificial intelligence (AI) generated content in an academic environment and intensified efforts in searching for solutions to detect such content.

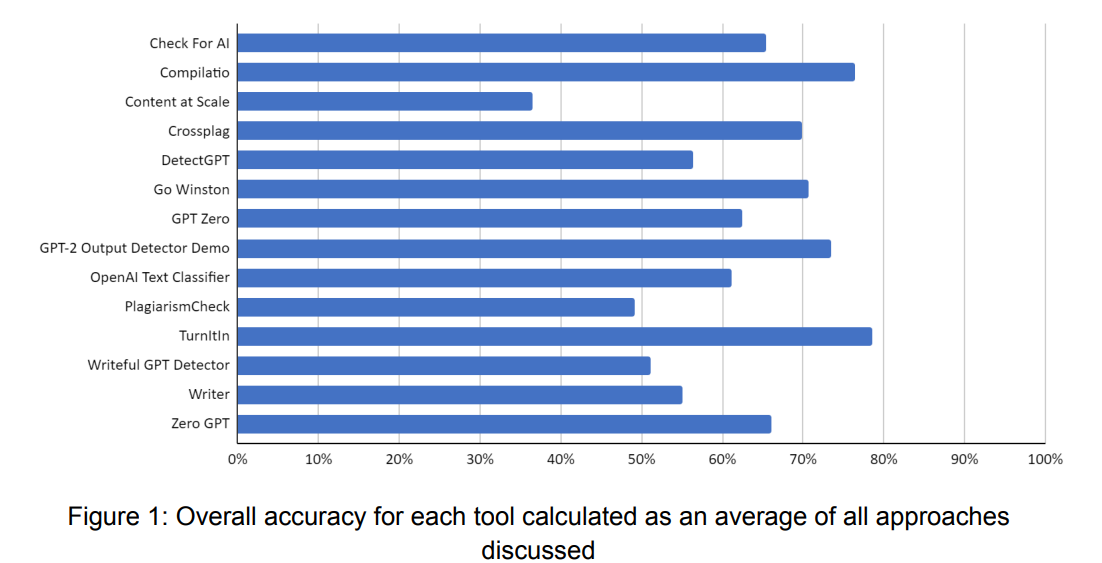

The paper examines the general functionality of detection tools for artificial intelligence generated text and evaluates them based on accuracy and error type analysis.

Specifically, the study seeks to answer research questions about whether existing detection tools can reliably differentiate between human-written text and ChatGPT-generated text, and whether machine translation and content obfuscation techniques affect the detection of AI-generated text.

The research covers 12 publicly available tools and two commercial systems (Turnitin and PlagiarismCheck) that are widely used in the academic setting.

The researchers conclude that the available detection tools are neither accurate nor reliable and have a main bias towards classifying the output as human-written rather than detecting AI-generated text.

Furthermore, content obfuscation techniques significantly worsen the performance of tools.
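To make the idea of accuracy and error type analysis concrete, here is a minimal Python sketch. It is not the scoring scheme used in the paper: the function name `evaluate_detector`, the two-label setup ("human" vs "ai"), and the sample data are all assumptions made up for illustration. It shows how a detector that leans towards "human-written" can be seen in its error rates.

```python
from collections import Counter


def evaluate_detector(samples):
    """Summarise a detector's results.

    `samples` is a list of (true_label, predicted_label) pairs, where each
    label is either "human" or "ai". This is a hypothetical, simplified setup.
    """
    counts = Counter(samples)
    tp = counts[("ai", "ai")]        # AI text correctly flagged
    fn = counts[("ai", "human")]     # AI text missed (false negative)
    fp = counts[("human", "ai")]     # human text wrongly flagged (false positive)
    tn = counts[("human", "human")]  # human text correctly passed
    total = tp + fn + fp + tn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "false_negative_rate": fn / (tp + fn) if (tp + fn) else 0.0,
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
    }


# Invented results for 10 human-written and 10 AI-generated documents.
samples = (
    [("human", "human")] * 9 + [("human", "ai")] * 1
    + [("ai", "ai")] * 4 + [("ai", "human")] * 6
)

report = evaluate_detector(samples)
print(report)
```

In this invented example the false negative rate is much higher than the false positive rate, which is the pattern one would expect from a tool biased towards labelling text as human-written. Accuracy on its own would hide that asymmetry, which is why the error types are reported separately.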

The study makes several significant contributions.

- First, it summarises up-to-date similar scientific and non-scientific efforts in the field.

- Second, it presents the result of one of the most comprehensive tests conducted so far, based on a rigorous research methodology, an original document set, and a broad coverage of tools.

- Third, it discusses the implications and drawbacks of using detection tools for AI-generated text in academic settings.

Comments: 38 pages, 13 figures and 10 tables, and an appendix with 18 figures. Submitted to the International Journal for Educational Integrity

Subjects: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Computers and Society (cs.CY)