the health transformation knowledge portal

Joaquim Cardoso MSc

March 5, 2024

This summary is based on the article “Groq: Pioneering the Future of AI with the Language Processing Unit (LPU)”, published by Gene Bernardin on February 20, 2024.

What is the message?

Groq, an emerging player in the AI industry, is challenging the long-standing dominance of GPUs with its innovative Language Processing Unit (LPU), setting new standards for speed and efficiency when running large language models (LLMs).

The original article explores Groq’s groundbreaking technology, its implications for the AI landscape, and its significance for developers and businesses.

What are the key points?

The Rise of the LPU: Groq’s LPU is designed for sequential processing, which matches the way language models generate text one token at a time, and it outperforms traditional GPU-based systems on language tasks. It targets two bottlenecks, compute density and memory bandwidth, resulting in faster and more energy-efficient processing.

Groq’s Vision and Strategy: Founded by Jonathan Ross, Groq prioritizes software and compiler development to optimize hardware performance. The company supports standard machine learning frameworks, making its technology accessible to developers. GroqLabs explores diverse applications beyond chat, showcasing the LPU’s versatility.

Industry Implications: Groq’s introduction of the LPU marks a significant milestone for the AI industry. It challenges established players such as NVIDIA, AMD, and Intel and opens the door to new AI applications and use cases. Independent benchmarks validate the LPU’s performance advantage, positioning Groq as a leader in AI acceleration.
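To make the memory-bandwidth bottleneck mentioned under "The Rise of the LPU" more concrete, here is a back-of-envelope sketch. It rests on a common simplifying assumption that is ours, not the original article’s: when a model generates text one token at a time, each new token requires reading every model weight from memory once, so single-stream generation speed is capped at roughly memory bandwidth divided by model size in bytes. The function name and the example values (a 70-billion-parameter model, 2 bytes per parameter, 2 TB/s of bandwidth) are hypothetical illustrations, not Groq or GPU specifications.

```python
# Back-of-envelope ceiling on single-stream generation speed when it is
# limited by memory bandwidth. Simplifying assumption: every weight is read
# once per generated token; caching, batching and compute limits are ignored.


def max_tokens_per_second(num_params: float, bytes_per_param: float,
                          bandwidth_bytes_per_s: float) -> float:
    """Return the bandwidth-bound upper limit on tokens generated per second."""
    model_bytes = num_params * bytes_per_param
    return bandwidth_bytes_per_s / model_bytes


if __name__ == "__main__":
    # Hypothetical example: a 70-billion-parameter model stored in 16-bit
    # precision (2 bytes per parameter) on a device with 2 TB/s of memory
    # bandwidth. Illustrative numbers only, not vendor specifications.
    ceiling = max_tokens_per_second(70e9, 2, 2e12)
    print(f"{ceiling:.1f} tokens/s")  # 2e12 / 1.4e11 is about 14.3
```

Running it prints `14.3 tokens/s`. Under this assumption the ceiling scales linearly with bandwidth and inversely with the bytes moved per token, which illustrates why memory bandwidth is framed as a key bottleneck for language workloads.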

What are the key statistics?

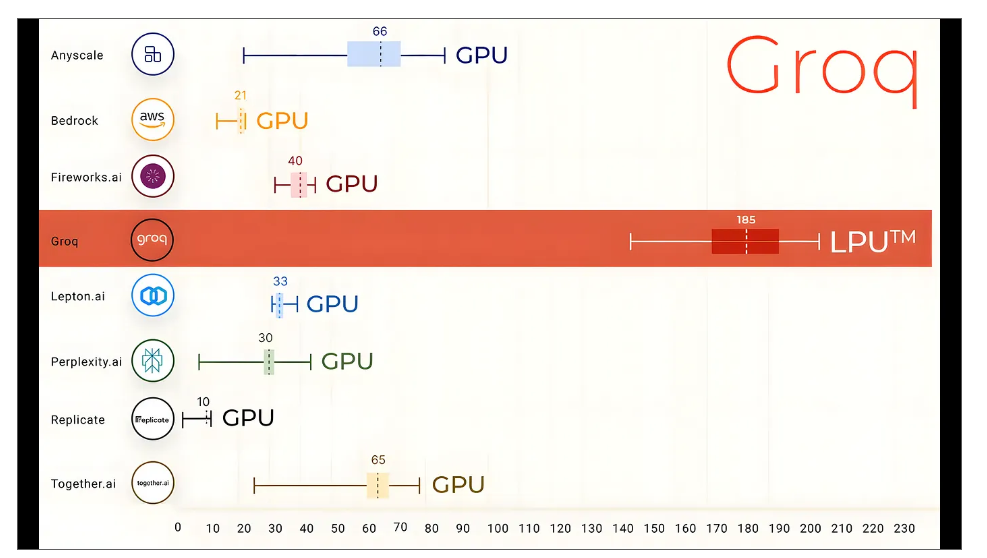

Groq’s LPU outperforms traditional GPUs when running open-source LLMs such as Llama 2 and Mixtral, showcasing remarkable speed and efficiency.

Independent benchmarks show Groq leading on key performance indicators, including Latency vs. Throughput and Total Response Time.
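Latency, throughput and total response time are related. The sketch below assumes a common simplification, which is ours and not necessarily ArtificialAnalysis.ai’s exact methodology: total response time is the latency until the first token arrives plus the time to stream the output at a steady throughput. The `Endpoint` class, the system names and all numbers are hypothetical, chosen only to show the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    """Hypothetical inference endpoint described by two measurements."""
    name: str
    latency_s: float          # seconds until the first token arrives
    throughput_tok_s: float   # output tokens per second once streaming starts


def total_response_time(ep: Endpoint, output_tokens: int = 100) -> float:
    """Simplified estimate: wait for the first token, then stream the output."""
    return ep.latency_s + output_tokens / ep.throughput_tok_s


if __name__ == "__main__":
    # Illustrative numbers only; these are not benchmark results.
    endpoints = [
        Endpoint("system_a", latency_s=0.5, throughput_tok_s=100.0),
        Endpoint("system_b", latency_s=0.3, throughput_tok_s=400.0),
    ]
    # Rank endpoints from fastest to slowest total response time.
    for ep in sorted(endpoints, key=total_response_time):
        print(f"{ep.name}: {total_response_time(ep):.2f} s for 100 tokens")
```

Here `system_b` finishes a 100-token answer in about 0.55 s versus 1.50 s for `system_a`. Under this model, throughput dominates total response time for longer outputs, while latency dominates for very short ones, which is why benchmarks plot the two together.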

What are the key examples?

ArtificialAnalysis.ai’s benchmark of Groq’s LPU showed it outperforming GPU-based systems across several performance metrics.

Groq’s collaboration with Mistral and Meta lets users choose among open-source models such as “Mixtral 8x7B-32k” and “Llama 2 70B-4k” through a user-friendly web interface.

Conclusion

Groq’s LPU represents a paradigm shift in AI processing, offering unparalleled speed, efficiency, and versatility for language tasks.

As Groq continues to innovate and expand its offerings, the LPU is poised to drive the next wave of AI applications, revolutionizing industries and unlocking new possibilities for developers and businesses worldwide.
