This is a republication of the article “How to save more lives and avoid a privacy apocalypse”, with the title above, highlighting the point in question.

Financial Times

Tim Harford

June 30, 2022

In the mid-1990s, the Massachusetts Group Insurance Commission, an insurer of state employees, released healthcare data that described millions of interactions between patients and the healthcare system to researchers.

Such records could easily reveal highly sensitive information — psychiatric consultations, sexually transmitted infections, addiction to painkillers, bed-wetting — not to mention the exact timing of each treatment. So, naturally, the GIC removed names, addresses and social security details from the records. Safely anonymised, these could then be used to answer life-saving questions about which treatments worked best and at what cost.

That is not how Latanya Sweeney saw it. Then a graduate student and now a professor at Harvard University, Sweeney noticed most combinations of gender and date of birth (there are about 60,000 of them) were unique within each broad ZIP code of 25,000 people.
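
The arithmetic behind that observation can be checked on the back of an envelope. The sketch below assumes that people are spread evenly and independently across the 60,000 combinations. That is a simplification of ours, not Sweeney's method, and the function name is illustrative. Under that assumption, roughly two-thirds of the residents of a 25,000-person ZIP code share their combination with nobody else.

```python
# Back-of-envelope uniqueness estimate. The even-spread assumption is ours;
# real birth dates cluster, so treat the result as a rough guide only.

def expected_unique_fraction(population: int, combinations: int) -> float:
    """Chance that nobody else in the area shares a given person's combination."""
    return (1 - 1 / combinations) ** (population - 1)

# Figures quoted in the article.
combinations = 60_000   # gender x date-of-birth combinations
population = 25_000     # people in one broad ZIP code

fraction = expected_unique_fraction(population, combinations)
print(f"Expected share of residents who are unique: {fraction:.0%}")
```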

The vast majority of people could be uniquely identified by cross-referencing voter records with the anonymised health records.

Only one medical record, for example, had the same birth date, gender and ZIP code as the then governor of Massachusetts, William Weld. Sweeney made her point unmistakable by mailing Weld a copy of his own supposedly anonymous medical records.
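
The cross-referencing itself needs nothing more sophisticated than a join on the fields the two data sets share. Here is a minimal sketch, assuming both sources carry birth date, gender and ZIP code. Every row is synthetic and invented for illustration, and the field names are hypothetical rather than taken from the GIC release.

```python
from collections import defaultdict

# Synthetic rows invented for illustration; none describe real people.
health_records = [
    {"birth_date": "1950-01-01", "gender": "M", "zip": "00001", "diagnosis": "condition A"},
    {"birth_date": "1950-01-01", "gender": "F", "zip": "00001", "diagnosis": "condition B"},
    {"birth_date": "1972-06-15", "gender": "F", "zip": "00002", "diagnosis": "condition C"},
    {"birth_date": "1972-06-15", "gender": "F", "zip": "00002", "diagnosis": "condition D"},
]
voter_roll = [
    {"name": "Voter One", "birth_date": "1950-01-01", "gender": "M", "zip": "00001"},
    {"name": "Voter Two", "birth_date": "1972-06-15", "gender": "F", "zip": "00002"},
]

# Fields present in both data sets, even after names are stripped.
QUASI_IDENTIFIERS = ("birth_date", "gender", "zip")


def key(row):
    """Build the join key from the shared fields."""
    return tuple(row[field] for field in QUASI_IDENTIFIERS)


def link(health, voters):
    """Return {name: diagnosis} for voters matching exactly one health record."""
    by_key = defaultdict(list)
    for record in health:
        by_key[key(record)].append(record)
    matches = {}
    for voter in voters:
        candidates = by_key.get(key(voter), [])
        if len(candidates) == 1:  # a unique match re-identifies the record
            matches[voter["name"]] = candidates[0]["diagnosis"]
    return matches


reidentified = link(health_records, voter_roll)
print(reidentified)
```

In this toy data, the first voter matches a single health record and is re-identified, while the second matches two records and stays ambiguous.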

In nerd circles, there are many such stories. Large data sets can be de-anonymised with ease; this fact is as screamingly obvious to data-science professionals as it is surprising to the layman.

The more detailed the data, the easier and more consequential de-anonymisation becomes.

But this particular problem has an equal and opposite opportunity: the better the data, the more useful it is for saving lives.

Good data can be used to evaluate new treatments, to spot emerging problems in provision, to improve quality and to assess who is most at risk of side effects.

Yet seizing this opportunity without unleashing a privacy apocalypse — and a justified backlash from patients — seems impossible.

Not so, says Professor Ben Goldacre, director of Oxford University’s Bennett Institute for Applied Data Science.

Goldacre recently led a review into the use of UK healthcare data for research, which proposed a solution. “It’s almost unique,” he told me.

“A genuine opportunity to have your cake and eat it.”

The British government loves such cakeism, and seems to have embraced Goldacre’s recommendations with gusto.

At the moment, we have the worst of both worlds: researchers struggle to access data because the people who have patient records (rightly) hesitate to share them.

Yet leaks are almost inevitable because there is patchy oversight over who has what data, when.

What does the Goldacre review propose? Instead of emailing millions of patient records to anyone who promises to be good, the records would be stored in a secure data warehouse.

An approved research team that wants to understand, say, the severity of a new Covid variant in vaccinated, unvaccinated and previously infected individuals, would write the analytical code and test it on dummy data until it was proved to run successfully.

When ready, the code would be submitted to the data warehouse, and the results would be returned.

The researchers would never see the underlying data.

Meanwhile the entire research community could see that the code had been deployed and could check, share, reuse and adapt it.
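
The workflow described above can be sketched in a few lines. This is a toy illustration, not OpenSAFELY's or any real TRE's interface: the `ToyTRE` class, the `analyse` entry point and the small-count threshold are all assumptions made for the example, and every record is synthetic. The point is the shape of the exchange. Code goes in, aggregates come out, and the submitted code lands in a log that anyone can read.

```python
from collections import Counter


class ToyTRE:
    """Hypothetical warehouse: holds records privately, releases only aggregates."""

    MIN_COUNT = 5  # assumed disclosure threshold; smaller counts are withheld

    def __init__(self, records):
        self._records = records   # kept inside the warehouse
        self.public_log = []      # submitted code, visible to everyone

    def run(self, source: str) -> dict:
        self.public_log.append(source)
        namespace = {"Counter": Counter}
        exec(source, namespace)   # a real TRE would sandbox this; exec is not a sandbox
        counts = namespace["analyse"](self._records)
        # Release counts only, dropping any small enough to point at individuals.
        return {group: n for group, n in counts.items() if n >= self.MIN_COUNT}


# The researcher's code, written without sight of the real records.
ANALYSIS = '''
def analyse(rows):
    # Count severe cases by vaccination status.
    return Counter(r["status"] for r in rows if r["severe"])
'''

# Synthetic data invented for illustration.
dummy = [{"status": "vaccinated", "severe": True}] * 6
real = (
    [{"status": "vaccinated", "severe": True}] * 8
    + [{"status": "unvaccinated", "severe": True}] * 12
    + [{"status": "previously infected", "severe": True}] * 2
    + [{"status": "vaccinated", "severe": False}] * 30
)

# Step 1: test against dummy data until the code runs cleanly.
print(ToyTRE(dummy).run(ANALYSIS))

# Step 2: submit the same code to the warehouse; only aggregates come back.
tre = ToyTRE(real)
results = tre.run(ANALYSIS)
print(results)
```

The group with only two severe cases is withheld from the released results. That suppression step is our addition to show why returning aggregates is safer than returning rows; the article itself does not describe it.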

This approach is called a “trusted research environment” or TRE.

The concept is not new, says Ed Chalstrey, a research data scientist at The Alan Turing Institute.

The Office for National Statistics has a TRE called the Secure Research Service to enable researchers to analyse data from the census safely.

Goldacre and his colleagues have developed another, called OpenSAFELY. What is new, says Chalstrey, are the huge data sets now becoming available, including genomic data. Anonymisation is just hopeless in such cases, while the opportunity they present is golden. So the time seems ripe for TREs to be used more widely.

The Goldacre review recommends the UK should build more trusted research environments with the fourfold aim of:

- earning the justified confidence of patients,

- letting researchers analyse data without waiting years for permission,

- making the checking and sharing of analytical tools something that happens by design, as well as

- nurturing a community of data scientists.

The NHS has an enviably comprehensive collection of patient records. But could it build TRE platforms? Or would the government just hand the project wholesale to some tech giant?

Top-to-bottom outsourcing would do little for patient confidence or the open-source sharing of academic tools.

The Goldacre review declares “there is no single contract that can pass over responsibility to some external machine. Building great platforms must be regarded as a core activity in its own right.”

Inspiring stuff, even if the history of government data projects is not wholly reassuring.

But the opportunity is clear enough: a new kind of data infrastructure that would protect patients, turbo-charge research and help build a community of healthcare data scientists that could be the envy of the world.

If it works, people will be sending the health secretary notes of appreciation, rather than his own medical records.

Tim Harford’s new book is ‘How to Make the World Add Up’

Originally published at https://www.ft.com on July 1, 2022.

Names mentioned

Professor Ben Goldacre, director of Oxford University’s Bennett Institute for Applied Data Science

Latanya Sweeney, then a graduate student and now a professor at Harvard University

Ed Chalstrey, a research data scientist at The Alan Turing Institute.

RELATED ARTICLE:

Keeping health data secure in a Trustworthy Research Environment

Health e-Research Center

OCTOBER 15, 2018

Health researchers often use personal data in their research. This data is extremely sensitive; it can include confidential information such as patients’ medical history. If the wrong person accessed this information, it would be a severe breach of confidentiality. As a result, we must ensure that all human health data used in research is stored and handled securely. But how do we do this here at HeRC?

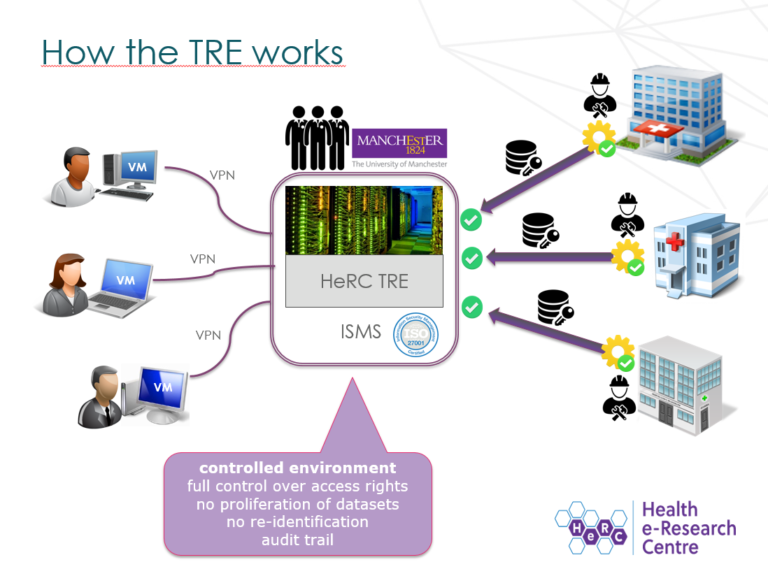

The Health eResearch Centre, based in the School of Health Sciences, keeps its data safe using the Trustworthy Research Environment (also known as the HeRC TRE), a certified data analytics resource. The TRE is used in a large number of the Health eResearch Centre and Connected Health Cities’ (CHC’s) research projects, such as CHC’s exploration of wound care data, a project that uses existing wound care records to improve wound assessment and treatment. The TRE is also used in various projects that are external to HeRC and CHC, and is available to host research on behalf of any organisation.

The TRE uses a number of strict security controls to prevent unauthorised access and misuse of data.

For example, data protected by the TRE is encrypted in transit and at rest, and can only be accessed via virtualised workstations which are isolated from the internet, minimising the risk of data interception.

Connections to these virtual workstations are secured via a VPN service and two-factor authentication.

Each virtual workstation belongs solely to a single project, which further reduces the risk of interception: only the researchers working on that project can access the corresponding data.

The TRE is the only data analytics resource at The University of Manchester that offers a connection to the NHS’s Health and Social Care Network (HSCN, formerly known as N3), a secure, private network that allows researchers quick and efficient access to NHS data, and which allows The University of Manchester to share data and web-based services with staff at the NHS.

Some of the TRE’s data storage, virtual workstations and application servers are hosted on the HSCN, which makes it possible to conduct data processing and analytics without the need to remove the data from the HSCN.

By incorporating some of the TRE infrastructure within the HSCN, researchers can access higher quality, more detailed data. For example, Patient Identifiable Data can be shared using the HSCN, allowing researchers a wider scope of data. Moreover, the TRE board oversees all applications to check that each project has necessary permissions to access this data, referring to the ‘Five safes’: in order to be granted access to data, the project, people involved, data, facility settings and project outputs must all be deemed safe, ethical and responsible.
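
The ‘Five safes’ test is an all-or-nothing gate: a single failed dimension blocks access. A minimal sketch of that logic follows. The `FiveSafesReview` class and its field names are hypothetical, chosen to mirror the five dimensions listed above, and do not represent the TRE board's actual process or tooling.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class FiveSafesReview:
    """Hypothetical record of a board decision on one application."""

    safe_project: bool
    safe_people: bool
    safe_data: bool
    safe_settings: bool
    safe_outputs: bool

    def failed_checks(self) -> list[str]:
        """Names of the dimensions that were not judged safe."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def approved(self) -> bool:
        """Access is granted only when all five dimensions pass."""
        return not self.failed_checks()


# Illustrative application that fails on facility settings alone.
review = FiveSafesReview(
    safe_project=True,
    safe_people=True,
    safe_data=True,
    safe_settings=False,
    safe_outputs=True,
)
print(review.approved(), review.failed_checks())
```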