Health Transformation Institute (HTI)

Institute for Health Strategy, Digital Health, and Continuous Health Transformation

Joaquim Cardoso MSc

Chief Research and Strategy Officer (CRSO)

May 18, 2023

Source:

Towards Expert-Level Medical Question Answering with Large Language Models

arXiv

Karan Singhal∗,1, Tao Tu∗,1, Juraj Gottweis∗,1, Rory Sayres∗,1, Ellery Wulczyn1, Le Hou1, Kevin Clark1, Stephen Pfohl1, Heather Cole-Lewis1, Darlene Neal1, Mike Schaekermann1, Amy Wang1, Mohamed Amin1, Sami Lachgar1, Philip Mansfield1, Sushant Prakash1, Bradley Green1, Ewa Dominowska1, Blaise Aguera y Arcas1, Nenad Tomasev2, Yun Liu1, Renee Wong1, Christopher Semturs1, S. Sara Mahdavi1, Joelle Barral1, Dale Webster1, Greg S. Corrado1, Yossi Matias1, Shekoofeh Azizi†,1, Alan Karthikesalingam†,1, and Vivek Natarajan†,1

1 Google Research, 2 DeepMind

16 May 2023

Originally published at https://arxiv.org

Recent artificial intelligence (AI) systems have reached milestones in “grand challenges” ranging from Go to protein-folding.

- The capability to retrieve medical knowledge, reason over it, and answer medical questions comparably to physicians has long been viewed as one such grand challenge.

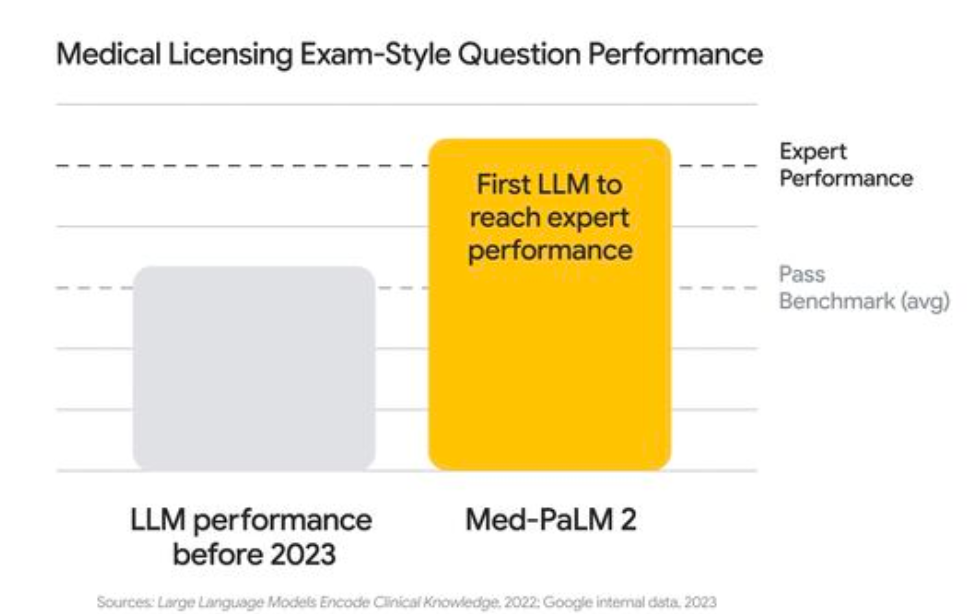

Large language models (LLMs) have catalyzed significant progress in medical question answering; …

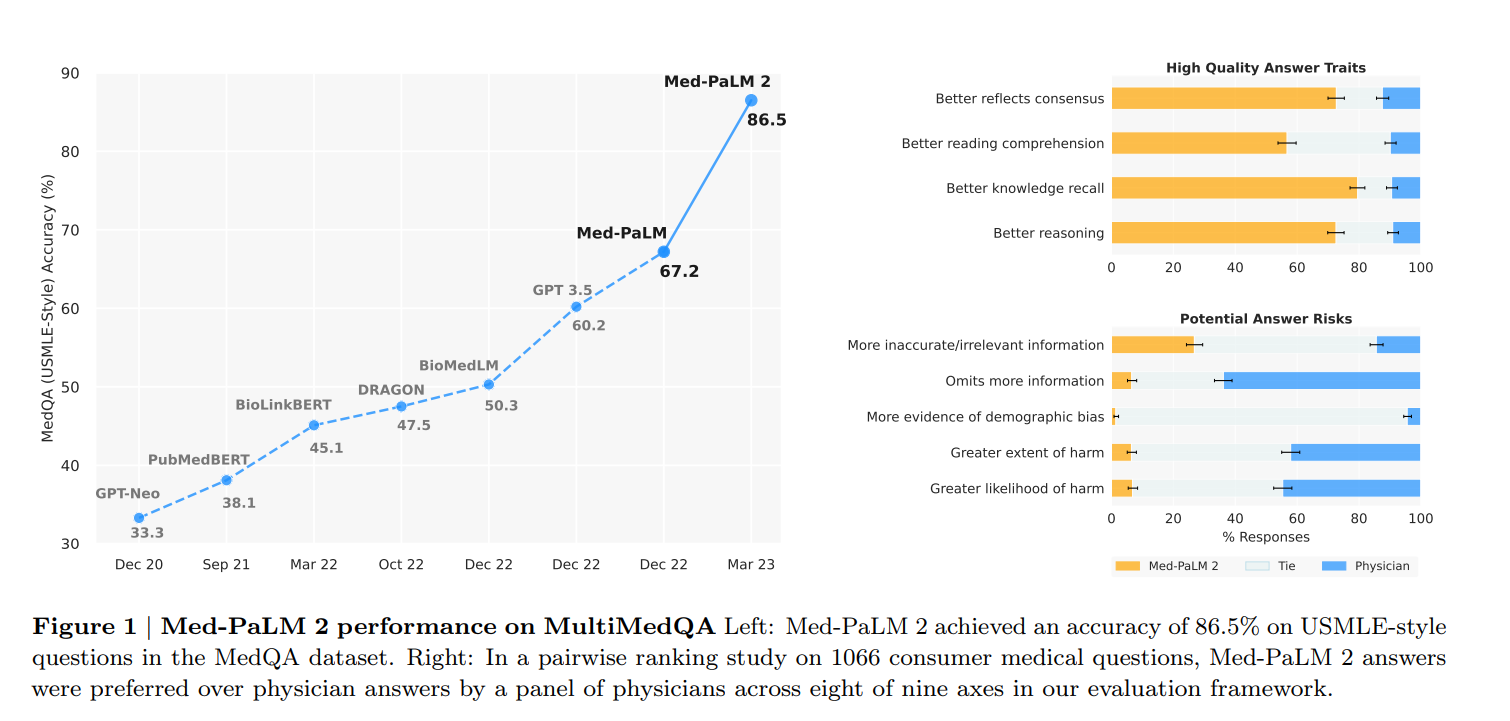

- … Med-PaLM was the first model to exceed a “passing” score on US Medical Licensing Examination (USMLE)-style questions, with a score of 67.2% on the MedQA dataset.

However, this and other prior work suggested significant room for improvement, especially when models’ answers were compared to clinicians’ answers.

Here the authors present Med-PaLM 2, which bridges these gaps by leveraging a combination of base LLM improvements (PaLM 2), medical domain finetuning, and prompting strategies including a novel ensemble refinement approach.
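The summary names ensemble refinement without describing it. As a rough illustration only, the sketch below assumes the two-stage reading of the technique: sample several reasoning paths, then condition the model on those drafts to produce refined answers, and take a plurality vote. The `Sampler` interface, the prompt wording, the `Answer:` line format, the sample counts, and the toy sampler are all hypothetical stand-ins, not the authors' implementation or any real model API.

```python
import itertools
from collections import Counter
from typing import Callable

# Hypothetical model interface: (prompt, temperature) -> generated text whose
# last line is "Answer: <option>". This is an assumption, not a real API.
Sampler = Callable[[str, float], str]


def extract_answer(generation: str) -> str:
    """Return the option on the final 'Answer: X' line (assumed format)."""
    return generation.strip().splitlines()[-1].removeprefix("Answer:").strip()


def ensemble_refine(question: str, sample: Sampler,
                    n_first: int = 3, n_second: int = 5,
                    temperature: float = 0.7) -> str:
    # Stage 1: sample several candidate reasoning paths stochastically.
    drafts = [sample(question, temperature) for _ in range(n_first)]

    # Stage 2: condition on the question plus all drafts and sample
    # refined answers several times.
    refine_prompt = (
        question
        + "\n\nCandidate reasoning:\n"
        + "\n---\n".join(drafts)
        + "\n\nRefined answer:"
    )
    refined = [sample(refine_prompt, temperature) for _ in range(n_second)]

    # Final step: plurality vote over the refined answers.
    votes = Counter(extract_answer(r) for r in refined)
    return votes.most_common(1)[0][0]


def make_toy_sampler() -> Sampler:
    """Deterministic stand-in for a language model, for demonstration only."""
    first_stage = itertools.cycle(["A", "B", "B"])

    def sample(prompt: str, temperature: float) -> str:
        if "Candidate reasoning:" in prompt:
            # Toy refinement: echo the most common answer among the drafts.
            seen = [line.removeprefix("Answer:").strip()
                    for line in prompt.splitlines()
                    if line.startswith("Answer:")]
            choice = Counter(seen).most_common(1)[0][0]
        else:
            choice = next(first_stage)
        return f"Some reasoning.\nAnswer: {choice}"

    return sample


if __name__ == "__main__":
    print(ensemble_refine("Which option is correct?", make_toy_sampler()))
```

With a real model, `sample` would wrap a temperature-sampled generation call; the toy sampler exists only so the control flow can be run end to end.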

- Med-PaLM 2 scored up to 86.5% on the MedQA dataset, improving upon Med-PaLM by over 19 percentage points and setting a new state-of-the-art.

- The authors also observed performance approaching or exceeding state-of-the-art across MedMCQA, PubMedQA, and MMLU clinical topics datasets.

- They performed detailed human evaluations on long-form questions along multiple axes relevant to clinical applications.

- In pairwise comparative ranking of 1066 consumer medical questions, physicians preferred Med-PaLM 2 answers to those produced by physicians on eight of nine axes pertaining to clinical utility.
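The MedQA gain quoted above is easiest to read as an absolute difference in accuracy (86.5% versus 67.2%). The short sketch below works through that arithmetic and, for comparison, two other common ways of expressing the same change. The function name `score_gains` is illustrative, and the relative and error-reduction figures are derived here from the two quoted scores rather than reported in the source.

```python
def score_gains(old_pct: float, new_pct: float) -> tuple[float, float, float]:
    """Compare two accuracy scores given in percent.

    Returns (percentage-point gain, relative gain in %, error reduction in %),
    each rounded to one decimal place.
    """
    points = new_pct - old_pct                    # absolute difference
    relative = points / old_pct * 100             # gain relative to old score
    error_cut = points / (100 - old_pct) * 100    # share of old errors removed
    return round(points, 1), round(relative, 1), round(error_cut, 1)


if __name__ == "__main__":
    # Med-PaLM (67.2%) versus Med-PaLM 2 (up to 86.5%) on MedQA.
    print(score_gains(67.2, 86.5))  # (19.3, 28.7, 58.8)
```

Read this way, "over 19%" corresponds to 19.3 percentage points, which is roughly a 29% relative improvement in accuracy.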