thehealthtransformation.foundation

Joaquim Cardoso MSc

January 29, 2024

This executive summary is based on the article “Segment anything in medical images” by Jun Ma, Yuting He, Feifei Li, Lin Han, Chenyu You and Bo Wang, published in Nature Communications on January 22, 2024.

What is the message?

Medical image segmentation is a critical aspect of clinical applications, aiding in disease diagnosis, treatment planning, and monitoring.

While deep learning models have shown promise, most are built for a single task, which limits their wider clinical use.

The article introduces MedSAM, a foundation model designed for universal medical image segmentation, showcasing remarkable performance across diverse tasks.

ONE PAGE SUMMARY

What are the key points?

Current Limitations: Many existing medical image segmentation models are task-specific, hindering their generalizability to new tasks or imaging data.

Segmentation Foundation Models: Inspired by foundation models for natural image segmentation, MedSAM aims to overcome these limitations with a single model that handles many different segmentation tasks.

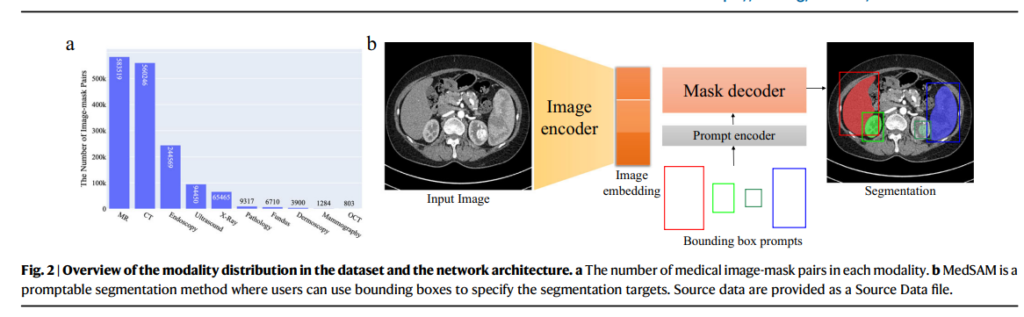

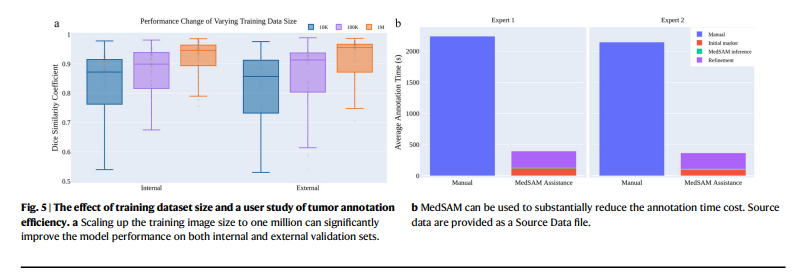

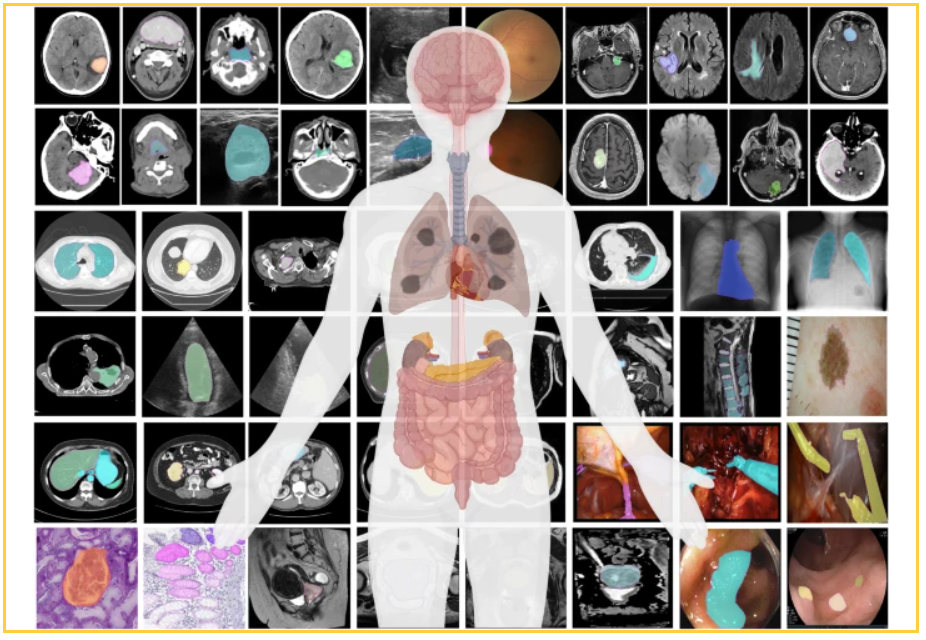

Dataset and Architecture: MedSAM is trained on a large-scale dataset comprising over one million medical image-mask pairs, covering diverse modalities, anatomical structures, and pathological conditions. The model employs a promptable 2D segmentation approach, enhancing adaptability to user-specified tasks.
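The summary does not say what form the prompt takes. The sketch below assumes a bounding-box prompt, one common choice for promptable segmentation, and shows how such a box could be derived from a rough 2D mask of the target. The function name `box_prompt_from_mask` and the `margin` parameter are hypothetical and are not taken from the article or from any MedSAM code.

```python
from typing import List, Tuple

Mask = List[List[int]]  # 2D binary mask: 1 = target pixel, 0 = background


def box_prompt_from_mask(mask: Mask, margin: int = 0) -> Tuple[int, int, int, int]:
    """Return (x_min, y_min, x_max, y_max) enclosing all foreground pixels.

    `margin` (hypothetical parameter) pads the box by that many pixels,
    clipped to the image bounds.
    """
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for row in mask for x, value in enumerate(row) if value]
    if not rows:
        raise ValueError("mask has no foreground pixels")
    height, width = len(mask), len(mask[0])
    x_min = max(min(cols) - margin, 0)
    y_min = max(min(rows) - margin, 0)
    x_max = min(max(cols) + margin, width - 1)
    y_max = min(max(rows) + margin, height - 1)
    return x_min, y_min, x_max, y_max


# Toy 4x5 slice with a small target in the middle.
example_mask = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 0, 0],
    [0, 0, 0, 0, 0],
]
print(box_prompt_from_mask(example_mask))  # (1, 1, 3, 2)
```

In a promptable workflow, a box like this would be passed to the model together with the 2D image, and the model would return a mask for the region inside it.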

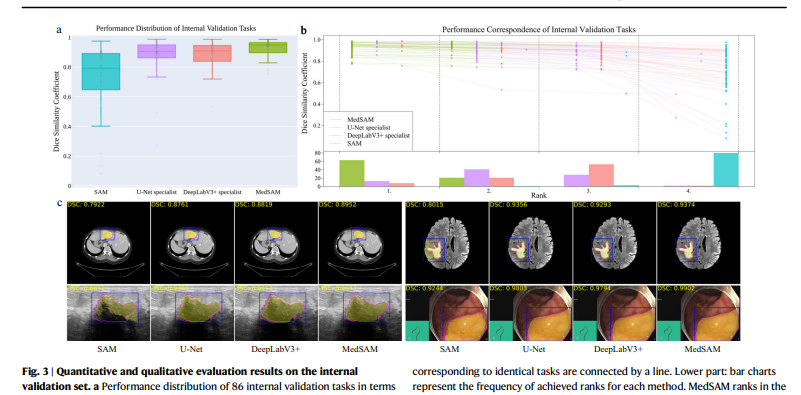

Performance Evaluation: MedSAM is extensively evaluated on internal and external validation tasks, consistently outperforming state-of-the-art segmentation models and rivaling specialist models for specific modalities.

Generalization Ability: MedSAM demonstrates robust generalization abilities, excelling in tasks involving unseen targets and modalities, showcasing its potential as a versatile tool for medical image segmentation.

What are the key statistics?

Dataset: The training dataset comprises 1,570,263 medical image-mask pairs, spanning 10 imaging modalities, over 30 cancer types, and diverse imaging protocols.

Performance: Across 86 internal and 60 external validation tasks, MedSAM consistently outperforms the state-of-the-art segmentation foundation model and matches or surpasses specialist models.
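The summary does not name the metric behind these comparisons. Segmentation quality is commonly scored with the Dice similarity coefficient, which measures overlap between a predicted mask and a reference mask. The sketch below is a minimal illustration of that metric under this assumption, using toy masks rather than data from the article.

```python
from typing import List

Mask = List[List[int]]  # 2D binary mask: 1 = target pixel, 0 = background


def dice_coefficient(pred: Mask, truth: Mask) -> float:
    """Dice = 2 * |pred AND truth| / (|pred| + |truth|), in the range [0, 1]."""
    intersection = sum(
        1
        for pred_row, truth_row in zip(pred, truth)
        for p, t in zip(pred_row, truth_row)
        if p and t
    )
    pred_size = sum(1 for row in pred for p in row if p)
    truth_size = sum(1 for row in truth for t in row if t)
    if pred_size + truth_size == 0:
        return 1.0  # convention chosen here: two empty masks agree perfectly
    return 2.0 * intersection / (pred_size + truth_size)


# Toy masks: 3 pixels each, 2 of them shared.
pred = [[0, 1, 1], [0, 1, 0]]
truth = [[0, 1, 0], [0, 1, 1]]
print(round(dice_coefficient(pred, truth), 4))  # 0.6667
```

A score of 1.0 means the two masks coincide exactly, and 0.0 means they share no pixels.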

Annotation Efficiency: In a human annotation study on tumor segmentation, MedSAM reduced annotation time by 82.37% to 82.95%.
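A reduction percentage of this kind is usually computed as the time saved divided by the original time. The sketch below shows that arithmetic. The timings are hypothetical values chosen only for illustration and are not measurements from the study.

```python
def time_reduction_percent(manual_seconds: float, assisted_seconds: float) -> float:
    """Percentage of annotation time saved relative to fully manual annotation."""
    if manual_seconds <= 0:
        raise ValueError("manual_seconds must be positive")
    return 100.0 * (manual_seconds - assisted_seconds) / manual_seconds


# Hypothetical timings (not from the article): 600 s manual, 105 s assisted.
print(time_reduction_percent(600, 105))  # 82.5
```

In other words, a reduction of roughly 82% means the assisted workflow takes less than one fifth of the fully manual annotation time.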

What are the key examples?

Task Diversity: MedSAM successfully handles segmentation tasks with diverse targets, such as liver tumors in CT images, brain tumors in MR images, breast tumors in ultrasound images, and polyps in endoscopy images.

Modalities: The model excels across various imaging modalities, including CT, MRI, endoscopy, ultrasound, pathology, fundus, dermoscopy, mammography, and optical coherence tomography (OCT).

Conclusion

MedSAM emerges as a groundbreaking foundation model for medical image segmentation, showcasing unprecedented versatility and performance across diverse tasks and modalities.

Its potential to accelerate diagnostic and therapeutic tools and contribute to enhanced patient care positions it as a transformative tool in the field of medical imaging analysis.

Despite certain limitations, MedSAM’s ability to learn from a large-scale dataset and adapt to new tasks highlights its significance in advancing universal medical image segmentation.


INFOGRAPHIC