Health Strategy Institute (HSI)

multidisciplinary institute for health strategy and digital health

Joaquim Cardoso MSc

Chief Researcher and Editor; Chief Strategy Officer (CSO); and Senior Advisor for Boards and C-Level

June 20, 2023

Key takeaways:

Surgical robots, particularly those used in keyhole surgery, have transformed medicine and are now used in hospitals worldwide.

- Surgeons using robotic systems experience less physical strain and require less training time.

- Robots enable surgeons to perform more complex operations that may have required open surgery.

Engineers hope that combining robotics with AI and other technologies will let machines surpass the skills of human surgeons, producing more consistent results with fewer errors.

- The Smart Tissue Autonomous Robot (Star) demonstrated the potential for robotic autonomy by suturing the ends of a severed intestine in pigs using keyhole surgery.

- Surgical robotics provides an opportunity to collect vast amounts of data, which can be used to improve outcomes and standardize results.

Impending AI-specific regulation adds to the cost and difficulty of developing and gaining approval for autonomous surgical robots.

- Telesurgery has been performed in research settings, but in real-world use an unstable network connection could cause the robot to harm the patient.

- Technological progress in the operating room is expected to come in the form of minor enhancements to the existing model of practice.

Surgical robots can be cost-prohibitive, but their shorter recovery times may reduce overall costs for hospitals.

- Robotic systems have made it easier for surgeons to perform complex procedures while minimizing scarring for patients, leading to faster recovery times.

DEEP DIVE

Robot surgeons provide many benefits, but how autonomous should they be?

Keyhole surgery using robotic arms has transformed medicine. But the next generation of advanced robotics might be able to surpass the skills of surgeons.

The Guardian

Charlie Metcalfe

Sun 18 Jun 2023

Neil Thomas wished he could have been awake during the operation to remove a 6 cm cancerous tumour from his colon. He was one of the first people to go under the scalpel of Cardiff and Vale University Health Board’s new robotic systems in June 2022. And, as the founder of a software company, the technology interested him.

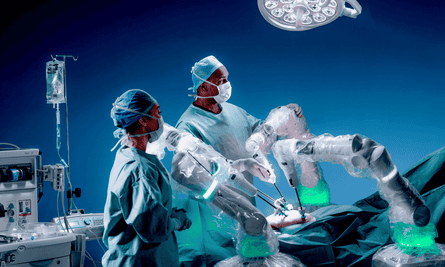

Thomas’s surgeon, James Ansell, would once have stooped over his patient’s body to perform the operation. Instead, he stood behind a console on another side of the theatre wearing 3D glasses. His hands grasped two joysticks, which controlled the four robotic arms that huddled around Thomas’s unconscious body.

“My colleague said to me the other day that this feels like cheating,” Ansell says. “We’ve done it for so many years: stood at the bedside at an awkward angle, sweating because it’s really physically demanding surgery. [Now,] sitting down, there’s no pressure on the surgeon. It’s very straightforward.”

Robots have revolutionised the practice of surgery since their introduction to operating theatres in 2001. They can now be found in hospitals all across the world. The most prolific device, the Da Vinci, is used in 1.5m operations every year, according to its California-based manufacturer Intuitive Surgical.

Now engineers are combining robotics with AI and other novel technologies to herald another new era for surgery, and this time the surgeon’s role in the operating theatre may change altogether.

Although robots are put to a variety of tasks in surgery, their use in laparoscopy, otherwise known as keyhole surgery, has attracted the most attention within and outside medicine. Because keyhole surgery is performed through smaller incisions, it reduces the chance that patients catch infections and shortens the time they need to recover.


Without robots, keyhole surgery requires a very high level of skill. Surgeons need to operate at awkward angles, moving their hands in the opposite direction to that in which they want their instruments to move inside the body. With robots, surgeons can perform more complex operations that might otherwise have demanded open surgery, they suffer less physical strain, and they require less training time. Moreover, they are getting better at using the robots.

“Some of those patients who have ultra-advanced diseases involving blood vessels at the back of the pelvis might still get an open operation,” says Deena Harji, a colorectal surgeon in Manchester, “but we’re starting to see some very early case studies coming out where they’re starting to have robotic approaches applied to them, at least in part. When robotics started 20 years ago, that group would not have been eligible for robotic operation. But as we have developed experience and knowledge, we can offer really complex patients robotic surgery.”

The Da Vinci Xi surgical robot being used in a practice exercise. Photograph: Dpa Picture Alliance/Alamy

Surgeons are limited by their physical capacity, and their minds are limited in their potential to learn and improve. That’s why engineers are hoping robotic systems combined with AI might be able to surpass the skills of human surgeons to produce more consistent results, with fewer errors.

Last year, engineers at Johns Hopkins University in the US came one step closer to realising that goal. In what they described as one of the most delicate procedures in the practice of surgery, their Smart Tissue Autonomous Robot (Star) sutured together the ends of a severed intestine in four pigs while the animals were under anaesthetic. According to the engineers, it performed better than a human surgeon would have. “Our findings show that we can automate one of the most intricate and delicate tasks in surgery,” Axel Krieger, an assistant professor of mechanical engineering and the project’s director, said at the time.

The Star’s procedure was not the first time a robot had performed with a level of autonomy in surgery. The TSolution-One device (formerly called RoboDoc), for example, is FDA-approved to prepare human limbs for joint replacements according to a surgeon’s plan. What made the Star’s procedure special was that it performed its task using keyhole surgery, a world first.

Surgical robotics presents a good opportunity for engineers to introduce autonomy because of the vast volume of data that devices can collect.

An intelligent system, once developed, can use this data to teach itself. In theory, it could become better with each operation that it performs as it gathers more and more data. This could help healthcare organisations “standardise” the results of operations.

Mark Slack, the chief medical officer at CMR Surgical, which manufactures another surgical robot, Versius, says that manufacturers have failed to exploit this data until now. That’s why they and researchers such as those involved in the Star project are scrambling to collect and process as much as possible. “Data, data, data,” Slack says. “This data has had significant untapped potential.”

Despite the Star team’s success, it is still too early to expect autonomous surgery in hospitals any time soon. Engineers talk about “levels of autonomy”: for a robotic device, the question is not whether it is autonomous but how autonomous it can be. The Star system performed only a small section of a complete surgery without human help, and it even needed humans to apply a fluorescent marker to guide its movements. “You’re not supposed to call it autonomous surgery,” Tamás Haidegger, an associate professor of intelligent robotics at Óbuda University in Budapest, says. “This is automating one particular surgical subtask.”

Haidegger draws another distinction that he considers important: between the complexity of a research system such as the Star and that of the devices used in hospitals. Standard laboratory best practice in research environments often falls short of the safety and design standards of clinical settings, he says.

For use in clinical environments, manufacturers need to be able to explain exactly how their devices work, which continues to prove a challenge for people who develop AI. There’s also the impending introduction of AI-specific regulation that governments across the world, including the UK and EU, are developing. Autonomous surgical robots will need to comply with those too.

According to Haidegger, this all amounts to a very expensive process for manufacturers to prove that their devices meet the regulatory requirements.

Each device needs to gain approval for each new field of surgery, one at a time, which has already slowed the adoption of the human-operated robots used today. It will take a lot more work for a commercial manufacturer to decide that the potential profit justifies the cost of research and development. “It’s not going to radically change medical devices overnight,” Haidegger says.

Surgeons and engineers alike often say that surgical robotics was born of a US military ambition to perform operations on injured frontline soldiers without placing the surgeons in harm’s way. Decades later, healthcare networks are yet to adopt telesurgery as a common method of practice. For research purposes, however, it has been done. In 2001, for example, a doctor in New York operated on a patient in France in what has become known as the Lindbergh Operation. But such a procedure relies on a wired-line or similarly robust connection, which soldiers would not have on the battlefield. If the connection were lost, or even slowed down, the robot might harm the patient.

There is some hope that faster networks might reduce this risk.

And in 2019, a Chinese hospital claimed to have successfully performed the world’s first telesurgical operation over a wireless 5G network. But according to Jin Kang, a professor of electrical and computer engineering at Johns Hopkins University, the speed or bandwidth of the network makes little difference. “The communications, the internet, the power source — many things could be unstable,” he says. “I think that’s always an issue.”

CMR Surgical’s Versius robot. Photograph: cmrsurgical.com

For now, technological progress in the operating room will probably come in the form of minor enhancements to the existing model of practice. Both Haidegger and Kang, the latter of whom worked on the Star project, believe that machines will help to improve patient outcomes in the shorter term.

Current robots use cameras to provide surgeons with a 3D image, which they can view through a headset or console. Newer devices are enhancing that information with augmented reality visuals. The latest Da Vinci robots, for example, offer a secondary “ultrasound” view. With AI, the robot may even be able to identify and highlight important information that the surgeon might have missed, as is already happening in radiology.

For organisations with strict budgets, including the NHS, the cost of surgical robots has remained a prohibitive factor.

The Da Vinci costs about £1.6m (the company would not confirm a specific price, stating that it depends on the buyer’s individual requirements), and CMR Surgical’s Versius costs between £1.2m and £1.5m. These prices have changed little over the past two decades, and they do not include the added cost of training and maintenance, which can amount to 10% of the initial investment every year.
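As a rough illustration only, assume the Da Vinci’s reported price of about £1.6m, training and maintenance at the upper figure of 10% of that price every year, and a hypothetical five-year period with no other costs. The outlay then adds up as follows:

```latex
\begin{aligned}
\text{Annual training and maintenance} &\approx 0.10 \times \pounds 1.6\text{m} = \pounds 0.16\text{m} \\
\text{Five-year total (assumed horizon)} &\approx \pounds 1.6\text{m} + 5 \times \pounds 0.16\text{m} = \pounds 2.4\text{m}
\end{aligned}
```

On these assumptions, running costs would add half the purchase price again over five years; actual figures depend on the buyer’s individual requirements.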

More NHS trusts are nonetheless beginning to buy them, in the belief that the shorter recovery times associated with robotic surgery can reduce overall costs for hospitals. Jason Dorsett, chief finance officer at Oxford University Hospitals, says that this benefit is particularly pronounced for patients with head and neck cancers, who might otherwise require long post-operative hospital stays. The NHS’s health economics unit is continuing to evaluate this.

Whether they prove cost-effective or not, surgeons agree that robotic systems have made it easier for them to perform more complex procedures, while minimising scarring for their patients. Neil Thomas, the former tech entrepreneur with a tumour that was removed from his colon in June 2022, was able to leave hospital only two days after his operation.

Thomas had been training for an Ironman triathlon at the time of the diagnosis. Three months after the operation, on doctor’s orders, he was able to return to training: first with a one-mile run, and then a few more three days later. The robot used in his operation had left only a small collection of almost imperceptible scars across his abdomen. “You can’t see a thing,” he says. “And recovery, I thought, was excellent.”

Originally published at https://www.theguardian.com on June 18, 2023.

Names mentioned

- Neil Thomas — Founder of a software company

- James Ansell — Surgeon at Cardiff and Vale University Health Board

- Deena Harji — Colorectal surgeon in Manchester

- Axel Krieger — Assistant professor of mechanical engineering at Johns Hopkins University

- Tamás Haidegger — Associate professor of intelligent robotics at Óbuda University in Budapest

- Mark Slack — Chief medical officer at CMR Surgical

- Jin Kang — Professor of electrical and computer engineering at Johns Hopkins University

- Jason Dorsett — Chief finance officer at Oxford University Hospitals