The Economist

This is a republication of the article above, published in The Economist in 2015.

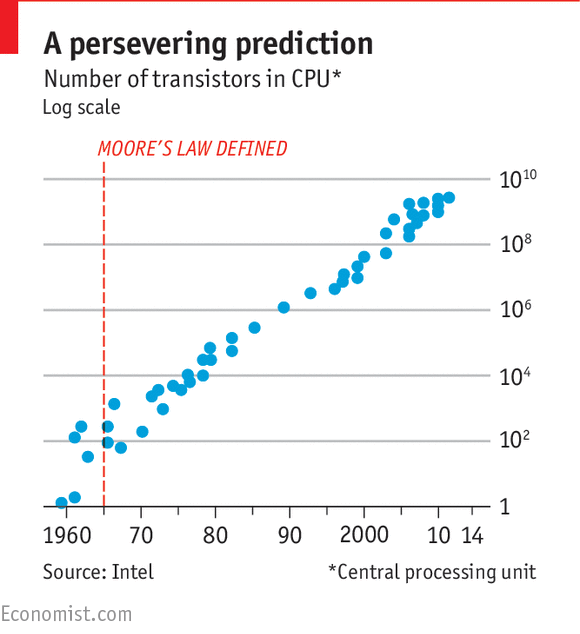

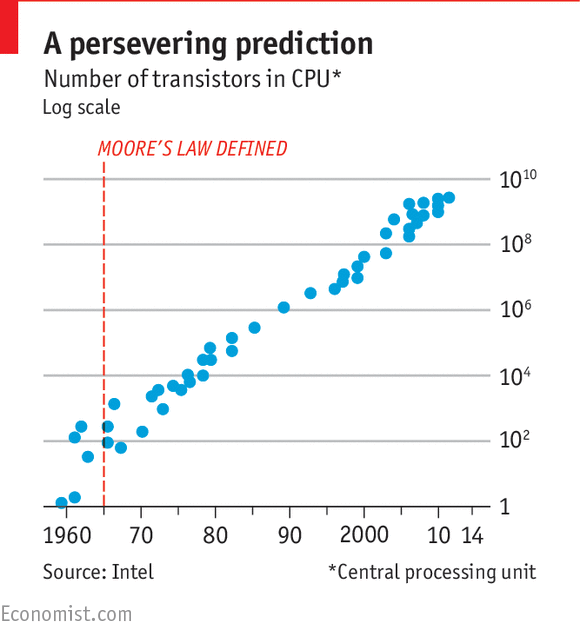

The number of transistors that can be put on a microchip doubles every year or so, and so does the chip's performance.

Many people are vaguely familiar with Moore’s law; we recognise that it has something to do with the tumbling cost of computing power, for instance.

Yet even 50 years after Gordon Moore (pictured), co-founder of Intel, a chipmaker, made his famous prediction, its precise nature and implications remain hard to grasp. What is Moore’s law?

Mr Moore’s prognostications were focused on the microchip (also called an integrated circuit), the computational workhorse of modern computing.

Among the key components on a chip are transistors: tiny electronic switches from which the chip’s logic circuits are constructed, allowing it to process information.

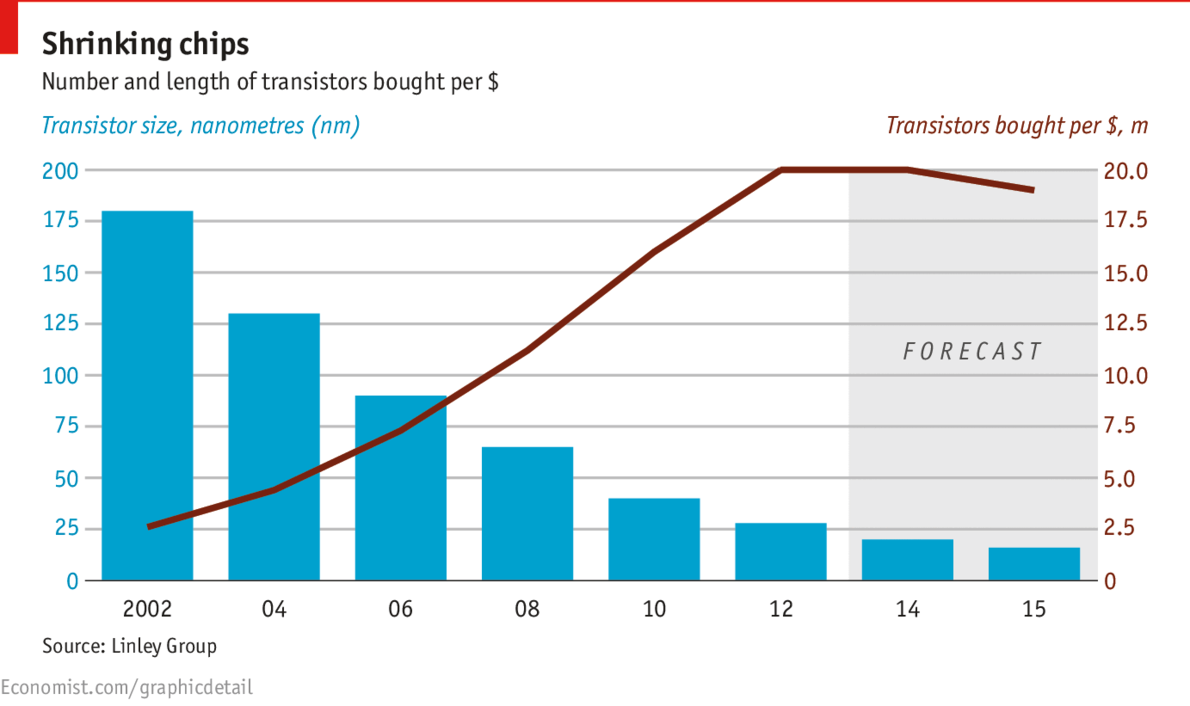

In a paper published on April 19th, 1965, Mr Moore predicted that by shrinking transistors, engineers would be able to double the number that fit on a chip each year.

(He subsequently amended his forecast to once every two years, which is how the “law” is usually stated.)

A doubling at regular intervals is exponential growth: not an easy concept to grasp for linear-thinking humans.
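To make the gap between exponential and linear intuition concrete, the short sketch below compares an idealised two-year doubling with a "linear" guess that adds the starting amount once per period. The function names are hypothetical and the output is illustrative only: it models the idealised law, not actual historical transistor counts.

```python
def growth_factor(years: float, doubling_period: float = 2.0) -> float:
    """Factor by which a quantity grows if it doubles every `doubling_period` years."""
    return 2.0 ** (years / doubling_period)


def linear_factor(years: float, doubling_period: float = 2.0) -> float:
    """Hypothetical 'linear thinker' estimate: add the initial amount once per period."""
    return 1.0 + years / doubling_period


# Compare the two views over 10, 20 and 50 years (1965 to 2015 is 50 years).
for years in (10, 20, 50):
    print(f"{years} years: exponential x{growth_factor(years):,.0f}, "
          f"linear x{linear_factor(years):,.0f}")
```

Over 50 years the idealised doubling gives a factor of 2^25, about 33.5 million, while the linear guess gives just 26.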

Intel reckons that the transistors it now produces run 90,000 times more efficiently and are 60,000 times cheaper than the first one it produced in 1971.

Were a car that uses 15 litres of petrol per 100km and costs $15,000 to improve its performance in similar fashion, it would consume less than two tenths of a millilitre of petrol per 100km and cost 25 cents.
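The car comparison is simple arithmetic, sketched below under the assumption that the efficiency gain divides fuel consumption and the cost reduction divides the price, both applied directly to the car's figures. The constant names are illustrative; the numbers are Intel's figures and the car's figures from the text.

```python
EFFICIENCY_GAIN = 90_000  # transistors run 90,000 times more efficiently than in 1971
COST_REDUCTION = 60_000   # ... and are 60,000 times cheaper

car_fuel_l_per_100km = 15.0
car_price_usd = 15_000.0

# Convert litres to millilitres, then divide by the efficiency gain.
improved_fuel_ml_per_100km = car_fuel_l_per_100km * 1000 / EFFICIENCY_GAIN
# Divide the price by the cost reduction.
improved_price_usd = car_price_usd / COST_REDUCTION

print(f"{improved_fuel_ml_per_100km:.3f} mL per 100km")  # 0.167 mL per 100km
print(f"${improved_price_usd:.2f}")                      # $0.25
```

About 0.17 millilitres per 100km, under two tenths of a millilitre, at a price of 25 cents (a quarter of a dollar).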

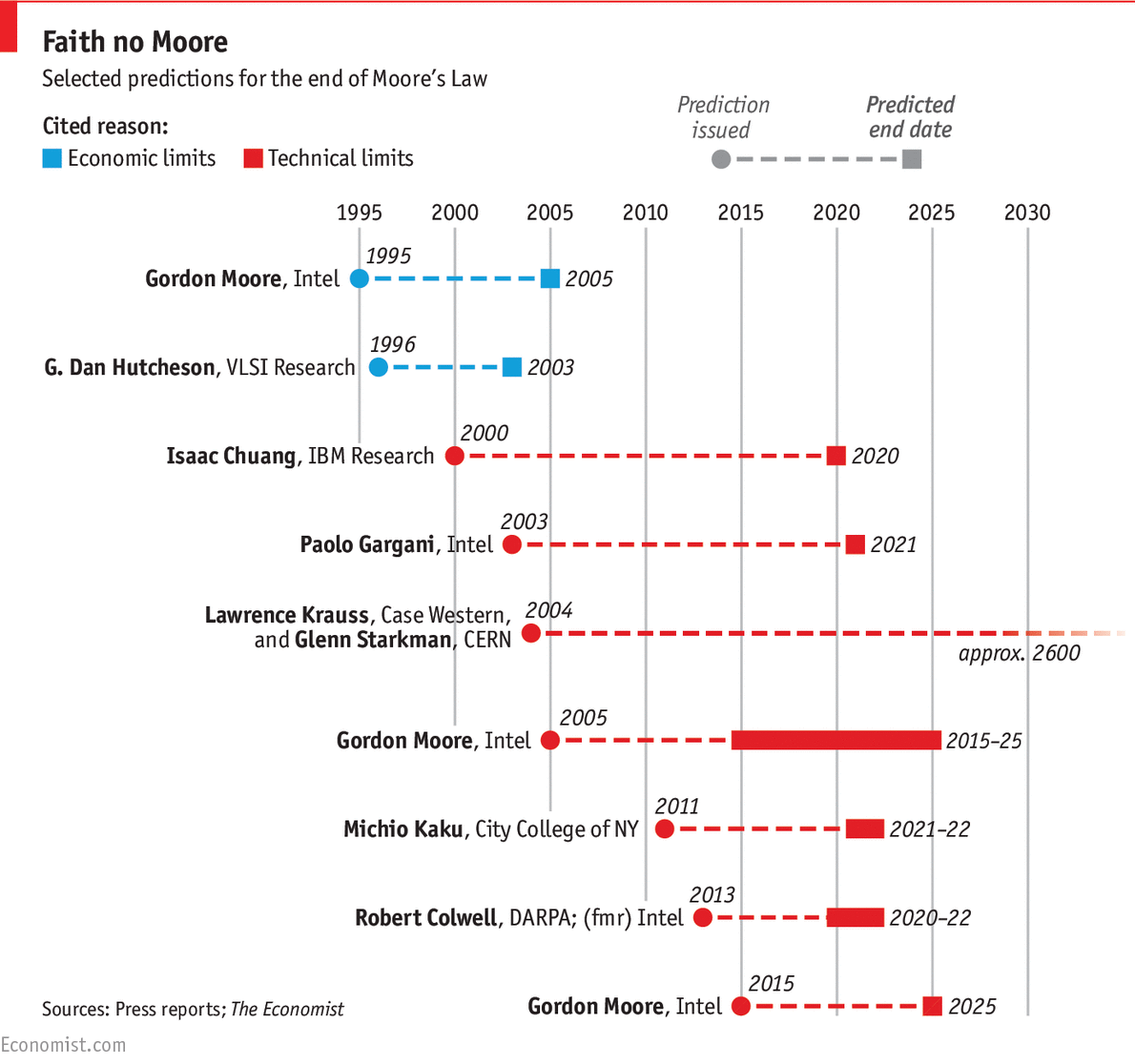

Yet in our physical world exponential growth eventually comes to an end.

Predictions of the death of Moore’s law are nearly as old as the forecast itself.

Still, the law has a habit of defying the sceptics, to the great good fortune of those of us enjoying tiny, powerful consumer electronics.

Signs are at last accumulating, however, that the law is running out of steam. It is not so much that physical limits are getting in the way, though producing transistors only 14 nanometres (billionths of a metre) wide, the current state of the art, can be quite tricky.

Intel says that it can keep the law going for at least another ten years, eventually slimming its transistors down to 5nm, about the thickness of a cell membrane.

Other strategies provide further ways to boost performance: Intel has also started to stack components, for example, in effect building 3D chips.

If Moore’s law has started to flag, it is mainly because of economics.

As originally stated by Mr Moore, the law was not just about reductions in the size of transistors, but also cuts in their price.

A few years ago, when transistors 28nm wide were the state of the art, chipmakers found their design and manufacturing costs beginning to rise sharply. New “fabs” (semiconductor fabrication plants) now cost more than $6 billion.

In other words: transistors can be shrunk further, but they are now getting more expensive.

And with the rise of cloud computing, the emphasis on the speed of the processor in desktop and laptop computers is no longer so relevant.

The main unit of analysis is no longer the processor, but the rack of servers or even the data centre.

The question is not how many transistors can be squeezed onto a chip, but how many can be fitted economically into a warehouse. Moore’s law will come to an end; but it may first make itself irrelevant.

Originally published at https://www.economist.com on April 19, 2015.