The Health Strategy Review

Management, Engineering and Technology Review

Joaquim Cardoso MSc.

Senior Research and Strategy Officer (CRSO), Chief Editor and Senior Advisor

January 22, 2023

In early 2017, Google research scientists Ashish Vaswani and Jakob Uszkoreit stumbled upon the idea of “self-attention” during a discussion about improving machine translation.

The concept involved analyzing entire sentences at once instead of translating them word by word.
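To make “analyzing entire sentences at once” concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is a simplification under stated assumptions: the three-word sentence and its 2-dimensional vectors are made up for illustration, and the learned query, key and value projections of the real Transformer are replaced by the identity, so each word vector serves as its own query, key and value. What it shows is that every word's output is a weighted mix of all the words in the sentence, and no word's output has to wait for another word's output to be computed first.

```python
import math


def softmax(scores):
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections (a simplification).

    Every output vector is a weighted average of *all* input vectors, so each
    word's new representation draws on the whole sentence.
    """
    d = len(vectors[0])
    outputs, weights = [], []
    for query in vectors:
        # Score this word against every word in the sentence, scaled by sqrt(d).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in vectors
        ]
        w = softmax(scores)
        weights.append(w)
        # Mix the value vectors (here: the inputs themselves) by those weights.
        outputs.append(
            [sum(wj * value[i] for wj, value in zip(w, vectors)) for i in range(d)]
        )
    return outputs, weights


# Hypothetical 2-dimensional embeddings for a made-up three-word sentence.
sentence = {"the": [1.0, 0.0], "river": [0.0, 1.0], "bank": [0.5, 0.5]}
outputs, weights = self_attention(list(sentence.values()))
for word, row in zip(sentence, weights):
    print(word, [round(x, 2) for x in row])
```

Each printed row holds the attention weights one word assigns to every word in the sentence, itself included; because the weights come from a softmax, each row sums to 1.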

Inspired by the film Arrival, the researchers believed this approach could improve machine translation by giving the model more context and, in turn, greater accuracy.

Their experiments confirmed that the idea worked.

Noam Shazeer, a Google veteran, joined the collaboration after overhearing their conversation; together they created the transformer architecture described in the influential 2017 paper “Attention Is All You Need.”

The transformer has since become a cornerstone of AI development, used in a wide range of cutting-edge applications, including Google Search, Google Translate, language models such as ChatGPT and Bard, autocomplete, and speech recognition.

Its versatility extends beyond language: it can also generate patterns in images, computer code, and DNA.

The transformer has fueled innovation across the AI industry: it underpins many generative AI companies and powers a wide range of consumer products.

However, despite its deep expertise in deep learning and AI, Google struggled to retain its talented scientists.

This serendipitous discovery shows how unexpected moments can lead to scientific breakthroughs, and it underscores how hard it is for large companies to nurture entrepreneurialism and launch new products quickly.

The transformer's impact on AI development is a testament to the importance of chance encounters and collaboration in advancing technology and reshaping industries.

This is an executive summary of the article “The Serendipitous Discovery that Revolutionized Artificial Intelligence” by Marcin Frąckiewicz, published on ts2.

Names mentioned

- Ashish Vaswani

- Jakob Uszkoreit

- Noam Shazeer

Reference publication

Attention Is All You Need

Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, Illia Polosukhin

Abstract

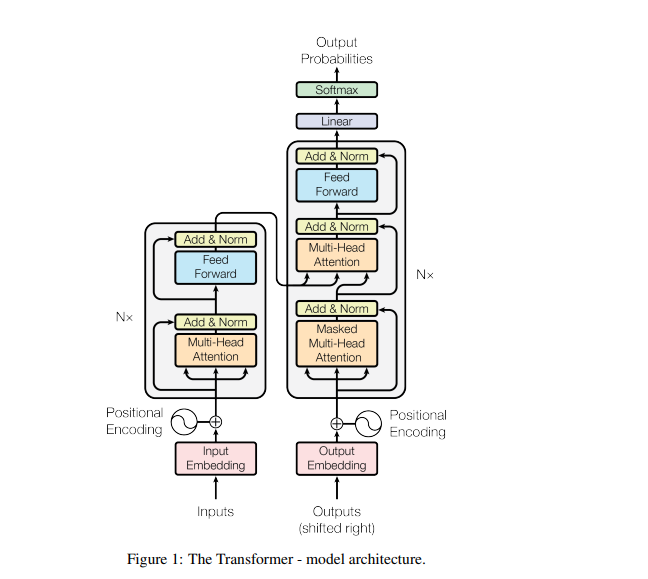

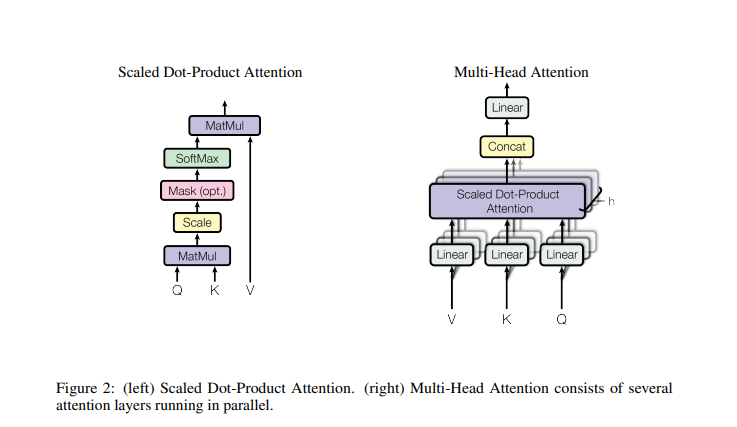

The dominant sequence transduction models are based on complex recurrent or convolutional neural networks in an encoder-decoder configuration. The best performing models also connect the encoder and decoder through an attention mechanism.

We propose a new simple network architecture, the Transformer, based solely on attention mechanisms, dispensing with recurrence and convolutions entirely.
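For reference, the paper defines the attention at the heart of this architecture as scaled dot-product attention, where $Q$, $K$ and $V$ are the query, key and value matrices and $d_k$ is the key dimension. It then runs $h$ of these in parallel as "multi-head attention", using learned projection matrices $W_i^Q$, $W_i^K$, $W_i^V$ and $W^O$. The formulas below are summarized from the paper's definitions:

$$
\begin{aligned}
\mathrm{Attention}(Q, K, V) &= \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V \\
\mathrm{MultiHead}(Q, K, V) &= \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\,W^{O} \\
\text{where}\quad \mathrm{head}_i &= \mathrm{Attention}(QW_i^{Q}, KW_i^{K}, VW_i^{V})
\end{aligned}
$$

The paper's base model uses $h = 8$ heads with $d_k = d_v = 64$. Because no term depends on a previous time step, all positions can be processed in parallel.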

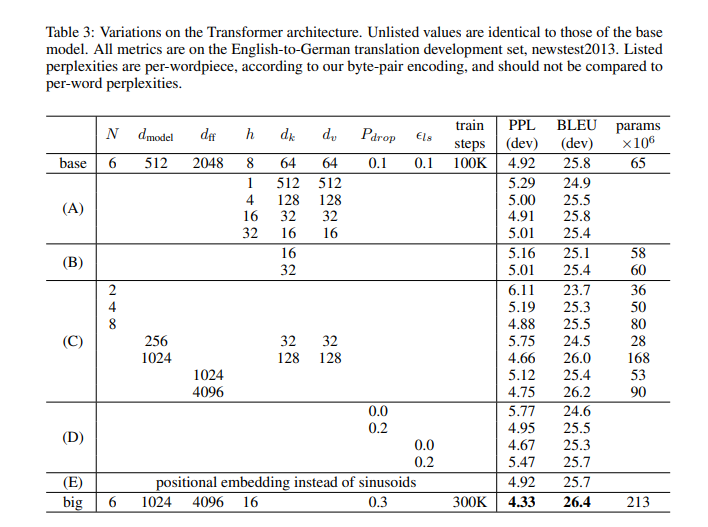

Experiments on two machine translation tasks show these models to be superior in quality while being more parallelizable and requiring significantly less time to train.

Our model achieves 28.4 BLEU on the WMT 2014 English-to-German translation task, improving over the existing best results, including ensembles, by over 2 BLEU.

On the WMT 2014 English-to-French translation task, our model establishes a new single-model state-of-the-art BLEU score of 41.8 after training for 3.5 days on eight GPUs, a small fraction of the training costs of the best models from the literature.

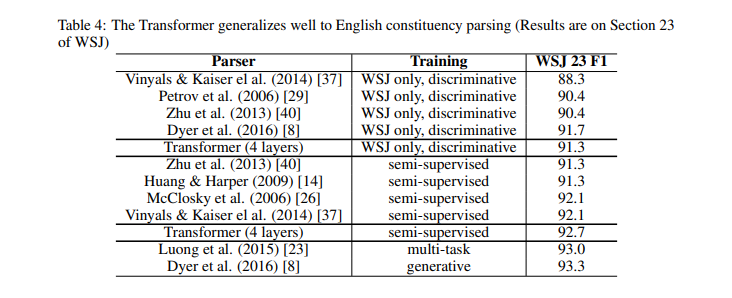

We show that the Transformer generalizes well to other tasks by applying it successfully to English constituency parsing both with large and limited training data.

Selected images