the health strategist

research and strategy institute — for continuous transformation

in value based health, care and tech

Joaquim Cardoso MSc

Chief Researcher & Editor

March 28, 2023

EXECUTIVE SUMMARY

This article examines the decline in teenagers' mental health and the role of Big Tech companies in that decline.

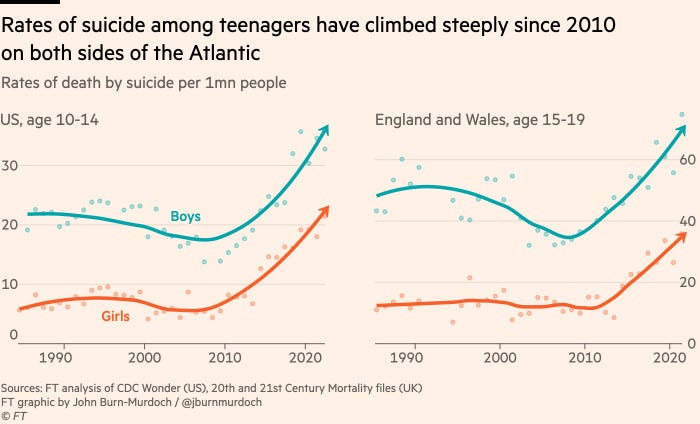

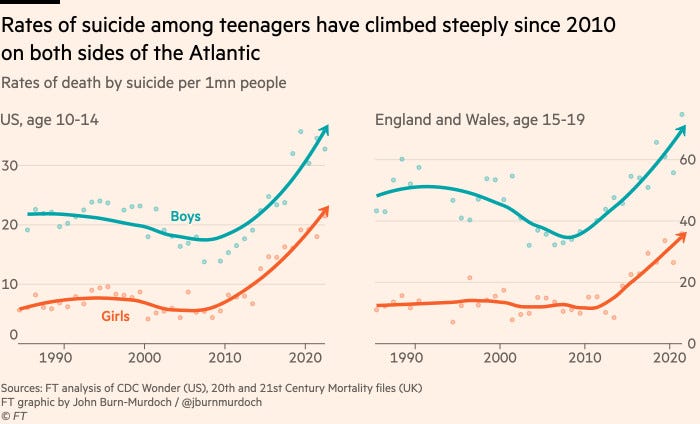

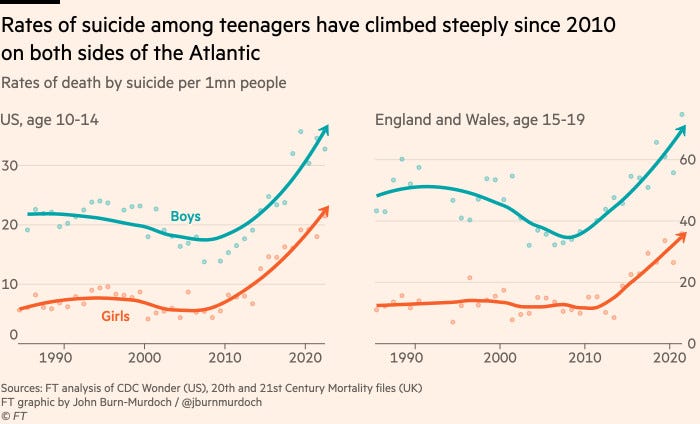

- Suicide among US teenagers aged 10 to 19 increased by 45.5% between 2010 and 2020, according to the Centers for Disease Control and Prevention (CDC), and a CDC survey published in February 2023 found that nearly one in three teenage girls had seriously considered suicide.

- Some academics point to a growing body of research suggesting that the spread of smartphones, high-speed internet and social media apps is rewiring children's brains and driving an increase in eating disorders, depression and anxiety.

- While some argue that the teenage mental health crisis is more nuanced and the evidence is not yet definitive, many parents and legislators blame social media companies, which they say are developing highly addictive products that expose young people to harmful material with real-world consequences.

The article also discusses the 147 product liability lawsuits filed in the US against major social media platforms, as well as increased attention from lawmakers.

- It makes the point that the stakes for social media companies are high as increased regulation could threaten their ad-driven business models, which rely on a large and captivated young audience to thrive.

- Plaintiffs in the personal injury lawsuits filed in federal court in Oakland, California, against Meta, TikTok, Snapchat, and YouTube will argue that the companies know their platforms are harming children's mental health and are suppressing that information.

The plaintiffs allege that these platforms use behavioural and neurobiological techniques similar to those used by the tobacco and gambling industries to get children addicted to their products.

- They are claiming that these techniques cause mental and physical harm to children.

- These features include algorithmically generated endless feeds, intermittent variable rewards, metrics and graphics to exploit social comparison, inadequate age verification protocols, and deficient tools for parents.

- The plaintiffs argue that these platforms promote disconnection, disassociation and resulting mental and physical harms.

Because social media companies are loosely regulated, argues Baroness Beeban Kidron of the 5Rights Foundation, they can get away with more invasive techniques to attract young users.

- The court documents allege that some Meta employees were aware of the harmful effects of their products but ignored the information or sought to undermine it.

- The filing also alleges that Meta's CEO, Mark Zuckerberg, was warned that the company was falling behind in addressing wellbeing issues, including excessive use, bullying and harassment, and that this would increase regulatory risk if left unaddressed.

- Meta says it is working to ensure a "safe and positive experience" for young users and has built about 30 tools, including parental controls, features that encourage teenagers to take breaks, and age-verification technology.

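Other companies have also acted: Snap has introduced parental controls and a wellbeing hub, YouTube offers its YouTube Kids app for under-13s with parental controls, and TikTok has announced a default 60-minute daily screen-time limit for users under 18.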

- As the threat of regulation gathers momentum, social media companies are taking action to address concerns.


DEEP DIVE

The teen mental health crisis: a reckoning for Big Tech

Financial Times

The last minutes of 16-year-old Ian Ezquerra’s life were spent on Snapchat.

Outwardly, the teenager, who had excelled in school and was a member of its swimming team, was a happy young man. But as his social media use increased, so did his anxiety.

His mother, Jennifer Mitchell, was unaware Ian was viewing dangerous content across a variety of platforms that would be unsuitable for adults, let alone impressionable young people.

“There were subtle changes in his behaviour that I didn’t necessarily pick up on at the time. One of these things was him saying something like, ‘I am such a burden,’” says Mitchell, of New Port Richey, Florida.

Help is available

Anyone in the UK affected by the issues raised in this article can contact the Samaritans for free on 116 123

Then, in August 2019, Ian was found dead. The police recorded his death as a suicide, but Mitchell says her son died playing a dark, online challenge pushed his way by powerful algorithms.

Ian’s story adds to the evidence of the worrying decline in the mental health of children. Suicide among those aged between 10 and 19 years old in the US surged by 45.5 per cent between 2010 and 2020, according to the Centers for Disease Control and Prevention. A survey last month from the same government agency found nearly one in three teenage girls had seriously considered taking their own life, up from one in five in 2011.

The reasons for this deterioration in mental wellbeing, however, are less conclusive.

Many parents and legislators put the blame at the door of social media companies who, they say, are developing highly addictive products that expose young people to harmful material that has real-world consequences. Platforms push back, arguing their technology allows people to build relationships that are beneficial for mental health.

But some academics point to a growing body of research that they say is hard to ignore: that the proliferation of smartphones, high-speed internet and social media apps is rewiring children's brains and driving an increase in eating disorders, depression and anxiety.

“Multiple juries are in. They’re all reaching the same conclusion,” says Jonathan Haidt, a social psychologist and professor at New York University Stern School of Business. “When social media or high-speed internet came in, [studies] all find the same story, which is mental health plummets, especially for girls.”

Other academics argue the evidence is not yet definitive and say the teenage mental health crisis is more nuanced.

A lack of consensus has not alleviated mounting scrutiny on Big Tech. Ian’s family are behind one of 147 product liability lawsuits collectively filed in the US against major social media platforms — Facebook, Instagram, TikTok, Snapchat and YouTube.

The looming litigation compounds increased attention from lawmakers. President Joe Biden has accused the industry of running experiments on “our children for profit” and wants Congress to pass laws preventing companies from collecting personal data on underage users.

On Capitol Hill on Thursday, TikTok CEO Shou Zi Chew faced pressure over the safeguarding of young users. Looking on was the grieving family of 16-year-old Chase Nasca, who say the platform exposed him to self-harm videos before his death. "Mr Chew, your company destroyed their lives," one lawmaker told him.

In the UK, ministers are considering amending an online safety bill to include criminal sanctions — including possible jail time — on social media executives who fail to protect children’s safety.

The stakes for social media companies are high as increased regulation could threaten their ad-driven business models, which rely on a large and captivated young audience to thrive.

‘Depression, eating disorders, self-harm’

Hackensack Meridian Health’s Carrier Clinic in New Jersey, a sprawling campus with a residential mental health unit for teenagers, is on the frontline of this crisis.

Waiting lists have increased over the past three years, exacerbated by the pandemic, leading to months-long delays in accessing beds in out-of-home care units. A nearby hospital experienced a 49 per cent increase in paediatric emergency room psychiatric consultations in 2022 compared with the previous year.

“We are seeing a lot of depression, anxiety, suicidal ideation, eating disorders and self-harm,” says Dr Thomas Ricart, chief of adolescent services at Carrier.

“Kids do come in and talk about bullying on social media and using a lot of social media and its impact on them,” he adds. “There is also the pandemic, an increase in stress in general and better screening for mental health.”

While professionals such as Ricart keep an open mind, the research exploring the link between social media and mental health in the nearly two decades since Facebook launched in 2004 is compelling.

In January, a report by experts in psychology and neuroscience at the University of North Carolina said teenagers who habitually checked their social media accounts experienced changes in how their brains — which do not fully develop until around 25 years old — responded to the world, including becoming hypersensitive to feedback from their peers.

A review of 68 studies on the risks of social media use among young people, published in August 2022 in the International Journal of Environmental Research and Public Health, examined 19 papers dealing with depression, 15 with diet and 15 with psychological problems. The more time adolescents spend online, the higher their levels of depression and other adverse consequences, the review notes, especially among the most vulnerable.

But other researchers dispute the assessment of Haidt and his regular co-author Jean Twenge, professor of psychology at San Diego State University, that there is definitive evidence that social media is the primary cause of the youth mental health crisis.

They highlight a lack of scientific data proving a causal link between the rise in social media use and mental health conditions. Some studies, they say, show that teenagers can benefit from social media if they use it in moderation.

“I’m not saying that social media is not part of this [crisis], but we don’t have the evidence yet,” says Dr Amy Orben, leader of the digital mental health group at the University of Cambridge. “It is a lot easier to blame companies than to blame very complex phenomena. But a very complex web of different factors influences mental health.”

Researchers who want to delve deeper into the effects of social media say the answers might be found in closely guarded data held by the companies themselves, which use it to improve their algorithms.

“We need the data,” adds Orben. Without it, she says, “there is no real public accountability”.

The addiction playbook

Though social media companies have so far avoided stringent regulation, a day of reckoning is approaching.

Plaintiffs in the personal injury cases filed in the federal court in Oakland, California, will argue that social media companies know their products are damaging children’s mental health and are suppressing that information.

They allege that Meta, the parent company that owns Facebook and Instagram, and its rivals TikTok, Snapchat and YouTube have borrowed heavily from the behavioural and neurobiological techniques used by the tobacco and gambling industries to get children addicted to their products.

These features, which vary platform to platform, include algorithmically generated, endless feeds to keep users scrolling; intermittent variable rewards that manipulate the dopamine delivery mechanism in the brain to intensify use; metrics and graphics to exploit social comparison; incessant notifications that encourage repetitive account checking; inadequate age verification protocols; and deficient tools for parents that create the illusion of control.

“Disconnected ‘likes’ have replaced the intimacy of adolescent friendships. Mindless scrolling has displaced the creativity of play and sport. While presented as ‘social’, defendants’ products have in myriad ways promoted disconnection, disassociation, and a legion of resulting mental and physical harms,” allege the plaintiffs.

Douglas Westwood, one of the parents bringing the lawsuit, says his daughter got hooked on Instagram within months of receiving her first smartphone, for safety purposes, at nine years old.

“I had no idea that a nine-year-old kid could access a social media site. There is supposed to be an age limit, a minimum of 13,” says Westwood. “There’s no verification. There’s no checking.”

The algorithm bombarded the child with harmful images and videos, including those promoting eating disorders, he says. Her mental health worsened and, by 14, she had entered residential care to receive treatment for anorexia.

His daughter, now 17, is recovering, but is still confronted with harmful content, says Westwood, adding that he does not want to stop her using her smartphone because it is how she communicates with her friends.

It is a frustrating catch-22 for parents now that smartphones and social media are so ubiquitous.

“When [social media platforms] worked out how to monetise attention, they went for it with a ruthlessness for which kids are the collateral damage,” says Baroness Beeban Kidron, chair of children’s digital rights charity 5Rights Foundation.

Because they are loosely regulated, she argues, platforms are able to get away with more invasive techniques to suck young users in.

Court documents allege some Meta employees are aware of the harmful effects of their products, but either disregarded the information or, in some cases, sought to undermine it.

“No one wakes up thinking they want to maximise the number of times they open Instagram that day,” one Meta employee wrote in 2021, according to the filing. “But that’s exactly what our product teams are trying to do.”

An internal study by Meta conducted around June 2020 found that 500,000 Instagram accounts each day involved “inappropriate interactions with children”. Yet child safety was still not considered a priority, the documents say, prompting one employee to remark that if “we do something here, cool. But if we can do nothing at all, that’s fine too.”

The court filing also alleges that Mark Zuckerberg, Meta’s chief executive, was warned the company was falling behind in addressing wellbeing issues, including excessive use, bullying and harassment. This would increase regulatory risk if left unaddressed, one employee said, according to the court documents.

Many of these concerns were highlighted by whistleblower Frances Haugen, a former Facebook employee who accused the company of prioritising profit over public safety. She says Meta continues to resist calls from public health authorities and researchers to collaborate on understanding the mental health issues associated with Instagram and body image, for example.

“Facebook is scared that if they are first movers and come in with all these safety features around compulsive use, around body image issues and depression, people will say, ‘Wow, Instagram is dangerous. Social media is dangerous,’” she says. “They don’t want to admit it.”

Road to regulation

Haugen is right that Meta disputes the notion that social media is fuelling the teen mental health crisis.

“Mental health is complex, it’s individualised, it . . . [involves] many factors,” says Antigone Davis, Meta’s global head of safety.

Nevertheless, she acknowledges the need to ensure a “safe and positive experience” for young users, adding that the company had built about 30 tools including parental controls, features that encourage teenagers to take breaks and age-verification technology.

Meta says its platforms also remove content related to suicide, self-harm and eating disorders. These policies were enforced more vigorously following the death of 14-year-old British schoolgirl Molly Russell, who took her own life in 2017 after viewing thousands of posts about suicide. Meta has since launched a default setting for under-16s that reduces the amount of sensitive content they see and has paused plans for Instagram Kids, a product for under-13s.

Other social media giants are taking action as the threat of regulation gathers momentum.

Snap, the company behind Snapchat, has introduced parental controls and developed a wellbeing hub for users. Jacqueline Beauchere, Snap's global head of platform safety, also says Snapchat differs from other apps because it focuses less on passive scrolling: "We aren't an app that encourages perfection or popularity."

YouTube says it has also “invested heavily” in child-safe experiences, such as its YouTube Kids app for under-13s, which has parental controls.

TikTok, which has grappled with children accidentally harming or, in some cases, killing themselves while taking part in dangerous viral challenges on its short-form video platform, says it has eliminated this type of content from search results. It also recently announced that the accounts of all users under the age of 18 will automatically be set to a 60-minute daily screen time limit, in addition to parental controls.

“Generally speaking, one of our most important commitments is promoting the safety and wellbeing of teens, and we recognise this work is ongoing,” TikTok says.

The changes have not impressed Jennifer Mitchell, who now campaigns to boost awareness of the dangers posed by social media since her son’s death. Only age restrictions backed up with effective verification will protect children, she says. “We have age restrictions on almost everything here in the US,” Mitchell adds. “But if a child wants to open up their own social media account, there’s no verification and it’s as simple as them clicking a button.”

Others, such as Haidt, believe social media accounts should be banned outright for those under 16, with the ban enforced by better age-verification software such as Clear, which requires an official document like a passport or driving licence.

“We know that they make no effort to keep kids under 13 off [their platforms],” adds Haidt. “And that’s where I think their greatest legal liability is.”

Social media companies dispute this. Meta says it removed 1.7mn Instagram accounts and 4.8mn Facebook profiles in the second half of 2021 for failing to meet minimum age requirements. Snapchat removed 700 underage accounts between April 2021 and April 2022, compared to around 2mn by TikTok, according to data reported by Reuters. Snap says the figures were taken out of context and “misrepresented” its work to keep under-13s off the platform.

It also remains unclear whether these users simply set up new accounts.

Bipartisan support

What could tip the balance in parents’ favour is the upcoming legal battle taking on the social media giants.

Any ruling which finds the companies liable for deaths and injuries caused to children could force the mass removal of online content and damage revenues.

In 2022, revenues at Meta and Snap grew at their slowest pace since the companies went public in 2012 and 2017 respectively, while TikTok slashed its global revenue target by almost $2bn.

The social media companies intend to rely on Section 230 of the Communications Decency Act, a 1996 law that provides broad immunity from claims over harmful content posted by users. The plaintiffs, however, argue that it is the design features of these platforms, not user-generated content, that drive addiction.

Meta’s Davis warns that if this shield is removed, it could create “real problems” for the internet with ramifications for free speech.

Even if the lawsuit does not succeed, it has focused public attention on mental health and internet safety, one of the few political issues with bipartisan support in the US and elsewhere.

California governor Gavin Newsom last September signed a bill called the California Age-Appropriate Design Code Act. It requires social media platforms to establish the age of child users with a “reasonable level of certainty”, among other measures, but is being challenged in court.

Legislators in Minnesota have proposed a bill that would ban social media companies from targeting users under 18 with algorithms that curate content a user might like or interact with. Each violation would carry a $1,000 penalty.

On Thursday, Utah passed a bill into law that imposes age verification for all users and requires parental consent to use social media for under-18s starting in 2024. Similar proposals are being put forward in states including Texas and Ohio.

Bereaved parents such as Mitchell say they will not stop campaigning until tougher regulation is enforced. "If I can help another child, and another parent, from experiencing my horror," she says, "then I've done what I need to do."


Originally published at https://www.ft.com on March 26, 2023.