the health strategist

institute for continuous health systems transformation and digital health

Joaquim Cardoso MSc.

Founder and CEO

Education:

Engineering (BSc Post Graduation), Administration (MSc.), Technology (MSc thesis)

Experience:

Researcher, Professor, Editor and Consultant (Senior Advisor)

What is the message?

Radiologists deliver fewer false-positive results than advanced AI models

Health Imaging

Chad Van Alstin

September 26, 2023

A recent retrospective study from Denmark sheds light on the limitations of artificial intelligence (AI) in helping radiologists interpret chest radiographs. The researchers compared the diagnostic accuracy of AI tools with that of radiologists in detecting three common chest findings and found that radiologists may hold an advantage, particularly when a radiograph shows several findings at once.

Study Details:

Researchers compared four commercially available AI tools with the clinical reports of 72 radiologists, evaluating 2,040 consecutive adult chest X-rays taken at four Danish hospitals in January 2020.

The patient group had a median age of 72 years, and 32.8% of the X-rays showed at least one target finding. Three common findings were assessed: airspace disease, pneumothorax, and pleural effusion.

Key Findings:

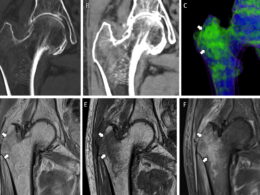

- AI Sensitivity Rates: The AI tools exhibited sensitivity rates ranging from 72% to 91% for airspace disease, 63% to 90% for pneumothorax, and 62% to 95% for pleural effusion.

- AI Performance with Multiple Findings: The AI tools showed high specificity on chest X-rays with normal or single findings. However, specificity dropped markedly when four or more findings were present on a single image.

- Effect of Finding Size: AI sensitivity was lower for vague airspace disease (33% to 61%) and for small pneumothorax or pleural effusion (9% to 94%) than for larger findings (81% to 100%).
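The sensitivity and specificity figures above come from comparing each AI output with the reference-standard label, then grouping the results by a stratum such as the number of findings on the radiograph. A minimal Python sketch of that bookkeeping might look like this; the function names and the example records are hypothetical and are not taken from the study.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# (stratum, reference-standard label, AI flag); hypothetical illustration format
Record = Tuple[str, bool, bool]


def sens_spec(pairs: Iterable[Tuple[bool, bool]]) -> Tuple[float, float]:
    """Return (sensitivity, specificity) from (truth, prediction) pairs.

    A value is NaN when its denominator is empty, e.g. a stratum with no positives.
    """
    tp = fn = tn = fp = 0
    for truth, pred in pairs:
        if truth:
            if pred:
                tp += 1  # finding present and flagged
            else:
                fn += 1  # finding present but missed
        else:
            if pred:
                fp += 1  # finding absent but flagged: a false positive
            else:
                tn += 1  # finding absent and not flagged
    sensitivity = tp / (tp + fn) if tp + fn else float("nan")
    specificity = tn / (tn + fp) if tn + fp else float("nan")
    return sensitivity, specificity


def stratified_sens_spec(records: Iterable[Record]) -> Dict[str, Tuple[float, float]]:
    """Group records by stratum and compute sensitivity/specificity per group."""
    groups: Dict[str, List[Tuple[bool, bool]]] = defaultdict(list)
    for stratum, truth, pred in records:
        groups[stratum].append((truth, pred))
    return {stratum: sens_spec(pairs) for stratum, pairs in groups.items()}


# Hypothetical toy data, not study data
EXAMPLE_RECORDS: List[Record] = [
    ("0-1 findings", False, False),
    ("0-1 findings", False, False),
    ("0-1 findings", False, True),
    ("0-1 findings", True, True),
    ("4+ findings", False, True),
    ("4+ findings", False, True),
    ("4+ findings", False, False),
    ("4+ findings", True, True),
]

if __name__ == "__main__":
    for stratum, (sens, spec) in stratified_sens_spec(EXAMPLE_RECORDS).items():
        print(f"{stratum}: sensitivity={sens:.2f}, specificity={spec:.2f}")
```

With these toy records, specificity falls from 0.67 in the "0-1 findings" stratum to 0.33 in the "4+ findings" stratum, a miniature version of the pattern the study describes.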

Implications:

The study suggests that AI falls short of trained radiologists in real-life settings involving diverse patient scans. Although the AI tools detected disease well, they produced more false-positive findings than the radiology reports. False positives can lead to unnecessary imaging, increased radiation exposure, and higher healthcare costs.
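The false-positive burden follows directly from specificity: among images without a finding, the expected number of false positives is (1 - specificity) multiplied by the number of negative images. The sketch below uses hypothetical inputs, not figures from the study, to compute expected counts and the resulting positive and negative predictive values (PPV, NPV) from sensitivity, specificity, and prevalence.

```python
from typing import Dict, Tuple


def expected_counts(n: int, prevalence: float, sensitivity: float,
                    specificity: float) -> Dict[str, float]:
    """Expected confusion-matrix cells for n images at a given prevalence."""
    positives = n * prevalence
    negatives = n - positives
    tp = sensitivity * positives
    fp = (1 - specificity) * negatives  # negative images wrongly flagged
    return {"tp": tp, "fn": positives - tp, "tn": negatives - fp, "fp": fp}


def predictive_values(n: int, prevalence: float, sensitivity: float,
                      specificity: float) -> Tuple[float, float]:
    """Return (PPV, NPV) implied by the expected counts."""
    c = expected_counts(n, prevalence, sensitivity, specificity)
    ppv = c["tp"] / (c["tp"] + c["fp"])
    npv = c["tn"] / (c["tn"] + c["fn"])
    return ppv, npv


if __name__ == "__main__":
    # Hypothetical scenario: 1,000 images, 10% prevalence, 90% sensitivity
    for spec in (0.95, 0.85):
        c = expected_counts(1000, 0.10, 0.90, spec)
        ppv, npv = predictive_values(1000, 0.10, 0.90, spec)
        print(f"specificity={spec:.2f}: false positives={c['fp']:.0f}, "
              f"PPV={ppv:.2f}, NPV={npv:.3f}")
```

Under these assumed inputs, dropping specificity from 0.95 to 0.85 triples the expected false positives (45 to 135) while NPV barely moves (about 0.988 to 0.987), which is one way a tool can report a high NPV yet still trigger many unnecessary follow-ups.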

Lead Researcher’s Insight:

Dr. Louis Plesner, the lead researcher, emphasized that accurate diagnosis depends on AI’s ability to rule out disease. He noted that AI is good at finding disease but struggles to confirm its absence, particularly on complex chest X-rays.

Plesner also pointed out that in previous studies showing AI outperforming radiologists, the radiologists often lacked access to the patient’s medical history and prior imaging.

Radiologists, on the other hand, rely on a synthesis of various data points to make a diagnosis.

Plesner speculated that the next generation of AI tools could become more powerful if they could mimic this synthesis, but such systems are not yet available.

In summary, the study indicates that while AI has promise in radiology, it still lags behind human radiologists, particularly in cases with complex or multiple findings.

Further advancements in AI technology may be needed to bridge this gap in diagnostic accuracy.

Source:

Original paper:

Commercially Available Chest Radiograph AI Tools for Detecting Airspace Disease, Pneumothorax, and Pleural Effusion

Radiology

Louis Lind Plesner, Felix C. Müller, Mathias W. Brejnebøl, Lene C. Laustrup, Finn Rasmussen, Olav W. Nielsen, Mikael Boesen*, Michael Brun Andersen*

* M.B. and M.B.A. are co-senior authors.

Author Affiliations

- From the Department of Radiology, Herlev and Gentofte Hospital, Borgmester Ib Juuls Vej 1, Herlev, Copenhagen 2730, Denmark (L.L.P., F.C.M., M.W.B., L.C.L., M.B.A.);

- Faculty of Health Sciences, University of Copenhagen, Copenhagen, Denmark (L.L.P., M.W.B., O.W.N., M.B., M.B.A.);

- Radiological Artificial Intelligence Testcenter, RAIT.dk, Capital Region of Denmark (L.L.P., F.C.M., M.W.B., M.B., M.B.A.);

- Departments of Radiology (M.W.B., M.B.) and Cardiology (O.W.N.), Bispebjerg and Frederiksberg Hospital, Copenhagen, Denmark; and

- Department of Radiology, Aarhus University Hospital, Aarhus, Denmark (F.R.).

ABSTRACT

Background

Commercially available artificial intelligence (AI) tools can assist radiologists in interpreting chest radiographs, but their real-life diagnostic accuracy remains unclear.

Purpose

To evaluate the diagnostic accuracy of four commercially available AI tools for detection of airspace disease, pneumothorax, and pleural effusion on chest radiographs.

Materials and Methods

This retrospective study included consecutive adult patients who underwent chest radiography at one of four Danish hospitals in January 2020. Two thoracic radiologists (or three, in cases of disagreement) who had access to all previous and future imaging labeled chest radiographs independently for the reference standard. Area under the receiver operating characteristic curve, sensitivity, and specificity were calculated. Sensitivity and specificity were additionally stratified according to the severity of findings, number of findings on chest radiographs, and radiographic projection. The χ² and McNemar tests were used for comparisons.
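The McNemar test suits paired comparisons, such as an AI tool and a radiology report judged on the same radiographs, because only the discordant pairs carry information. The abstract does not say which McNemar variant was used, so the sketch below shows both the exact binomial form and the continuity-corrected chi-square form; the helper names and the example data are hypothetical.

```python
from math import comb, erfc, sqrt
from typing import Sequence, Tuple


def discordant_counts(ai_correct: Sequence[bool],
                      report_correct: Sequence[bool]) -> Tuple[int, int]:
    """Count discordant pairs: b = AI right/report wrong, c = AI wrong/report right."""
    if len(ai_correct) != len(report_correct):
        raise ValueError("inputs must be paired, one entry per radiograph")
    b = sum(1 for a, r in zip(ai_correct, report_correct) if a and not r)
    c = sum(1 for a, r in zip(ai_correct, report_correct) if r and not a)
    return b, c


def mcnemar_exact(b: int, c: int) -> float:
    """Two-sided exact McNemar p-value: binomial test of b vs c with p = 0.5."""
    n = b + c
    if n == 0:
        return 1.0
    k = min(b, c)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n  # P(X <= k)
    return min(1.0, 2 * tail)


def mcnemar_chi2(b: int, c: int) -> Tuple[float, float]:
    """Continuity-corrected McNemar statistic and p-value (chi-square, 1 df)."""
    n = b + c
    if n == 0:
        return 0.0, 1.0
    stat = max(abs(b - c) - 1, 0) ** 2 / n
    # Survival function of chi-square with 1 df: P(X > x) = erfc(sqrt(x / 2))
    return stat, erfc(sqrt(stat / 2))


if __name__ == "__main__":
    # Hypothetical per-radiograph correctness for one target finding
    ai = [True, True, False, True, False, True, True, True]
    report = [True, False, True, True, True, True, False, True]
    b, c = discordant_counts(ai, report)
    print(b, c, mcnemar_exact(b, c), mcnemar_chi2(b, c))
```

Images where both readers agree (both right or both wrong) drop out entirely; the test asks only whether the AI-only and report-only errors are unbalanced.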

Results

The data set comprised 2040 patients (median age, 72 years [IQR, 58–81 years]; 1033 female), of whom 669 (32.8%) had target findings. The AI tools demonstrated areas under the receiver operating characteristic curve ranging 0.83–0.88 for airspace disease, 0.89–0.97 for pneumothorax, and 0.94–0.97 for pleural effusion. Sensitivities ranged 72%–91% for airspace disease, 63%–90% for pneumothorax, and 62%–95% for pleural effusion. Negative predictive values ranged 92%–100% for all target findings. In airspace disease, pneumothorax, and pleural effusion, specificity was high for chest radiographs with normal or single findings (range, 85%–96%, 99%–100%, and 95%–100%, respectively) and markedly lower for chest radiographs with four or more findings (range, 27%–69%, 96%–99%, 65%–92%, respectively) (P < .001). AI sensitivity was lower for vague airspace disease (range, 33%–61%) and small pneumothorax or pleural effusion (range, 9%–94%) compared with larger findings (range, 81%–100%; P value range, > .99 to < .001).
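The area under the receiver operating characteristic curve can be read as the probability that a randomly chosen image with the finding receives a higher AI score than a randomly chosen image without it, with ties counted as one half (the Mann–Whitney formulation). A brute-force sketch, assuming each AI tool outputs a continuous score per image; the abstract does not describe the tools' outputs or the software used to compute AUC, and the function name and data are hypothetical.

```python
from typing import Sequence


def auc_mann_whitney(scores: Sequence[float], labels: Sequence[bool]) -> float:
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative image")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0  # positive image ranked above negative image
            elif p == q:
                wins += 0.5  # tie counts as half a correct ranking
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Hypothetical AI scores and reference-standard labels for five images
    scores = [0.9, 0.8, 0.4, 0.3, 0.2]
    labels = [True, False, True, False, False]
    print(auc_mann_whitney(scores, labels))  # 5 of 6 pairs ranked correctly
```

The quadratic loop keeps the sketch readable; a rank-based computation gives the same value in O(n log n) for larger data sets.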

Conclusion

Current-generation AI tools showed moderate to high sensitivity for detecting airspace disease, pneumothorax, and pleural effusion on chest radiographs.

However, they produced more false-positive findings than radiology reports, and their performance decreased for smaller-sized target findings and when multiple findings were present.