the health strategist

institute, portal & consulting

for workforce health & economic prosperity

Joaquim Cardoso MSc.

Servant Leader, Chief Research & Strategy Officer (CRSO),

Editor in Chief and Senior Advisor

January 4, 2024

This executive summary is based on the article “Google researchers deal a major blow to the theory AI is about to outsmart humans”, written by Hasan Chowdhury and published by Business Insider on November 7, 2023.

What is the message?

Google researchers have delivered a significant setback to the pursuit of Artificial General Intelligence (AGI) by highlighting limitations in transformers, the technology that powers large language models (LLMs) like ChatGPT.

The study indicates that transformers struggle to generalize to tasks beyond their pre-training data, calling into question the feasibility of imminent AGI.

ONE PAGE SUMMARY

What are the key points?

Transformer Limitations:

The study reveals that transformers, while proficient in tasks related to their training data, exhibit various failure modes and degraded generalization when faced with out-of-domain tasks or extrapolation challenges.

AGI Ambitions Checked:

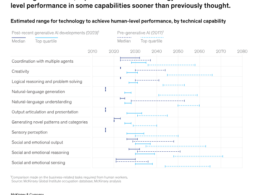

The findings suggest that achieving AGI, a goal representing AI’s ability to perform any task humans can, may be more distant than anticipated. The technology’s struggle with even simple extrapolation tasks indicates a need for caution in predicting imminent AGI.

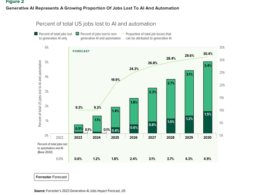

Investment and Expectations:

The paper challenges the collective belief in the potential of LLMs and calls for a reassessment of the limits of current AI technologies. Investors and tech enthusiasts aiming for AGI may need to recalibrate their expectations and investment strategies.

What are the key statistics?

The paper underscores the struggle of transformers in handling tasks beyond their pre-training data, indicating a gap in their ability to generalize.

The study highlights the need to temper expectations about the timeline for achieving AGI, suggesting that the current technology might not be as advanced as previously believed.

What are the key examples?

The research focuses on transformers’ failure modes and limitations in generalization, challenging the collective belief in the potential of AI, especially LLMs.

OpenAI CEO Sam Altman’s stated desire to “build AGI together” with Microsoft contrasts with the study’s findings, which emphasize the challenges in achieving generalized AI capabilities.

Conclusion

While transformers have demonstrated prowess in specific tasks, the research suggests that the current state of AI technology, particularly its difficulty with extrapolation and out-of-domain challenges, calls for a more measured approach.

The findings prompt a reevaluation of AGI expectations and highlight the complexity of developing AI systems that can generalize across diverse domains.

Investors, researchers, and industry players may need to recalibrate their strategies in light of these insights.
