Hardian Health

DR HUGH HARVEY

Downloaded on April 26, 2022

Key messages

Summarized by Joaquim Cardoso MSc.

Health Revolution Institute

AI Health Unit

April 26, 2022

Oxipit have truly made a bold step forward, daring to go beyond where others have yet to tread.

- They have achieved a breakthrough IIb regulatory approval for autonomous AI, although technically only fully autonomous for normal CXR cases, not abnormal CXR cases.

- This should not be underestimated, and my congratulations go out to their team. Lithuania should be proud!

The practical realities, legal ramifications and questions still remain however.

- With so many different legislative frameworks out there, from GDPR to IR(ME)R to EURATOM to the CQC, they will still have a battle ahead before we see this technology fully embraced by healthcare systems.

- By carefully arguing the merits of reducing human reporting workload (of which they are claiming a 10–40% reduction) as well as the presumed financial and safety impacts, there may well be a case for legislative change.

For now, while the first autonomous AI is finally available — and I’m certain that others will quickly follow suit — how fast the relevant laws can be circumnavigated or changed remains to be seen.

This news of autonomous AI is good — but not quite true just yet…

ORIGINAL PUBLICATION (full version)

Autonomous AI — too good to be true?

Table of Contents (TOC)

- INTRODUCTION

- WHAT DOES AUTONOMOUS MEAN?

- CAN AUTONOMOUS AI REALLY BE REGULATORY APPROVED?

- IS IT SAFE?

- WHO IS MEDICO-LEGALLY LIABLE?

- IS IT LEGAL?

- TOO GOOD TO BE TRUE?

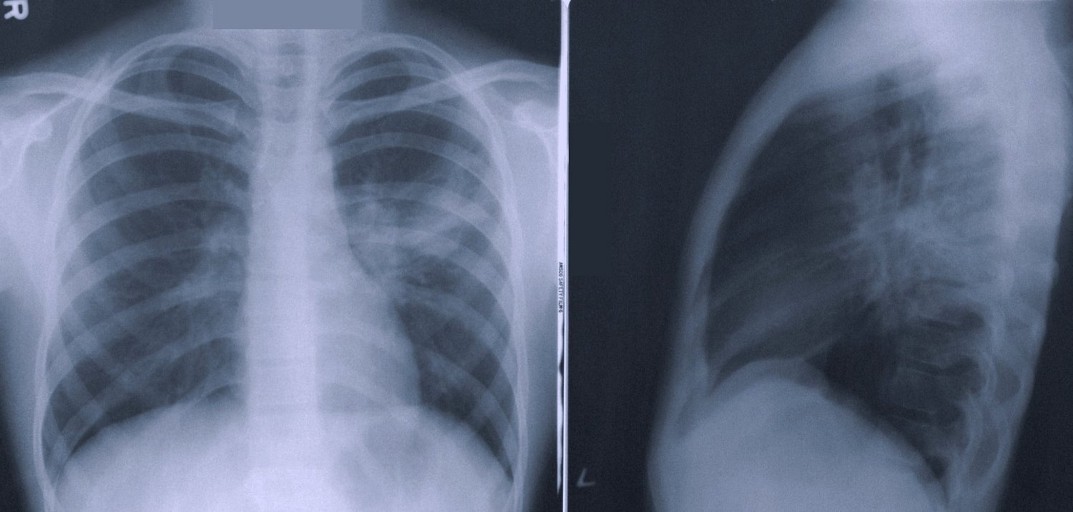

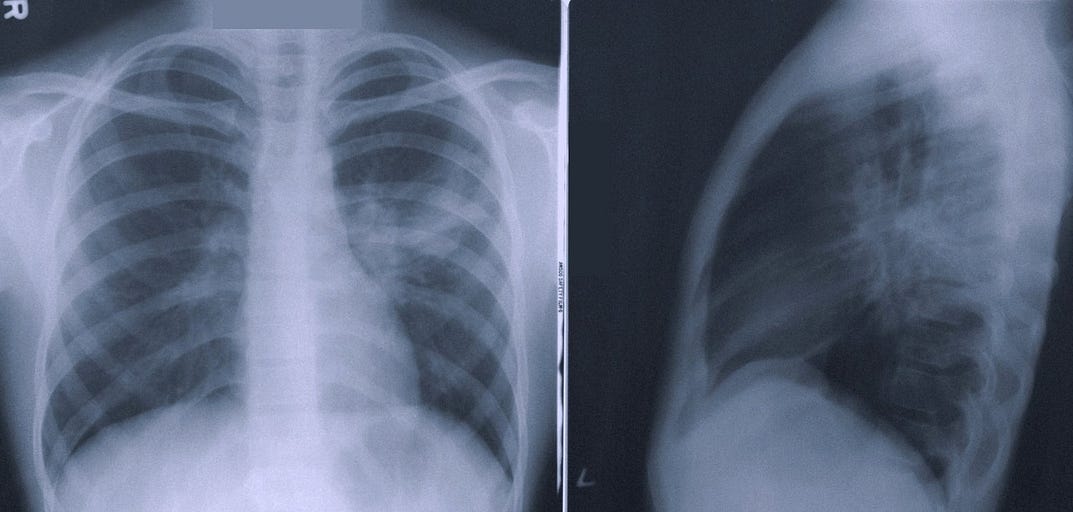

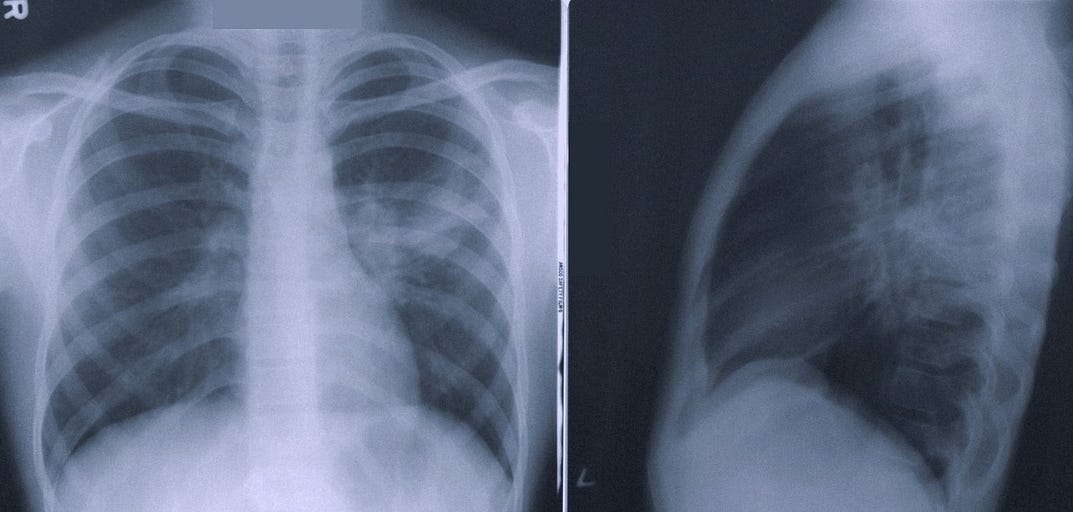

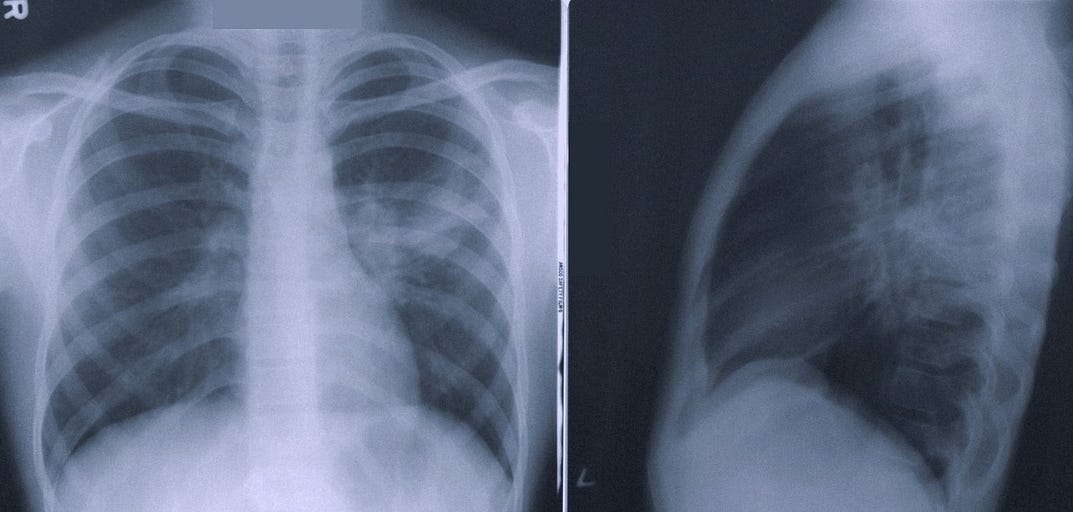

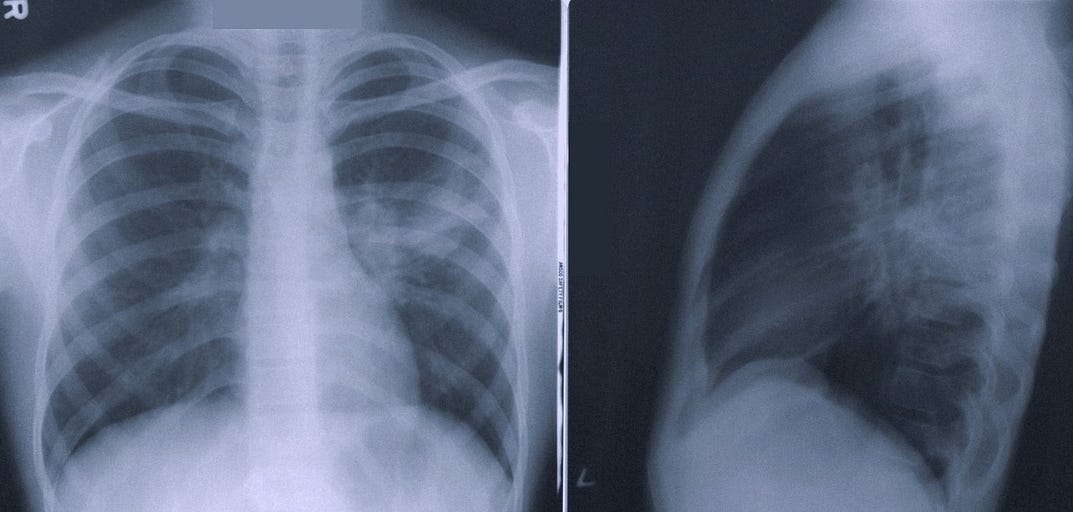

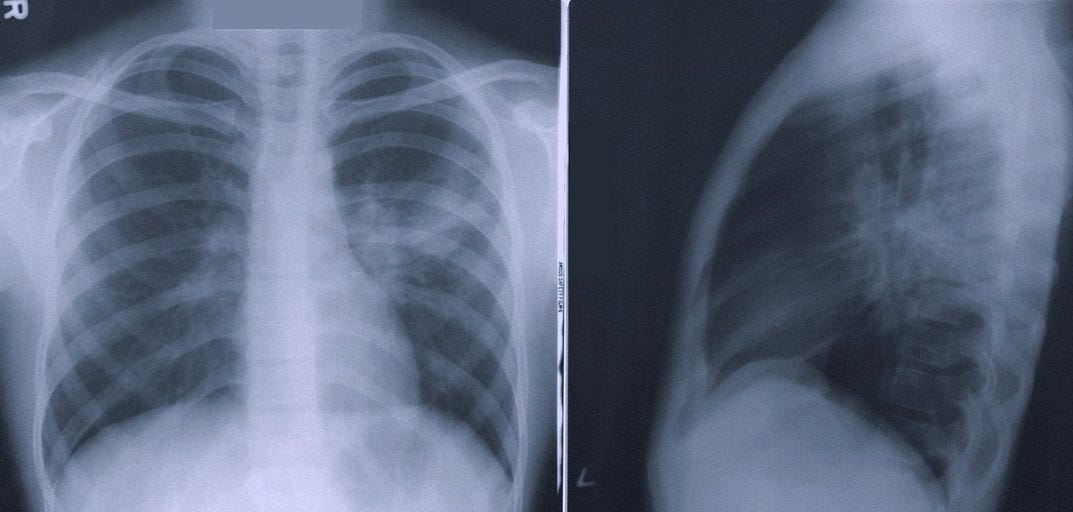

One of the ‘low-hanging fruits’ of radiology AI has long been assumed to be separating normal from abnormal chest X-ray (CXR) studies.

Indeed, Dr Nicola Strickland, former President of the UK Royal College of Radiologists, has often cited this very example as being of particular use to the NHS, given the national backlogs of CXRs piling up while awaiting a report.

Several academics, AI startups and even the behemoth Google have been hard at work on this very problem, and NICE have even assessed a few of them.

None have yet dared cross the boundary into full reporting autonomy however, instead remaining firmly in the ‘triage’ camp.

Well, here we are only a couple of years later, and apparently AI autonomy is now a reality, at least according to Lithuanian start-up Oxipit, who announced a breakthrough class IIb regulatory approval for ‘autonomous AI’.

The news has certainly made waves in the radiology community, and raised several eyebrows as well as questions — as all giant steps forward should.

We were told there would be disruptors — but were we ready to actually be disrupted? What does this all mean, and can it really be true? Let’s take a look…

What does autonomous mean?

Oxipit’s claim of autonomy stems from its intended use:

ChestLink automatically and autonomously (without the involvement of a radiologist) evaluates a chest X-ray study. The software is indicated to automatically detect actionable radiological findings on chest radiographs. After this analysis, one of two actions is performed:

(1) If ChestLink is confident that a study has no actionable radiological findings, a report is automatically generated. The study is not reported on by a trained radiologist.

(2) If ChestLink cannot confidently rule out the presence of actionable radiological findings, the study is directed to be reported on by a radiologist.
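The two-branch intended use quoted above amounts to an asymmetric routing rule. The sketch below is purely illustrative: it assumes a hypothetical per-study `abnormality_score` between 0 and 1 and a hypothetical `NORMAL_THRESHOLD`, since Oxipit's actual model outputs and operating point are not public.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_REPORT_NORMAL = "auto_report_normal"  # branch (1): report generated, no radiologist
    RADIOLOGIST_REVIEW = "radiologist_review"  # branch (2): study goes to a radiologist


# Hypothetical operating point: scores below this count as "confidently normal".
NORMAL_THRESHOLD = 0.02


@dataclass
class Study:
    study_id: str
    abnormality_score: float  # hypothetical model output in [0, 1]


def route_study(study: Study, threshold: float = NORMAL_THRESHOLD) -> Route:
    """Apply the two-branch rule; anything other than a confident normal goes to a human."""
    score = study.abnormality_score
    # The chained comparison is False for NaN, so NaN and out-of-range scores go to review.
    if not 0.0 <= score <= 1.0:
        return Route.RADIOLOGIST_REVIEW
    if score < threshold:
        return Route.AUTO_REPORT_NORMAL
    return Route.RADIOLOGIST_REVIEW


worklist = [Study("A", 0.01), Study("B", 0.40), Study("C", float("nan"))]
routes = {s.study_id: route_study(s) for s in worklist}
# A is auto-reported; B and C are sent for radiologist review.
```

The design choice worth noticing is the asymmetry: only one branch bypasses a human, and anything unexpected (including an out-of-range or NaN score) falls back to radiologist review.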

Now, there is a nuance here that is important to clarify. All current radiology AI systems on market already ‘autonomously’ analyse medical images without the involvement of a radiologist.

That is nothing new; indeed, most systems do this in a matter of seconds, providing a report, overlay or triage notification to a radiologist after the analysis.

What is different here is the specific claim that the ChestLink AI product can so confidently predict a ‘normal’ CXR study that a radiologist does not have to check or verify the AI output.

Instead, Oxipit claims to autonomously report a normal study directly back to the referrer by pushing a complete report to PACS. It is this production of a final report without human review that boldly pushes the boundaries into AI autonomy.

Of particular note, Oxipit cannot claim full autonomy for all CXR reporting across all findings. According to their website and intended use, any suspicion of abnormality is left for a radiologist to decide, despite their marketing stating that this ‘is the first certificate issued for a fully autonomous AI’.

I’ll put this down to slightly exaggerated marketing speak, although technically I would prefer to see this stated as partial autonomy, or perhaps fully autonomous normal reporting — which is still in itself a significant breakthrough to be proud of.

Can autonomous AI really be regulatory approved?

There was some confusion as to the validity of their initial announcements of CE marking, as they incorrectly published their QMS certificate as evidence instead of their declaration of conformity (which I have now seen and can confirm is correct).

The simple answer to whether autonomous AI can be approved is yes, it can, and Oxipit are indeed the first in radiology AI to achieve class IIb certification for this intended use.

There is significant precedent for autonomous medical devices right across healthcare, from drug pumps that auto-adjust dosages (smart infusion pumps), to blood film readers that count cells, to implantable cardioverter-defibrillators that shock patients without any doctor’s input.

In order to receive regulatory approval for such an AI device, one must first look at the regulatory risk classification rules, specifically Annex VIII, Rule 11 of the EU MDR (Regulation (EU) 2017/745), which explains how software is classified:

Software intended to provide information which is used to take decisions with diagnosis or therapeutic purposes is classified as class IIa, except if such decisions have an impact that may cause:

– death or an irreversible deterioration of a person’s state of health, in which case it is in class III; or

– a serious deterioration of a person’s state of health or a surgical intervention, in which case it is classified as class IIb.
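The first paragraph of Rule 11 can be read as a lookup from the worst foreseeable impact of a decision to a class. The sketch below is a simplification under stated assumptions: the enum and function names are hypothetical, the remaining paragraphs of Rule 11 are not modelled, and the ChestLink impacts in the final line reflect the presumed Notified Body argument discussed here, not anything from Oxipit's technical file.

```python
from enum import Enum


class WorstCaseImpact(Enum):
    """Worst foreseeable impact of a decision taken using the software's output."""
    OTHER = "other"
    SERIOUS_DETERIORATION = "serious deterioration or surgical intervention"
    DEATH_OR_IRREVERSIBLE = "death or irreversible deterioration"


# Lowest to highest risk, used to pick a device's overall class.
CLASS_ORDER = ["IIa", "IIb", "III"]


def rule_11_class(impact: WorstCaseImpact) -> str:
    """Simplified reading of the first paragraph of MDR Annex VIII, Rule 11 only."""
    if impact is WorstCaseImpact.DEATH_OR_IRREVERSIBLE:
        return "III"
    if impact is WorstCaseImpact.SERIOUS_DETERIORATION:
        return "IIb"
    return "IIa"


def device_class(function_impacts: list[WorstCaseImpact]) -> str:
    """A device takes the class of its highest-risk function."""
    if not function_impacts:
        raise ValueError("at least one function is required")
    return max((rule_11_class(i) for i in function_impacts), key=CLASS_ORDER.index)


# Presumed argument: an auto-reported normal that is wrong risks serious deterioration,
# while studies routed to a radiologist are ordinary decision support.
chestlink_presumed = device_class(
    [WorstCaseImpact.SERIOUS_DETERIORATION, WorstCaseImpact.OTHER]
)  # "IIb"
```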

Oxipit must have successfully managed to create a logical argument for being classified under class IIb as a device that can diagnose ‘normality’.

I imagine (but cannot confirm as I do not have visibility on their complete technical file) that they presented to their Notified Body that the highest risk that a missed abnormality could cause is a serious deterioration, but not death of a patient (which would make it class III, as per rule 11 above).

This makes sense, and is all in keeping with the regulations and the IMDRF risk classification matrix (below), which all international regulators now use.
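The IMDRF framework for Software as a Medical Device (IMDRF/SaMD WG/N12) crosses the state of the healthcare situation with the significance of the information the software provides. A minimal lookup sketch of that matrix follows; the string keys are hypothetical labels, and IMDRF categories I to IV do not map one-to-one onto EU MDR classes.

```python
# Rows: state of the healthcare situation.
# Columns: significance of the information ("drive" = drive clinical management,
# "inform" = inform clinical management).
IMDRF_MATRIX = {
    "critical": {"treat_or_diagnose": "IV", "drive": "III", "inform": "II"},
    "serious": {"treat_or_diagnose": "III", "drive": "II", "inform": "I"},
    "non_serious": {"treat_or_diagnose": "II", "drive": "I", "inform": "I"},
}


def imdrf_category(situation: str, significance: str) -> str:
    """Look up the IMDRF SaMD category (I lowest, IV highest)."""
    try:
        return IMDRF_MATRIX[situation][significance]
    except KeyError as err:
        raise ValueError(
            f"unknown situation/significance: {situation!r}, {significance!r}"
        ) from err


# Diagnosing in a serious (rather than critical) situation sits one category below the top.
serious_diagnosis = imdrf_category("serious", "treat_or_diagnose")  # "III"
```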

You may be wondering why this device is not class III — well, it would be hard to argue that if a CXR was waiting in a backlog pile for 3 months that any finding was in fact ‘critical’.

Even in an emergency setting, it is hard to argue that total reliance on a CXR alone for any suspected critical finding would be clinically acceptable; such patients should be heading for a CT.

One mild criticism I do have, though, is that nowhere is it publicly specified whether this system is intended for use on CXRs of a specific provenance (e.g. outpatient vs emergency).

This may be stated in their full Instructions For Use, but that document is not publicly available. If the system is only intended for outpatient use, then class IIb is undoubtedly adequate.

If ChestLink finds an abnormal film (or, more accurately, cannot confidently call a study ‘normal’), then the image is reviewed by a radiologist, which is a well-established class IIa function.

However, medical devices are always classed as per their highest perceived risk, so Oxipit have correctly been designated IIb for the entire system.

Other CXR AI software on market that can differentiate normal from abnormal has, in general, only been certified as class IIa for ‘triage’ (CADt) or decision support (CADe), rather than diagnosis (CADx) (and some are still incorrectly on market under class I).

If those companies wish to also claim autonomy for normal vs abnormal reporting, they will have to re-certify and up-class their CE marks to IIb.

And by the way, if they are already claiming autonomy and that normal CXRs do not need to be read after their AI review, then they should expect a rude visit from the regulators as it is illegal to market a product outside of its certified intended use.

On a side note, Oxipit are not the first to receive a class IIb certification for CXR AI — there are at least three others who have the same designation, however they do not claim autonomy.

Hardian Health is a clinical digital consultancy focussed on leveraging technology into healthcare markets through clinical strategy, scientific validation, regulation, health economics and intellectual property.

Is it safe?

This is a logical next question — and the answer will lie in the data.

Unfortunately at the time of writing there are no peer-reviewed publications from Oxipit or any third-party evaluators assessing the performance of the device in question.

However, the company has said one will be published soon.

So, what do we know about its performance?

Only what the manufacturer tells us, which is that “the sensitivity metric of 99% has translated to zero clinically relevant errors at our deployment institutions during the application piloting stage”.

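Assuming ‘positive’ here means an actionable abnormality, a sensitivity of 99% means that roughly 99 out of 100 abnormal studies were correctly flagged for radiologist review, so that around 1 in 100 abnormal studies could be wrongly reported as normal. That is certainly impressive, although sensitivity alone does not tell us how many normal studies the system can actually report autonomously.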
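Because a single headline figure is easy to misread, it helps to separate the metrics. The sketch below treats an actionable abnormality as the ‘positive’ class and uses invented counts (not Oxipit data) to show that sensitivity, specificity, negative predictive value and the share of studies auto-reported answer different questions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    # "Positive" = the study contains an actionable abnormality.
    tp: int  # abnormal, flagged for a radiologist
    fn: int  # abnormal, wrongly auto-reported as normal
    tn: int  # normal, auto-reported as normal
    fp: int  # normal, sent to a radiologist anyway

    @property
    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    @property
    def npv(self) -> float:
        # Of the studies auto-reported as normal, the share that truly were normal.
        return self.tn / (self.tn + self.fn)

    @property
    def auto_report_fraction(self) -> float:
        # Share of all studies closed without a radiologist.
        total = self.tp + self.fn + self.tn + self.fp
        return (self.tn + self.fn) / total


example = ConfusionCounts(tp=2970, fn=30, tn=2800, fp=4200)  # invented counts
```

With these invented counts, sensitivity is 0.99 yet only 28.3% of studies are auto-reported, because specificity is 0.40. The auto-reported share is, roughly, the quantity a workload-reduction figure such as the claimed 10–40% describes.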

However, what we need to know in order to contextualise this claim is how they ground-truthed ‘normal’ — ideally it would be through consensus of a number of blinded qualified radiologists, perhaps even with follow up CT scans.

Only a paper outlining their methodology and results will clarify.

Radiologists will want to know the size of the training dataset, the demographics of the validation population, and the statistical confidence intervals and powering of the study.
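Why confidence intervals and study size matter can be shown with a Wilson score interval for a proportion. This is a standard-library sketch; the sample sizes are illustrative and are not Oxipit's.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 gives about 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    if not 0 <= successes <= n:
        raise ValueError("successes must be between 0 and n")
    p_hat = successes / n
    denom = 1 + z**2 / n
    centre = (p_hat + z**2 / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2))
    return centre - half_width, centre + half_width


# The same 99% point estimate, measured on two different (illustrative) sample sizes.
for correct, n in [(99, 100), (990, 1000)]:
    low, high = wilson_interval(correct, n)
    print(f"{correct}/{n}: 95% CI {low:.3f} to {high:.3f}")
```

Here 99 correct out of 100 gives a 95% interval of roughly 0.946 to 0.998, while 990 out of 1,000 narrows it to roughly 0.982 to 0.995, which is why the size and powering of a validation study matter as much as the point estimate.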

For now we have to assume the regulatory auditors were content with what they were presented.

The fact that such data is not available is not a fault of the manufacturer — the regulations do not mandate sharing of such data publicly.

However, it is widely considered best practice to share this information for transparency and to engender trust.

Many guidelines have been produced stating that model facts cards or TRIPOD statements should be provided alongside any new device.

I would certainly expect prospective purchasers to request these, as per the ECLAIR guidelines!

What we can say with confidence is that the Oxipit system (just as for any CE-marked system) has undergone regulatory scrutiny (by TÜV Rheinland, a notoriously strict Notified Body) and the auditors were satisfied with the benefits and risks, clinical evaluation and any risk mitigations laid out in the documentation provided.

Additionally, Oxipit state that the system should not be deployed immediately in an autonomous fashion. Rather, a three-stage framework of retrospective audit, supervised operation and then autonomous reporting should be used, which seems eminently sensible.
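The three-stage framework can be pictured as a small state machine in which a site only moves forward when its own audit evidence supports it. This is a hypothetical sketch: the `min_studies` and `max_misses` thresholds, and the rule of dropping back to supervision after a miss, are illustrative assumptions rather than Oxipit's published criteria.

```python
from enum import IntEnum


class Stage(IntEnum):
    RETROSPECTIVE_AUDIT = 1
    SUPERVISED = 2
    AUTONOMOUS = 3


def next_stage(
    stage: Stage,
    studies_reviewed: int,
    clinically_relevant_misses: int,
    min_studies: int = 1000,  # hypothetical evidence threshold
    max_misses: int = 0,      # hypothetical tolerance
) -> Stage:
    """Advance at most one stage when local audit evidence meets the (hypothetical) criteria."""
    if clinically_relevant_misses > max_misses:
        # Hypothetical safety rule: too many misses means stay at, or fall back to, supervision.
        return min(stage, Stage.SUPERVISED)
    if stage is Stage.AUTONOMOUS or studies_reviewed < min_studies:
        return stage
    return Stage(stage + 1)
```

For example, `next_stage(Stage.RETROSPECTIVE_AUDIT, 1500, 0)` moves a site to supervised operation, while a miss found during autonomous reporting returns it to `Stage.SUPERVISED`.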

The device does what it says on the tin, will be audited and verified before use, and will be followed up post market, as is mandatory.

On paper, this is as safe as any other device on market — at least from a regulatory perspective.

Oxipit have now registered their device on EUDAMED (and will also need to register with the UK MHRA via a UK Responsible Person, or UKRP).

They will also need to update their clinical evaluation report annually with post market data to prepare for repeat audits.

Who is medico-legally liable?

The question of legal liability in the context of medical AI has been widely discussed.

The primary focus is on negligence and tort law, where, at least according to the American Law Institute, negligence is defined as “conduct which falls below the standard established by law for the protection of others against unreasonable risk of harm”.

Indeed, it has been suggested that from a legal perspective an AI diagnostic tool cannot make a diagnosis. On the other hand, perhaps it is the legal system itself that is outdated, and AI companies need to start lobbying for changes.

By claiming full autonomy by an AI with no human intervention for normal cases, Oxipit are essentially directly addressing this practically unexplored area of tort law and stating that they are confident that ChestLink can provide medical care non-inferior to standard care.

This is a bold position, and one that falls outside the remit of the regulatory approval itself, which only certifies the product as safe and performant. The regulations say nothing about legal liability, after all, except for Article 10(16) of the general obligations of manufacturers, which states that “manufacturers shall, in a manner that is proportionate to the risk class, type of device and the size of the enterprise, have measures in place to provide sufficient financial coverage in respect of their potential liability under Directive 85/374/EEC”.

I can only assume that Oxipit are ready to tackle this head on, and take full medico-legal liability should their system cause a patient harm, which, according to their own data, should happen in fewer than 1 in 100 abnormal cases, as per their publicised accuracy metrics.
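Even a small miss rate becomes a concrete number at scale, which is what a liability or insurance discussion would turn on. A back-of-envelope sketch follows, using the headline 99% sensitivity with a hypothetical annual volume and abnormal prevalence (not figures from Oxipit or any hospital), and bearing in mind that not every missed abnormality leads to harm.

```python
def expected_missed_abnormal(annual_studies: int, prevalence: float, sensitivity: float) -> float:
    """Expected abnormal studies per year wrongly auto-reported as normal.

    Assumes misses occur at rate (1 - sensitivity) among abnormal studies only;
    this counts potential misses, not harms, since not every miss changes care.
    """
    for name, value in (("prevalence", prevalence), ("sensitivity", sensitivity)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    if annual_studies < 0:
        raise ValueError("annual_studies must be non-negative")
    return annual_studies * prevalence * (1.0 - sensitivity)


# Hypothetical site: 100,000 CXRs a year, 30% with an actionable finding.
misses = expected_missed_abnormal(100_000, 0.30, 0.99)  # about 300 studies a year
```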

By providing direct diagnosis, and usurping a radiologist from the loop, ChestLink also raises the question of clinical governance of radiology practice.

In the UK, the Care Quality Commission (CQC) oversees the practice of healthcare delivery as an independent regulator — and one would imagine that they would be very interested in ensuring that autonomous AI systems have just as much oversight and assurance as human practice, and may well want UK hospitals using the system to be audited accordingly.

This will place a necessary extra regulatory burden on both the company and its customers.

Is it legal?

Regulatory approval does not necessarily mean that use of an approved product is legal — only safe and performant.

The legal frameworks that surround the delivery of radiology practice also include laws on radiation exposure in medical settings.

In the EU, Council Directive 2013/59/Euratom, and in the UK, the Ionising Radiation (Medical Exposure) Regulations (IR(ME)R), are designed to protect against harms from ionising radiation.

They set out the responsibilities of duty holders (the employer, referrer, practitioner and operator) for radiation protection and the basic safety standards that duty holders must meet.

Of particular note within EURATOM is the definition of ‘clinical evaluation’ after an exposure to ionising radiation for medical purposes (i.e. the process of ‘reading’ the acquired image, and documenting the findings for the medical record).

Firstly, schedule 3 paragraph 1 and regulation 17 state that operators and practitioners should have relevant training in radiation physics, diagnostic radiology, anatomy and more.

These roles must be performed by a “registered health care professional” meaning a person who is a member of a profession regulated by a body mentioned in section 25 of the National Health Service Reform and Health Care Professions Act 2002.

As a diagnostic system, ChestLink could technically be classed as a practitioner or operator under both of these pieces of legislation, and may therefore be required to register with a relevant professional body.

The two pieces of legislation differ slightly in their definition of “clinical evaluation”, however.

In the definitions section of EURATOM “clinical responsibility” means responsibility of a practitioner for individual medical exposures, in particular, justification; optimisation; and the clinical evaluation of the outcome.

The term “clinical responsibility” does not appear in IR(ME)R; instead, clinical evaluation is covered by the definitions of “evaluation”, meaning the interpretation of the outcome and implications of, and of the information resulting from, an exposure to radiation, and “practical aspect”, meaning the physical conduct of a medical exposure and any supporting aspects, including clinical evaluation.

In either case, the laws are clear — the responsibility for the clinical evaluation of a medical exposure to ionising radiation (a CXR) lies with an appropriately qualified and trained human operator.

Laws can be changed though. Is it now time to consider allowing a final report of a clinical evaluation to be performed by an adequately trained and verified computer system?

Oxipit (and many other vendors) will be arguing yes, as would many radiologists (including, one would imagine, the leadership of radiology academies, who are already on record as asking for exactly this type of AI autonomy to break free from the shackles of crippling backlogs).

The RCR for instance may want to consider changing its standards for interpretation and reporting of imaging investigations (below) to allow for autonomous AI systems to produce a report without human validation.

Currently these standards state that a human should always be checking an AI output.

Finally, we must consider the patients — are the general public happy to accept AI autonomy in their diagnosis?

General sympathy towards an AI-driven healthcare system may be positive, but there is much work to be done to actually get there.

Vendors and purchasers need to convince the public too — they are after all the very reason anyone is doing this in the first place.

Currently, under GDPR, patients have to give explicit consent for their data to be used for automated decision-making (unless allowed for under domestic law, which it currently isn’t in this case), and they should be offered simple ways to request human intervention or to challenge a decision made by an AI system.

This will certainly make practical implementation of autonomous systems more complicated, but not impossible.

Too good to be true?

Oxipit have truly made a bold step forward, daring to go beyond where others have yet to tread. They have achieved a breakthrough IIb regulatory approval for autonomous AI, although technically only fully autonomous for normal CXR cases, not abnormal CXR cases. This should not be underestimated, and my congratulations go out to their team. Lithuania should be proud!

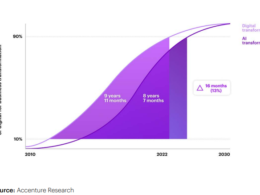

The practical realities, legal ramifications and questions still remain however. With so many different legislative frameworks out there, from GDPR to IR(ME)R to EURATOM to the CQC, they will still have a battle ahead before we see this technology fully embraced by healthcare systems. By carefully arguing the merits of reducing human reporting workload (of which they are claiming a 10–40% reduction) as well as the presumed financial and safety impacts, there may well be a case for legislative change.

For now, while the first autonomous AI is finally available — and I’m certain that others will quickly follow suit — how fast the relevant laws can be circumnavigated or changed remains to be seen. This news of autonomous AI is good — but not quite true just yet…

Originally published at https://hardianhealth.com on April 8, 2022.

Disclaimer: Neither the author nor Hardian Health have any financial or commercial affiliation with Oxipit. This article has not been reviewed or vetted by Oxipit or any of its employees.

Names mentioned

Dr Nicola Strickland, former President of the UK Royal College of Radiologists
