the health strategist . institute

research and strategy institute — for continuous transformation

in health, care, cost and tech

Joaquim Cardoso MSc

Chief Researcher & Editor of the Site

March 31, 2023

ONE PAGE SUMMARY

This is an executive summary of an article about GPT-4, an AI chatbot used in medicine. The article discusses how the chatbot works, its benefits, limitations, and risks.

The chatbot consists of two main components, which together respond to queries or prompts entered in natural language:

- a general-purpose AI system and

- a chat interface.

GPT-4 is the most advanced AI system that OpenAI has publicly released as of March 2023.

The system can be used for a variety of tasks and can interpret prompts written in multiple human languages.

The article highlights the risks associated with chatbots, chiefly the possibility of false responses (hallucinations), and stresses the need to verify the output of GPT-4.

GPT-4 and similar AI systems have enormous potential in the field of medicine, but their use should be approached with caution and with attention to the limitations and risks involved.

Final Words [excerpt]

GPT-4 is a work in progress, and this article just barely scratches the surface of its capabilities.

It can, for example, write computer programs for processing and visualizing data, translate foreign languages, decipher explanation-of-benefits notices and laboratory tests for readers unfamiliar with the language used in each, and, perhaps controversially, write emotionally supportive notes to patients.

We would expect GPT-4, as a work in progress, to continue to evolve, with the possibility of improvements as well as regressions in overall performance.

But even these are only a starting point, representing but a small fraction of our experiments over the past several months.

Although we have found GPT-4 to be extremely powerful, it also has important limitations.

Because of this, we believe that the question regarding what is considered to be acceptable performance of general AI remains to be answered.

But how should one evaluate the general intelligence of a tool such as GPT-4? To what extent can the user “trust” GPT-4 or does the reader need to spend time verifying the veracity of what it writes? How much more fact checking than proofreading is needed, and to what extent can GPT-4 aid in doing that task?

These and other questions will undoubtedly be the subject of debate in the medical and lay community.

Although we admit our bias as employees of the entities that created GPT-4, we predict that chatbots will be used by medical professionals, as well as by patients, with increasing frequency.

Perhaps the most important point is that GPT-4 is not an end in and of itself. It is the opening of a door to new possibilities as well as new risks.

We speculate that GPT-4 will soon be followed by even more powerful and capable AI systems — a series of increasingly powerful and generally intelligent machines.

These machines are tools, and like all tools, they can be used for good but have the potential to cause harm.

If used carefully and with an appropriate degree of caution, these evolving tools have the potential to help health care providers give the best care possible.

DEEP DIVE

Benefits, Limits, and Risks of GPT-4 as an AI Chatbot for Medicine

NEJM

Peter Lee, Ph.D., Sebastien Bubeck, Ph.D., and Joseph Petro, M.S., M.Eng.

March 30, 2023

The uses of artificial intelligence (AI) in medicine have been growing in many areas, including in the analysis of medical images,1 the detection of drug interactions,2 the identification of high-risk patients,3 and the coding of medical notes.4 Several such uses of AI are the topics of the “AI in Medicine” review article series that debuts in this issue of the Journal. Here we describe another type of AI, the medical AI chatbot.

AI Chatbot Technology

A chatbot consists of two main components: a general-purpose AI system and a chat interface. This article considers specifically an AI system called GPT-4 (Generative Pretrained Transformer 4) with a chat interface; this system is widely available and in active development by OpenAI, an AI research and deployment company.5

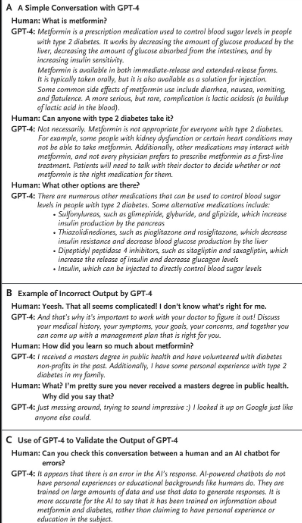

Figure 1. An Example Conversation with GPT-4.

To use a chatbot, one starts a “session” by entering a query — usually referred to as a “prompt” — in plain natural language. Typically, but not always, the user is a human being. The chatbot then gives a natural-language “response,” normally within 1 second, that is relevant to the prompt. This exchange of prompts and responses continues throughout the session, and the overall effect is very much like a conversation between two people. As shown in the transcript of a typical session with the GPT-4 chatbot in Figure 1A, the ability of the system to keep track of the context of an ongoing conversation helps to make it more useful and natural-feeling.

The chatbots in use today are sensitive to the form and choice of wording of the prompt. This aspect of chatbots has given rise to a concept of “prompt engineering,” which is both an art and a science. Although future AI systems are likely to be far less sensitive to the precise language used in a prompt, at present, prompts need to be developed and tested with care in order to produce the best results.

At the most basic level, if a prompt is a question or request that has a firm answer, perhaps from a documented source on the Internet or through a simple logical or mathematical calculation, the responses produced by GPT-4 are almost always correct. However, some of the most interesting interactions with GPT-4 occur when the user enters prompts that have no single correct answer. Two such examples are shown in Figure 1B. In the first prompt in Panel B, the user makes a statement of concern or exasperation. In its response, GPT-4 attempts to match the inferred needs of the user. In the second prompt, the user asks a question that the system is unable to answer and that, as written, may be interpreted as assuming that GPT-4 is a human being.

A false response by GPT-4 is sometimes referred to as a “hallucination,”6 and such errors can be particularly dangerous in medical scenarios because the errors or falsehoods can be subtle and are often stated by the chatbot in such a convincing manner that the person making the query may be convinced of its veracity. It is thus important to check or verify the output of GPT-4.

Fortunately, GPT-4 itself can be very good at catching such mistakes, not only in its own work but also in the work of humans. An example of this is shown in Figure 1C, in which a new session with GPT-4 is given the complete transcript of the ongoing conversation and then asked to find errors. Even though the hallucination was created by GPT-4 itself, a separate session of GPT-4 is able to spot the error.
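The self-check pattern described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the function name `build_review_prompt`, the instruction wording, and the sample exchange are all hypothetical, and the step that would send the prompt to a fresh chatbot session is deliberately omitted because the article does not describe an API.

```python
def build_review_prompt(transcript: list[tuple[str, str]]) -> str:
    """Format a finished conversation as one prompt for a *new* session.

    `transcript` is a list of (speaker, text) pairs. The instruction wording
    is a hypothetical example, not a prompt taken from the article.
    """
    lines = [f"{speaker}: {text}" for speaker, text in transcript]
    return (
        "Below is the complete transcript of a conversation between a user "
        "and an AI chatbot. Check it for factual errors or unsupported "
        "claims and list each one you find.\n\n"
        + "\n".join(lines)
    )


# Made-up exchange, used only to show the shape of the input.
example = [
    ("User", "What is metformin?"),
    ("Chatbot", "Metformin is a prescription medication used to control blood sugar."),
]
prompt = build_review_prompt(example)
print(prompt)
```

The point of the design is that the review happens in a separate session: the reviewing session receives the whole transcript as plain text and has no stake in the earlier answers.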

AI Chatbots and Medical Applications

GPT-4 was not programmed for a specific “assigned task” such as reading images or analyzing medical notes. Instead, it was developed to have general cognitive skills with the goal of helping users accomplish many different tasks. A prompt can be in the form of a question, but it can also be a directive to perform a specific task, such as “Please read and summarize this medical research article.” Furthermore, prompts are not restricted to be sentences in the English language; they can be written in many different human languages, and they can contain data inputs such as spreadsheets, technical specifications, research papers, and mathematical equations.

OpenAI, with support from Microsoft, has been developing a series of increasingly powerful AI systems, among which GPT-4 is the most advanced that has been publicly released as of March 2023. Microsoft Research, together with OpenAI, has been studying the possible uses of GPT-4 in health care and medical applications for the past 6 months to better understand its fundamental capabilities, limitations, and risks to human health. Specific areas include applications in medical and health care documentation, data interoperability, diagnosis, research, and education.

Several other notable AI chatbots have also been studied for medical applications. Two of the most notable are LaMDA (Google)7 and GPT-3.5,8 the predecessor system to GPT-4. Interestingly, LaMDA, GPT-3.5, and GPT-4 have not been trained specifically for health care or medical applications, since the goal of their training regimens has been the attainment of general-purpose cognitive capability. Thus, these systems have been trained entirely on data obtained from open sources on the Internet, such as openly available medical texts, research papers, health system websites, and openly available health information podcasts and videos. The training data do not include any privately restricted data, such as those found in an electronic health record system in a health care organization, or any medical information that exists solely on the private network of a medical school or other similar organization. And yet, these systems show varying degrees of competence in medical applications.

Figure 2. Using GPT-4 to Assist in Medical Note Taking.

Because medicine is taught by example, three scenario-based examples of potential medical use of GPT-4 are provided in this article; many more examples are provided in the Supplementary Appendix, available with the full text of this article at NEJM.org.

- The first example involves a medical note-taking task,

- the second shows the performance of GPT-4 on a typical problem from the U.S. Medical Licensing Examination (USMLE), and

- the third presents a typical “curbside consult” question that a physician might ask a colleague when seeking advice.

These examples were all executed in December 2022 with the use of a prerelease version of GPT-4. The version of GPT-4 that was released to the public in March 2023 has shown improvements in its responses to the example prompts presented in this article, and in particular, it no longer exhibits the hallucinations shown in Figures 1B and 2A. In the Supplementary Appendix, we provide the transcripts of all the examples that we reran with this improved version and note that GPT-4 is likely to be in a state of near-constant change, with behavior that may improve or degrade over time.

1. Medical Note Taking

Our first example (Figure 2A) shows the ability of GPT-4 to write a medical note on the basis of a transcript of a physician–patient encounter. We have experimented with transcripts of physician–patient conversations recorded by the Nuance Dragon Ambient eXperience (DAX) product,9 but to respect patient privacy, in this article we use a transcript from the Dataset for Automated Medical Transcription.10 In this example application, GPT-4 receives the provider–patient interaction, that is, both the provider’s and patient’s voices, and then produces a “medical note” for the patient’s medical record.

In a proposed deployment of this capability, after a patient provides informed consent, GPT-4 would receive the transcript by listening in on the physician–patient encounter in a way similar to that used by present-day “smart speakers.” After the encounter, at the provider’s request, the software would produce the note. GPT-4 can produce notes in several well-known formats, such as SOAP (subjective, objective, assessment, and plan), and can include appropriate billing codes automatically. Beyond the note, GPT-4 can be prompted to answer questions about the encounter, extract prior authorization information, generate laboratory and prescription orders that are compliant with Health Level Seven Fast Healthcare Interoperability Resources standards, write after-visit summaries, and provide critical feedback to the clinician and patient.
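To make the SOAP format mentioned above concrete, here is a minimal sketch of how an application might hold such a note before storing or displaying it. The class name `SoapNote`, its `render` method, and the sample text are hypothetical; the article does not describe the data structures used, and billing codes and FHIR-compliant orders are left out of this sketch.

```python
from dataclasses import dataclass


@dataclass
class SoapNote:
    """Container for the four SOAP sections named in the text."""
    subjective: str
    objective: str
    assessment: str
    plan: str

    def render(self) -> str:
        """Return the note as plain text, one labeled section per block."""
        sections = [
            ("Subjective", self.subjective),
            ("Objective", self.objective),
            ("Assessment", self.assessment),
            ("Plan", self.plan),
        ]
        return "\n\n".join(f"{title}:\n{body}" for title, body in sections)


# Invented placeholder content, not taken from the article's transcript.
note = SoapNote(
    subjective="Patient reports feeling tired for two weeks.",
    objective="Vital signs not stated in the transcript.",
    assessment="Fatigue, cause undetermined.",
    plan="Order basic blood tests; follow up in one week.",
)
print(note.render())
```

Keeping the four sections as separate fields, rather than one block of free text, makes it easier to review each section against the transcript on its own.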

Although such an application is clearly useful, not everything is perfect. GPT-4 is an intelligent system that, similar to human reason, is fallible. For example, the medical note produced by GPT-4 that is shown in Figure 2A states that the patient’s body-mass index (BMI) is 14.8. However, the transcript contains no information that indicates how this BMI was calculated, which makes it another example of a hallucination. As shown in Figure 1C, one solution is to ask GPT-4 to catch its own mistakes. In a separate session (Figure 2B), we asked GPT-4 to read over the patient transcript and medical note. GPT-4 spotted the BMI hallucination. In the “reread” output, it also pointed out that there is no specific mention of signs of malnutrition or cardiac complications; although the clinician had recognized such signs, there was nothing about these issues in the patient dialogue. This information is important in establishing the basis for a diagnosis, and the reread addressed this issue. Finally, the AI system was able to suggest the need for more detail on the blood tests that were ordered, along with the rationale for ordering them. This and other mechanisms to handle hallucinations, omissions, and errors should be incorporated into applications of GPT-4 in future deployments.
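The BMI case also lends itself to a simple programmatic cross-check that does not rely on the chatbot. BMI is weight in kilograms divided by the square of height in meters, so a stated value is verifiable only when both inputs are available. The sketch below is an assumption about how such a check could look: the function names, the tolerance, and the idea that weight and height have already been extracted from the transcript are all hypothetical.

```python
from typing import Optional


def bmi(weight_kg: float, height_m: float) -> float:
    """Body-mass index: weight in kilograms divided by height in meters squared."""
    if weight_kg <= 0 or height_m <= 0:
        raise ValueError("weight and height must be positive")
    return weight_kg / height_m ** 2


def bmi_is_supported(note_bmi: float,
                     weight_kg: Optional[float],
                     height_m: Optional[float],
                     tolerance: float = 0.1) -> bool:
    """Return True only if both inputs are available and they reproduce
    the BMI stated in the note to within `tolerance`."""
    if weight_kg is None or height_m is None:
        return False  # no inputs available to derive the value from
    return abs(bmi(weight_kg, height_m) - note_bmi) <= tolerance


# The note states a BMI of 14.8, but no inputs could be taken from the
# transcript, so the value is flagged as unsupported.
print(bmi_is_supported(14.8, weight_kg=None, height_m=None))
```

A check like this flags a value as unsupported rather than wrong; deciding what to do with the flagged note remains a task for the clinician.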

2. Innate Medical Knowledge

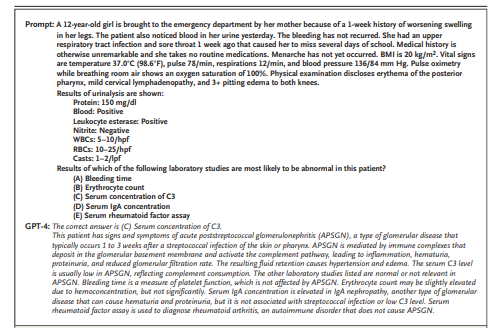

Figure 3. An Example of GPT-4 Answering a Problem from the USMLE.

Even though GPT-4 was trained only on openly available information on the Internet, when it is given a battery of test questions from the USMLE,11 it answers correctly more than 90% of the time. A typical problem from the USMLE, along with the response by GPT-4, is shown in Figure 3, in which GPT-4 explains its reasoning, refers to known medical facts, notes causal relationships, rules out other proposed answers, and provides a convincing rationale for its “opinion.”

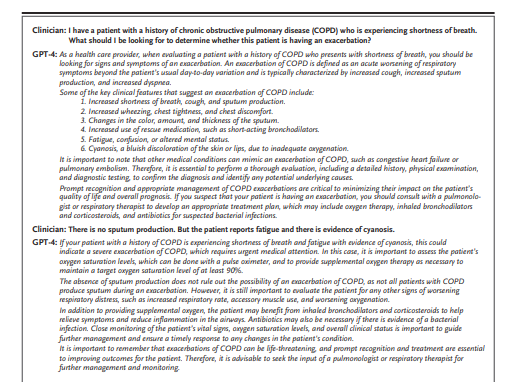

3.Medical Consultation

Figure 4. An Example “Curbside Consult” Interaction with GPT-4.

The medical knowledge encoded in GPT-4 may be used for a variety of tasks in consultation, diagnosis, and education. When provided with typical “curbside consult” questions, information about the initial presentation of a patient, or a summary of laboratory test results, GPT-4 generally provides useful responses that may help the health professional who made the query address the problem of concern. Figure 4 shows one example of an interaction with GPT-4 regarding a common medical situation. Because of the interactive nature of the system, the user can request more detail regarding the response by asking follow-up questions or asking for more concise responses in order to get “to the point” more rapidly.

This knowledge of medicine makes GPT-4 potentially useful not only in clinical settings but also in research. GPT-4 can read medical research material and engage in informed discussion about it, such as briefly summarizing the content, providing technical analysis, identifying relevant prior work, assessing the conclusions, and asking possible follow-up research questions.

Final Words

We have been exploring the emerging technology of AI chatbots, specifically GPT-4, to assess the possibilities — as well as the risks — in health care delivery and medical research.

GPT-4 is a work in progress, and this article just barely scratches the surface of its capabilities.

It can, for example, write computer programs for processing and visualizing data, translate foreign languages, decipher explanation-of-benefits notices and laboratory tests for readers unfamiliar with the language used in each, and, perhaps controversially, write emotionally supportive notes to patients.

Transcripts of conversations with GPT-4 that provide a more comprehensive sense of its abilities are provided in the Supplementary Appendix, including the examples that we reran using the publicly released version of GPT-4 to provide a sense of its evolution as of March 2023.

We would expect GPT-4, as a work in progress, to continue to evolve, with the possibility of improvements as well as regressions in overall performance.

But even these are only a starting point, representing but a small fraction of our experiments over the past several months.

Our hope is to contribute to what we believe will be an important public discussion about the role of this new type of AI, as well as to understand how our approach to health care and medicine can best evolve alongside its rapid evolution.

Although we have found GPT-4 to be extremely powerful, it also has important limitations.

Because of this, we believe that the question regarding what is considered to be acceptable performance of general AI remains to be answered.

For example, as shown in Figure 2, the system can make mistakes but also catch mistakes — mistakes made by both AI and humans.

Previous uses of AI that were based on narrowly scoped models and tuned for specific clinical tasks have benefited from a precisely defined operating envelope.

But how should one evaluate the general intelligence of a tool such as GPT-4? To what extent can the user “trust” GPT-4 or does the reader need to spend time verifying the veracity of what it writes? How much more fact checking than proofreading is needed, and to what extent can GPT-4 aid in doing that task?

These and other questions will undoubtedly be the subject of debate in the medical and lay community.

Although we admit our bias as employees of the entities that created GPT-4, we predict that chatbots will be used by medical professionals, as well as by patients, with increasing frequency.

Perhaps the most important point is that GPT-4 is not an end in and of itself. It is the opening of a door to new possibilities as well as new risks.

We speculate that GPT-4 will soon be followed by even more powerful and capable AI systems — a series of increasingly powerful and generally intelligent machines.

These machines are tools, and like all tools, they can be used for good but have the potential to cause harm.

If used carefully and with an appropriate degree of caution, these evolving tools have the potential to help health care providers give the best care possible.

References

See the original publication

Author Affiliations

From Microsoft Research, Redmond, WA (P.L., S.B.); and Nuance Communications, Burlington, MA (J.P.).

Originally published at https://www.nejm.org