the health strategist

knowledge platform

health management strategy, engineering, and technology

for continuous transformation

Joaquim Cardoso MSc.

Chief Research Officer (CRO), Chief Editor

Chief Strategy Officer (CSO) and Senior Advisor

August 4, 2023

What is the message?

The conversational AI-powered chatbots that have come to Internet search engines, such as Google’s Bard and Microsoft’s Bing, look increasingly set to change scientific search too. Scopus, Dimensions and Web of Science are introducing conversational AI search.

Key takeaways:

- The field of scientific search is experiencing a transformative shift as major science search engines incorporate conversational AI-powered chatbots. Dutch publishing giant Elsevier has released Scopus AI, a ChatGPT-powered AI interface for some users of its Scopus database, aimed at helping researchers quickly get summaries of unfamiliar research topics.

- The AI generates fluent summary paragraphs about research topics, along with cited references and further exploration options. However, concerns about the reliability of large language models (LLMs) for scientific search persist, as they may produce factual errors, biases, or even non-existent references.

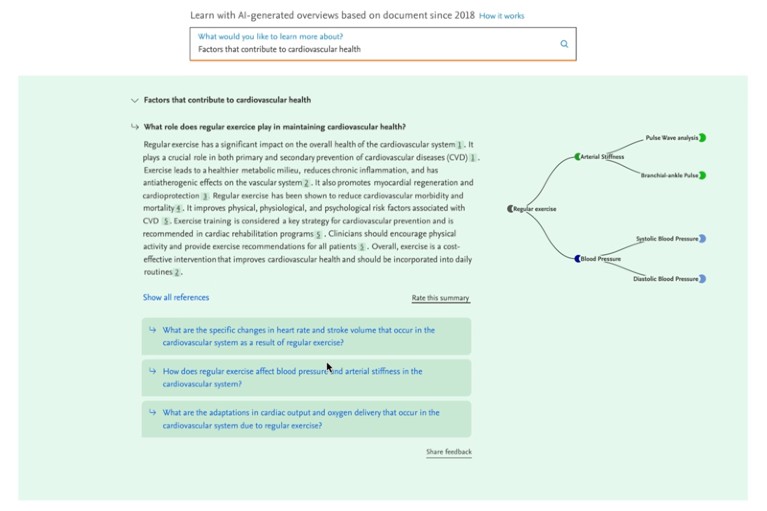

- To address these concerns, Scopus AI is constrained to generate answers only from a small set of research abstracts (five or ten). The AI doesn’t find these abstracts itself; instead, a conventional search engine retrieves them in response to the user’s query. The tool is also limited to articles published since 2018, to pick up recent papers, and is instructed to cite the returned abstracts and to say when they contain no relevant information. This minimizes mistakes but cannot eliminate them.

- Elsevier has taken measures to minimize unpredictability by choosing a low setting for the bot’s ‘temperature,’ which controls how often it deviates from the most plausible words in its response.

- While Elsevier is taking steps to prevent misuse, there is a possibility of users plagiarizing the AI-generated paragraphs for their own papers. The company provides guidance to researchers to use the summaries responsibly, similar to guidance issued by funders and publishers regarding LLM usage in academic work.

- Digital Science, another major firm, announced the introduction of an AI assistant for its Dimensions scientific database. Like Scopus AI, this assistant retrieves relevant articles through a search engine and uses an OpenAI GPT model to generate summary paragraphs around the top-ranked abstracts.

- Both Elsevier and Digital Science are introducing these AI tools gradually, working with researchers, funders, and users to build trust and to assess where LLMs are actually useful in scientific search. Observers such as librarian Aaron Tay look forward to tools that apply LLMs to the full text of papers, not just abstracts, which could provide more detailed information about research.

- Clarivate, the company behind Web of Science, has also partnered with AI21 Labs and is working on adding LLM-powered search to its database, though no specific timeline has been given for its release.

- The adoption of conversational AI search in major science databases promises to enhance the research experience by providing quick and informative summaries of complex scientific topics.

- However, continuous monitoring and improvement of AI reliability and ethical usage guidelines will be essential to ensure the responsible and effective integration of these technologies into the scientific community.

DEEP DIVE

ChatGPT-like AIs are coming to major science search engines

Richard Van Noorden

August 2, 2023

The conversational AI-powered chatbots that have come to Internet search engines, such as Google’s Bard and Microsoft’s Bing, look increasingly set to change scientific search too. On 1 August, Dutch publishing giant Elsevier released a ChatGPT-powered AI interface for some users of its Scopus database, while British firm Digital Science announced a closed trial of an AI large language model (LLM) assistant for its Dimensions database. Meanwhile, US firm Clarivate says it’s also working on bringing LLMs to its Web of Science database.

LLMs for scientific search aren’t new: start-up firms such as Elicit, Scite, and Consensus already have such AI systems, which help to summarize a field’s findings or identify top studies, relying on free science databases or (in Scite’s case) access to paywalled research articles through partnerships with publishers. But firms that own large proprietary databases of scientific abstracts and references are now joining the AI rush.

Elsevier’s chatbot, called Scopus AI and launched as a pilot, is intended as a light, playful tool to help researchers quickly get summaries of research topics they’re unfamiliar with, says Maxim Khan, an Elsevier executive in London who oversaw the tool’s development. In response to a natural-language question, the bot uses a version of the LLM GPT-3.5 to return a fluent summary paragraph about a research topic, together with cited references, and further questions to explore.

A concern about LLMs for search — especially scientific search — is that they are unreliable. LLMs don’t understand the text they produce; they work simply by spitting out words that are stylistically plausible. Their output can contain factual errors and biases and, as academics have quickly found, can make up non-existent references.

Scopus AI is therefore constrained: it has been prompted to generate its answer only by reference to five or ten research abstracts. The AI doesn’t find those abstracts itself; rather, after the user has typed in a query, a conventional search engine returns them as relevant to the question, explains Khan.
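To make the division of labour concrete, here is a minimal sketch of the retrieval half: a toy search engine that ranks abstracts against a query and hands back only the top few (defaulting to five, the lower figure Khan mentions). Elsevier has not said how its conventional search engine ranks results, so the smoothed TF-IDF scoring, the `Abstract` record and the `retrieve` function are all illustrative assumptions, not Scopus internals.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Abstract:
    # Hypothetical record type for illustration; not Scopus's data model.
    doc_id: str
    year: int
    text: str


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, corpus: list[Abstract], k: int = 5) -> list[Abstract]:
    """Return up to k abstracts ranked by a smoothed TF-IDF match score.

    TF-IDF is an assumed stand-in for the unspecified 'conventional search engine'.
    """
    n = len(corpus)
    doc_terms = [Counter(tokenize(a.text)) for a in corpus]
    # Document frequency: iterating a Counter yields each distinct term once.
    df = Counter(term for terms in doc_terms for term in terms)
    query_terms = set(tokenize(query))

    def score(terms: Counter) -> float:
        # Smoothed IDF (+1) keeps terms that occur in every document positive.
        return sum(
            terms[t] * (math.log((n + 1) / (df[t] + 1)) + 1)
            for t in query_terms
            if t in terms
        )

    scored = [(score(terms), a) for a, terms in zip(corpus, doc_terms)]
    # Keep only abstracts that match at least one query term, best first.
    matches = sorted((p for p in scored if p[0] > 0), key=lambda p: p[0], reverse=True)
    return [a for _, a in matches[:k]]


corpus = [
    Abstract("a1", 2020, "CRISPR gene editing in crop plants"),
    Abstract("a2", 2019, "Deep learning for protein structure prediction"),
    Abstract("a3", 2021, "Gene drives and CRISPR for mosquito control"),
]
top = retrieve("CRISPR gene editing", corpus, k=2)
print([a.doc_id for a in top])
```

Only what `retrieve` returns would ever reach the language model; the model never searches the database itself, which is the constraint Khan describes.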

Fake facts

Many other AI search engine systems adopt a similar strategy, notes Aaron Tay, a librarian at Singapore Management University who follows AI search tools. This is sometimes termed retrieval-augmented generation, because the LLM is limited to summarizing relevant information that another search engine retrieves. “The LLM can still occasionally hallucinate or make things up,” says Tay, pointing to research on Internet search AI chatbots, such as Bing and Perplexity, that use a similar technique.
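In retrieval-augmented generation, much of the grounding comes from how the retrieved text is handed to the model. The sketch below assumes a prompt that numbers each abstract, tells the model to answer only from them, to cite by number, and to admit when nothing is relevant, which loosely mirrors the instructions Elsevier says it gives its chatbot. The instruction wording, the `build_prompt` helper and its `min_year=2018` default are hypothetical, and the actual LLM call is deliberately omitted.

```python
from dataclasses import dataclass


@dataclass
class Abstract:
    # Hypothetical record type for illustration.
    doc_id: str
    year: int
    text: str


# Hypothetical instruction text; Elsevier's actual prompt is not public.
INSTRUCTIONS = (
    "Answer the question using ONLY the numbered abstracts below. "
    "Cite each claim with the abstract number in square brackets, e.g. [2]. "
    "If the abstracts contain no relevant information, say so instead of answering."
)


def build_prompt(question: str, abstracts: list[Abstract], min_year: int = 2018) -> str:
    """Assemble a retrieval-augmented prompt from already-retrieved abstracts."""
    # Drop anything older than the cut-off year before numbering.
    recent = [a for a in abstracts if a.year >= min_year]
    if recent:
        context = "\n".join(
            f"[{i}] ({a.year}) {a.text}" for i, a in enumerate(recent, start=1)
        )
    else:
        context = "(no abstracts retrieved)"
    return f"{INSTRUCTIONS}\n\nAbstracts:\n{context}\n\nQuestion: {question}"


question = "What affects sleep quality?"
retrieved = [
    Abstract("x1", 2016, "An older cohort study of sleep and caffeine."),
    Abstract("x2", 2021, "Screen use before bed and sleep quality in adolescents."),
]
print(build_prompt(question, retrieved))
```

Numbering the abstracts gives the model short, checkable citation targets; as Tay notes, though, a model prompted this way can still occasionally make things up.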

Elsevier has limited its AI product to search only for articles published since 2018, so as to pick up recent papers, and has instructed its chatbot to appropriately cite the returned abstracts in its reply, to avoid unsafe or malicious queries, and to state if there’s no relevant information in the abstracts it receives. This can’t eliminate mistakes, but it minimizes them. Elsevier has also cut down the unpredictability of its AI by picking a low setting for the bot’s ‘temperature’, a measure of how often it chooses to deviate from the most plausible words in its response.
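The effect of temperature can be illustrated with the standard softmax-with-temperature formula, $p_i = e^{z_i/T} / \sum_j e^{z_j/T}$, which turns a model's raw scores $z_i$ for candidate next words into probabilities. This is a generic sketch of how the setting is commonly applied; Elsevier has not disclosed its exact value, and the three scores below are made up for illustration.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw next-word scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next words.
logits = [2.0, 1.0, 0.5]
for t in (1.0, 0.2):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At temperature 1.0 the most plausible word gets roughly 63% of the probability; at 0.2 it gets over 99%, so sampling rarely deviates from it. That concentration is why a low setting makes the bot's output more predictable.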

Might users simply copy and paste the bot’s paragraphs into their own papers, effectively plagiarizing the tool? That’s a possibility, says Khan. Elsevier has so far tackled this with guidance that asks researchers to use the summaries responsibly, he says. Khan points out that funders and publishers have issued similar guidance, asking for transparent disclosure if LLMs are used in, for instance, writing papers or conducting peer reviews, or in some cases stating that LLMs shouldn’t be used at all.

For the moment, the tool is being rolled out only to around 15,000 users, a subset of Scopus subscribers, with other researchers invited to contact Elsevier if they want to try it. The firm says it expects a full launch in early 2024.

Full-text analysis

Also on 1 August, Digital Science announced that it was introducing an AI assistant for its large Dimensions scientific database, at the moment only for selected beta testers. As with Scopus AI, after a user types in their question, a search engine first retrieves relevant articles, and an OpenAI GPT model then generates a summary paragraph around the top-ranked abstracts that have been retrieved.

“It’s remarkably similar, funnily enough,” says Christian Herzog, the chief product officer for the firm. (Digital Science is part of Holtzbrinck Publishing Group, the majority shareholder in Nature’s publisher, Springer Nature.)

Dimensions also uses the LLM to provide some more details about relevant papers, including short rephrased summaries of their findings.

Herzog says the firm hopes to release its tool more widely by the end of the year, but is for the moment working with scientists, funders and others who use Dimensions to test where LLMs might be useful — which still remains to be seen. “This is about gradually easing into a new technology and building trust,” he says.

Tay says he’s looking forward to tools that use LLMs on the full text of papers, and not just abstracts. Sites such as Elicit already let users use LLMs to answer detailed questions about a paper’s full text — when the bots have access to it, as with some open-access articles, he notes.

At Clarivate, meanwhile, Bar Veinstein, president of what the firm calls its ‘academia and government segment’, says the company is “working on adding LLM-powered search in Web of Science”, referring to a strategic partnership signed with AI21 Labs, based in Tel Aviv, Israel, that the firms announced in June. Veinstein did not give a timeline for the release of an LLM-based Web of Science tool, however.

Mentioned names

Richard Van Noorden (reporter for Nature)

Bar Veinstein (president of Clarivate’s academia and government segment)

Christian Herzog (chief product officer for Digital Science)

Maxim Khan (Elsevier executive)

Aaron Tay (librarian at Singapore Management University)

Originally published at https://www.nature.com