This is a republication of the article “How Foundation Models Can Advance AI in Healthcare”, published by HAI Stanford. The post is preceded by an Executive Summary by the Editor of the Site.

transformhealth.institute

institute for continuous health transformation

Joaquim Cardoso MSc

Chief Researcher, Editor and Senior Advisor

January 13, 2023

EXECUTIVE SUMMARY

There are several problems with the current approach to using AI in healthcare. These include:

- (1) Difficulties in transitioning from impressive tech demos to deployed AI

- (2) Custom data pulls, ad hoc training sets and manual maintenance

- (3) Relying on large amounts of manually labeled data

- (4) Limited adaptability and generalizability of models

- (5) High cost of developing, deploying and maintaining AI in hospitals

The current paradigm of doing “AI in healthcare,” where developing, deploying, and maintaining a classifier or predictive model for a single clinical task can cost upward of $200,000, is unsustainable.

The authors suggest that foundation models may address these problems by providing a better paradigm for “AI in healthcare.”

A foundation model is a type of AI model that is trained on large amounts of unlabeled data and is highly adaptable to new applications.

The benefits that foundation models can provide are significant:

- (1) improved adaptability with fewer manually labeled examples, and modular, reusable AI,

- (2) making multimodality the new normal,

- (3) new interfaces for human-AI collaboration, and

- (4) easing the cost of developing, deploying, and maintaining AI in hospitals.

By lowering the time and energy required to train models, we can focus on ensuring that their use leads to a fair allocation of resources, with the potential to meaningfully improve clinical care and efficiency and to create a new, supercharged framework for AI in healthcare.

The authors also discuss the challenges in transitioning from tech demos to deployed AI in healthcare and the potential for foundation models to provide a better paradigm for “AI in healthcare.”

Outline of the publication

- What Is a “Foundation Model”?

- Why Should Healthcare Care?

- What Are the Benefits of Foundation Models?

- Making Multimodality the New Normal

- New Interfaces for Human-AI Collaboration

- Easing the Cost of Developing, Deploying, and Maintaining AI in Hospitals

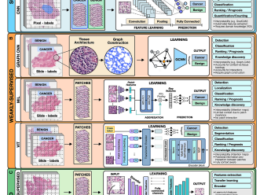

INFOGRAPHIC

DEEP DIVE

How Foundation Models Can Advance AI in Healthcare

This new class of models may lead to more affordable, easily adaptable health AI.

HAI Stanford

Jason Fries, Ethan Steinberg, Scott Fleming, Michael Wornow, Yizhe Xu, Keith Morse, Dev Dash, Nigam Shah

Dec 15, 2022

The past year has seen a dazzling array of advancements in the development of artificial intelligence (AI) for text, image, video, and other modalities.

GPT-3, BLOOM, and Stable Diffusion have captured the public imagination with their ability to write poems, summarize articles, solve math problems, and translate textual descriptions into images and even video.

AI systems such as ChatGPT can answer complex questions with surprising fluency, and CICERO performs as well as humans in Diplomacy, a game which requires negotiating and strategizing with other players using natural language.

These examples highlight the growing role of foundation models — AI models trained on massive, unlabeled data and highly adaptable to new applications — in underpinning AI innovations.

In fact, the Economist observed that the rise of foundation models is shifting AI into its “industrial age” by providing general-purpose technologies that drive long-term productivity and growth.

However, in healthcare, transitioning from impressive tech demos to deployed AI has been challenging.

Despite the promise of AI to improve clinical outcomes, reduce costs, and meaningfully improve patient lives, very few models are deployed.

For example, of the roughly 593 models developed for predicting outcomes among COVID-19 patients, practically none are deployed for use in patient care.

Deployment efforts are further hampered by the way models are created and used in healthcare: custom data pulls, ad hoc training sets, and manual maintenance and monitoring regimes in healthcare IT.

In this post we discuss the opportunities foundation models offer in terms of a better paradigm of doing “AI in healthcare.”

First, we outline what foundation models are and their relevance to healthcare.

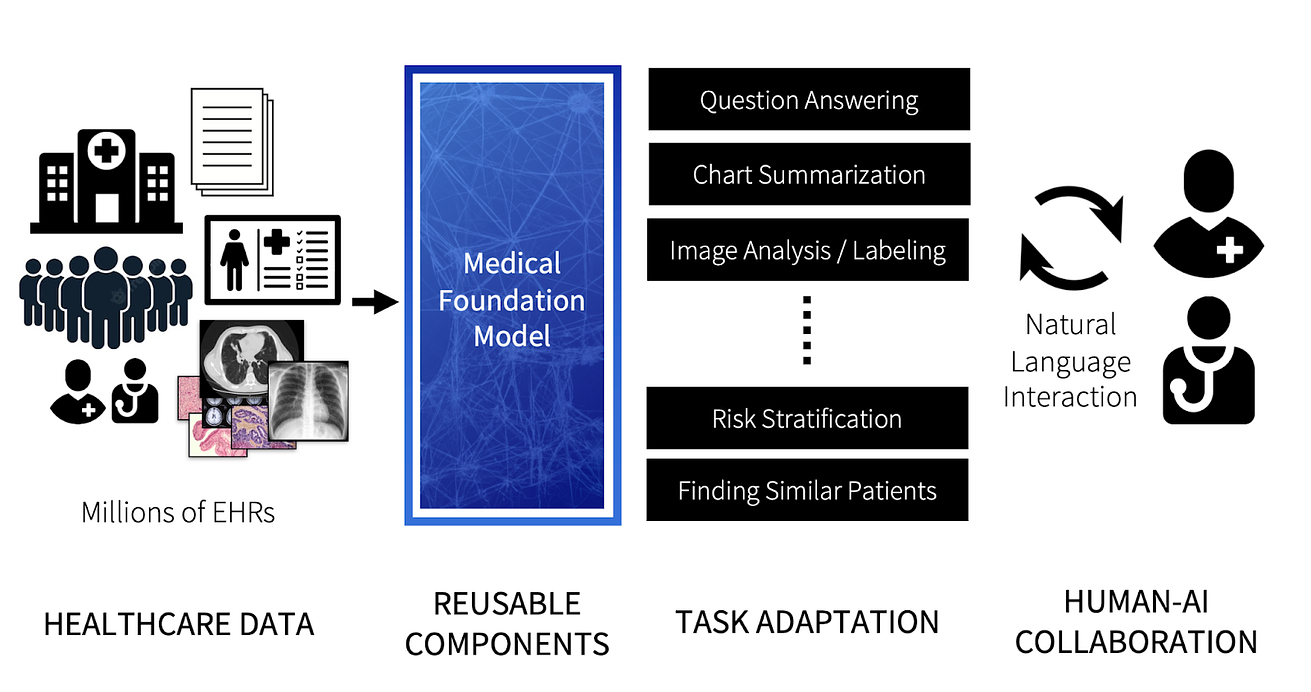

Then we highlight what we believe are key opportunities provided by the next generation of medical foundation models, specifically:

- AI Adaptability with Fewer Manually Labeled Examples

- Modular, Reusable, and Robust AI

- Making Multimodality the New Normal

- New Interfaces for Human-AI Collaboration

- Easing the Cost of Developing, Deploying, and Maintaining AI in Hospitals

What Is a “Foundation Model”?

“Foundation model” is a recent term, coined in 2021 by Bommasani et al., to identify a class of AI models that draw on classic ideas from deep learning with two key differences:

1. Learning from large amounts of unlabeled data:

Prior deep learning approaches required learning from large, manually labeled datasets to reach high classification accuracy.

For example, early deep learning models for skin cancer and diabetic retinopathy classification required almost 130,000 clinician-labeled images.

Foundation models use advances in self-supervised learning in a process called “pretraining,” which involves a simple learning objective such as predicting the next word in a sentence or reconstructing patches of masked pixels in an image.
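
To make the idea of a self-supervised objective concrete, here is a minimal Python sketch, assuming whitespace tokenization and illustrative helper names (next_token_pairs, masked_pairs) that do not come from any particular library. It only shows how training targets are derived from the unlabeled text itself; an actual foundation model would use a subword tokenizer and a neural network trained to predict these targets, and image models apply the same masking idea to pixel patches.

```python
from typing import Dict, List, Set, Tuple


def next_token_pairs(tokens: List[str]) -> List[Tuple[List[str], str]]:
    """Turn an unlabeled token sequence into (context, next-token) pairs.

    The target for each pair is simply the token that follows the context,
    so no human annotation is required.
    """
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def masked_pairs(
    tokens: List[str], mask_positions: Set[int], mask: str = "[MASK]"
) -> Tuple[List[str], Dict[int, str]]:
    """Hide the tokens at mask_positions; the hidden tokens become the targets."""
    corrupted = [mask if i in mask_positions else t for i, t in enumerate(tokens)]
    targets = {i: tokens[i] for i in sorted(mask_positions)}
    return corrupted, targets


# Hypothetical snippet of unlabeled clinical text.
tokens = "patient admitted with chest pain".split()
pairs = next_token_pairs(tokens)
print(pairs[0])  # (['patient'], 'admitted')
corrupted, targets = masked_pairs(tokens, {1, 3})
print(corrupted)  # ['patient', '[MASK]', 'with', '[MASK]', 'pain']
print(targets)    # {1: 'admitted', 3: 'chest'}
```

Every sentence in a large text corpus yields many such pairs for free, which is why this kind of objective scales to very large, unlabeled datasets.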

While this simplicity enables training foundation models with billions of learnable parameters, it also requires large, unlabeled datasets and substantial computational resources.

GPT-3, for instance, was trained using 45TB of text, and BLOOM took 1 million GPU hours to train using the Jean Zay supercomputer — the equivalent of over 100 years for one Nvidia A100 GPU.
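
The “over 100 years” comparison follows from simple arithmetic, assuming a single GPU running continuously, 24 hours a day, over 365-day years:

```latex
\[
\frac{10^{6}\ \text{GPU-hours}}{24\ \tfrac{\text{hours}}{\text{day}} \times 365\ \tfrac{\text{days}}{\text{year}}}
= \frac{10^{6}}{8760}\ \text{years} \approx 114\ \text{years}
\]
```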

2. Adaptability with better sample efficiency:

Foundation models learn useful patterns during pretraining and encode that information into a set of model weights.

This pretrained model then serves as the foundation for rapidly adapting the model for new tasks via transfer learning.

Transfer learning itself is not new, but foundation models make it far more sample efficient than prior AI approaches.

This means models can be rapidly adapted to new tasks using fewer labeled examples, which is critical in many medical settings where diseases or outcomes of interest may be rare or complicated to label at scale.
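
One common way to carry out the adaptation step is to keep the pretrained model frozen and train only a small task-specific head on its outputs (a “linear probe”; fine-tuning some or all of the pretrained weights is another option). The minimal Python sketch below assumes this setup. The frozen_encoder function, its keyword vocabulary, and the labeled notes are hypothetical stand-ins: a real foundation model would supply learned embeddings, so the sketch illustrates the mechanics of adaptation rather than the sample-efficiency gains themselves.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical vocabulary for the toy encoder below (not from any real model).
KEYWORDS = ["fever", "hypotension", "lactate", "routine", "follow-up"]


def frozen_encoder(note: str) -> List[float]:
    """Stand-in for a pretrained foundation model whose weights stay frozen.

    A real model would return a learned embedding; this toy version returns a
    keyword-indicator vector so the example runs without any model weights.
    """
    words = set(note.lower().split())
    return [1.0 if k in words else 0.0 for k in KEYWORDS]


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Logistic output of the task-specific head."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def train_head(
    data: List[Tuple[str, int]], lr: float = 0.5, epochs: int = 200
) -> Tuple[List[float], float]:
    """Fit only a small logistic-regression head on top of frozen embeddings."""
    xs = [frozen_encoder(note) for note, _ in data]  # encoder is never updated
    ys = [label for _, label in data]
    w, b = [0.0] * len(KEYWORDS), 0.0
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            g = predict(w, b, x) - y  # gradient of log-loss w.r.t. the logit
            w = [wi - lr * g * xi for wi, xi in zip(w, x)]
            b -= lr * g
    return w, b


# A handful of hypothetical labeled notes (1 = flagged, 0 = not flagged).
labeled = [
    ("fever hypotension lactate", 1),
    ("routine follow-up", 0),
    ("fever lactate", 1),
    ("routine visit", 0),
]
w, b = train_head(labeled)
print(predict(w, b, frozen_encoder("lactate elevated with fever")) > 0.5)  # True
print(predict(w, b, frozen_encoder("routine follow-up visit")) > 0.5)      # False
```

Because only the head's few parameters are learned, a small labeled set can be enough, provided the frozen representation already separates the cases of interest.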

Why Should Healthcare Care?

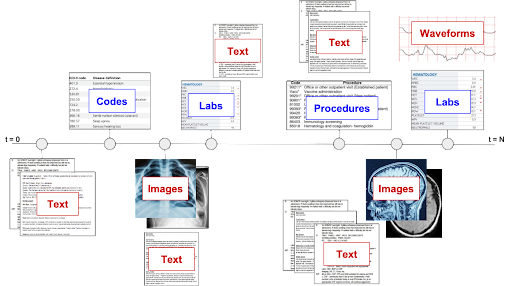

It is widely held that information contained within electronic health records (EHRs), in both coded forms such as International Classification of Diseases (ICD) and Current Procedural Terminology (CPT) codes and unstructured forms such as text and images, can be used to learn classification, prediction, or survival models that assist in diagnosis or enable proactive intervention.

The promise is clear.

However, despite good predictive performance, models trained on EHR data do not translate into clinical gains in the form of better care or lower cost, leading to a gap referred to as an AI chasm.

There are also concerns that current models are not useful, reliable, or fair and that these failures remain hidden until errors due to bias or denial of healthcare services incite public outcry.

What’s more, both the creation and the management of models that guide care remain artisanal and costly.

Learning a model requires custom data extracts that cost upward of $200,000, end-to-end projects cost over $300,000, and each model and project incurs downstream maintenance expenses that are largely unknown and unaccounted for.

Simply put, the total cost of ownership of “models” in healthcare is too high and likely rising due to new reporting guidelines, regulation, and practice recommendations — for which adherence rates remain low.

If we can reduce the time and energy spent on training models, we can then focus on creating model-guided care workflows and ensuring that models are useful, reliable, and fair — and informed by the clinical workflows in which they operate.

What Are the Benefits of Foundation Models?

Foundation models provide several advantages that can help narrow the AI chasm in healthcare.

1. Adaptability with Fewer Labeled Examples

2. Modular, Reusable, and Robust AI

1. Adaptability with Fewer Labeled Examples

In healthcare, a model is typically trained for a single purpose like sepsis prediction and distributed as install-anywhere software.

These models are trained to perform a classification or prediction task using a mix of biological inputs (such as laboratory test results, which are more stable across patient populations) and operational inputs (such as care patterns, which are variable and tend to be hospital-specific).

But models often have poor generalization, which in turn limits their use.

Epic, a top EHR vendor, recently began retraining its sepsis model on a hospital’s local data before deployment after the algorithm was widely criticized for poor performance.

The need to retrain every model for the specific patient population and hospital where it will be used creates cost, complexity, and personnel barriers to using AI.

This is where foundation models can provide a mechanism for rapidly and inexpensively adapting models for local use.

Rather than specializing in a single task, foundation models capture a wide breadth of knowledge from unlabeled data.

Then, instead of training models from scratch, practitioners can adapt an existing foundation model, a process that requires substantially less labeled training data.

For example, studies of the current generation of medical foundation models have reported 10x reductions in training data requirements when adapting to a new task.

For clinical natural language extraction tasks, variations of large foundation models like GPT-3 can achieve strong performance using only a single training example.
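
With large language models such as GPT-3, a single training example is often supplied in the prompt itself rather than through weight updates (one-shot, in-context learning). The minimal Python sketch below assembles such a prompt for a hypothetical medication-extraction task; the function name, instruction wording, “Note:/Medications:” layout, and example notes are all assumptions, and the call to an actual model is deliberately omitted.

```python
def build_one_shot_prompt(
    example_note: str, example_answer: str, new_note: str
) -> str:
    """Assemble a one-shot extraction prompt: one worked example, then the query.

    The instruction wording and the "Note:/Medications:" layout are illustrative
    assumptions, not a format required by any particular model.
    """
    instruction = "List the medications mentioned in each clinical note."
    return (
        f"{instruction}\n\n"
        f"Note: {example_note}\n"
        f"Medications: {example_answer}\n\n"
        f"Note: {new_note}\n"
        f"Medications:"
    )


prompt = build_one_shot_prompt(
    example_note="Started metformin 500 mg BID; continue lisinopril.",
    example_answer="metformin; lisinopril",
    new_note="Patient reports taking atorvastatin nightly.",
)
print(prompt)
# The prompt would then be sent to a large language model, which is expected
# to complete the final "Medications:" line; that call is not shown here.
```

The single worked example shows the model both the task and the expected output format, which is what lets one example stand in for a labeled training set.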

2. Modular, Reusable, and Robust AI

Andrej Karpathy’s idea of Software 2.0 anticipated shifting parts of software development away from writing and maintaining code and toward using AI models.

In this paradigm, practitioners codify desired behaviors by designing datasets and then training commodity AI models to replace critical layers of a software stack.

We have seen the benefits of Software 2.0 in the form of model hubs from companies like Hugging Face, which have made sharing, documenting, and extending pretrained models easier than ever.

Since foundation models are expensive to train but easily adaptable to new tasks, sharing models empowers a community of developers to build upon existing work and accelerate innovation.

Using shared foundation models also allows the community to better assess those models’ limitations, biases, and other flaws.

We are already seeing this approach being explored in medical settings, with efforts in NLP such as GatorTron, UCSF BERT, and others.

Medical foundation models also provide benefits beyond improved classification performance and sample efficiency.

In our group’s research using CLMBR, a foundation model for structured EHR data, we found that adapted models demonstrate improved temporal robustness for tasks such as ICU admissions, where performance decays less over time.

Making Multimodality the New Normal

Today’s medical AI models often make use of a single input modality, such as medical images, clinical notes, or structured data like ICD codes.

However, health records are inherently multimodal, containing a mix of providers’ notes, billing codes, laboratory data, images, vital signs, and, increasingly, genomic sequencing, wearables data, and more.

The multimodality of EHR data is only going to grow, having jumped twentyfold from 2008 to 2015.

No modality in isolation provides a complete picture of a person’s health state.

Analyzing pixel features of medical images frequently requires consulting structured records to interpret findings, so why should AI models be limited to a single modality?

Foundation models can combine multiple modalities during training. Many of the amazing, sci-fi abilities of models like Stable Diffusion are the product of learning from both language and images.

The ability to represent multiple modalities from medical data not only leads to better representations of patient state for use in downstream applications, but also opens up more paths for interacting with AI.

Clinicians can query databases of medical imaging using natural language descriptions of abnormalities or use descriptions to generate synthetic medical images with counterfactual pathologies.
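
Natural-language search over images typically relies on an encoder trained so that images and text land in a shared embedding space (as in contrastive image-text models); retrieval then reduces to ranking stored image embeddings by their similarity to the embedded query. The minimal Python sketch below assumes such embeddings already exist. The vectors, image IDs, and query are invented for illustration, and real embeddings have hundreds of dimensions.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search_images(
    query_vec: Sequence[float], index: Dict[str, List[float]], top_k: int = 2
) -> List[str]:
    """Rank stored image embeddings by similarity to a text-query embedding."""
    ranked = sorted(index, key=lambda name: cosine(query_vec, index[name]), reverse=True)
    return ranked[:top_k]


# Hypothetical embeddings, assumed to come from a multimodal encoder that maps
# images and text into the same vector space.
image_index = {
    "cxr_001": [0.9, 0.1, 0.0],
    "cxr_002": [0.1, 0.9, 0.1],
    "cxr_003": [0.8, 0.2, 0.1],
}
query = [1.0, 0.0, 0.0]  # e.g. embedding of "right lower lobe consolidation"
print(search_images(query, image_index))  # ['cxr_001', 'cxr_003']
```

A linear scan is enough for a sketch; a large imaging archive would more likely use an approximate nearest-neighbor index over the same similarity measure.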

New Interfaces for Human-AI Collaboration

Current healthcare AI models typically generate output that is presented to clinicians who have limited options to interrogate and refine a model’s output.

Foundation models present new opportunities for interacting with AI models, including natural language interfaces and the ability to engage in a dialogue.

By building collections of natural language instructions, we can fine-tune models via instruction tuning to improve generalization.

In medicine, we don’t yet have a good mechanism to systematically collect the types of questions clinicians generate while interacting with EHRs.

However, adopting foundation models in medicine will put these types of human-AI collaborations front and center.
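
Instruction tuning starts from a collection of records pairing an instruction (optionally with some input context) with a desired response, serialized into text on which the model is fine-tuned. As a minimal Python sketch of what a collection of clinician questions might look like, assuming hypothetical field names, a hypothetical serialization template, and invented chart snippets:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class InstructionExample:
    """One clinician question paired with EHR context and a reference answer."""

    instruction: str
    context: str
    response: str


def to_training_text(ex: InstructionExample) -> str:
    """Serialize an example into a single training string.

    The "### ..." section markers are one common convention, assumed here;
    any consistent template would serve the same purpose.
    """
    return (
        f"### Instruction:\n{ex.instruction}\n\n"
        f"### Input:\n{ex.context}\n\n"
        f"### Response:\n{ex.response}"
    )


# Hypothetical examples of questions a clinician might ask while reviewing a chart.
dataset: List[InstructionExample] = [
    InstructionExample(
        instruction="Does this patient have a documented penicillin allergy? Answer yes or no.",
        context="Allergies: penicillin (rash). Home meds: amlodipine.",
        response="Yes",
    ),
    InstructionExample(
        instruction="What was the most recent creatinine value?",
        context="Labs 03/01: creatinine 1.1 mg/dL. Labs 03/04: creatinine 1.4 mg/dL.",
        response="1.4 mg/dL (03/04)",
    ),
]
for ex in dataset:
    print(to_training_text(ex), end="\n\n")
```

Storing the questions as structured records, rather than as pre-formatted text, lets the same collection be re-serialized if a different template is needed later.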

Training on high-quality instruction datasets seems to be the secret sauce behind many of the surprising abilities of ChatGPT and smaller, open language models.

In fact, OpenAI has posted job listings for “Expert AI Teachers” who can help teach specialized domain knowledge to the next generation of GPT models.

Easing the Cost of Developing, Deploying, and Maintaining AI in Hospitals

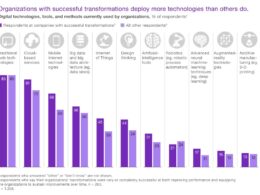

The current paradigm of doing “AI in healthcare,” where developing, deploying, and maintaining a classifier or predictive model for a single clinical task can cost upward of $200,000, is unsustainable.

Commercial solutions also fall short because vendors typically charge health systems on either a per-model or a per-prediction basis.

It is clear that we need a better paradigm: instead of a “one model, one data pull, one project per use case” mindset, we should focus on creating models that are cheaper to build, have reusable parts, can handle multiple data types, and are resilient to changes in the underlying data.

Analogous to how the healthcare sector has focused on standardizing patient-level data access via FHIR and other EHR APIs, making foundation models available via an API to support the development of subsequent models for specific tasks can significantly alter the cost structure of training models in healthcare.

Specifically, we need a way to amortize (and hence reduce) the cost of prototyping, validating, and deploying a model for any given task (such as identifying patients with undiagnosed peripheral artery disease) across many other tasks in order to make such development viable.

Foundation models, shared widely via APIs, have the potential to provide that ability as well as the flexibility to examine emergent behaviors that have driven innovation in other domains.
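
A simplified cost model, offered here as an illustration rather than taken from the authors, shows why amortization matters. Assume a one-off cost C_pre to build and validate a shared foundation model, a per-task adaptation cost c_adapt, a per-task cost c_scratch under the one-model-per-project approach, and N tasks built on the shared model. All of these symbols are hypothetical:

```latex
\[
\bar{C}(N) = \frac{C_{\text{pre}}}{N} + c_{\text{adapt}},
\qquad
\bar{C}(N) < c_{\text{scratch}}
\iff
N > \frac{C_{\text{pre}}}{c_{\text{scratch}} - c_{\text{adapt}}}
\quad (\text{assuming } c_{\text{adapt}} < c_{\text{scratch}})
\]
```

The more tasks share the same foundation model, the smaller each task's share of the up-front cost becomes, so beyond the break-even number of tasks the shared approach is cheaper per task.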

By lowering the time and energy required to train models, we can focus on ensuring that their use leads to a fair allocation of resources, with the potential to meaningfully improve clinical care and efficiency and to create a new, supercharged framework for AI in healthcare.

Adopting the use of foundation models is a promising path to that end vision.

Originally published at https://hai.stanford.edu