MIT Sloan Management Review

Elizabeth M. Renieris, David Kiron, and Steven D. Mills

July 12, 2022

Executive Summary by:

Joaquim Cardoso MSc.

health transformation . institute

ai health care unit

July 29, 2022

Key messages:

- Panelists overwhelmingly assert that RAI enables AI-related growth and innovation, or at least the kind of innovation they want, although they give different reasons why.

- For organizations seeking to leverage RAI to promote AI-related innovation, the authors recommend the following: (1) Don’t allow the hype to become a barrier; (2) Focus on the upside of RAI; (3) Not all innovation is created equal.

What is the question?

- RAI is often portrayed as creating friction that slows the pace of product development and stifles innovation.

- The authors wanted to understand whether the leaders charged with actually developing AI solutions have experienced this phenomenon.

What are the findings?

- The panel of AI experts overwhelmingly rejected the idea that RAI constrains innovation: 86% (19 of 22) disagreed or strongly disagreed. Instead, they asserted that RAI enables AI-related growth and innovation, or at least the kind of innovation they want, although they give different reasons why.

- The authors also conducted a global survey of more than 1,000 executives on the topic of RAI and found analogous support for the view that RAI efforts promote better business outcomes, including accelerated innovation.

- Nearly 40% of managers in companies with at least $100 million in annual revenues reported that they are already experiencing accelerated innovation as a result of their RAI efforts, while 64% of managers in organizations with mature RAI programs (RAI Leaders) described a similar boost.

- More than 40% of survey respondents observed improved products and services as a result of their RAI efforts; that number jumps to 70% among RAI Leaders.

Key points:

- 1. AI May Be Neutral, but RAI Is Not

- 2. RAI Puts AI in Context

- 3. RAI Improves Rather Than Constrains Innovation

Recommendations

In sum, for organizations seeking to leverage RAI to promote AI-related innovation, we recommend the following:

- 1. Don’t allow the hype to become a barrier

- 2. Focus on the upside of RAI

- 3. Not all innovation is created equal

ORIGINAL PUBLICATION (full version)

MIT Sloan Management Review and Boston Consulting Group (BCG) have assembled an international panel of AI experts that includes academics and practitioners to help us gain insights into how responsible artificial intelligence (RAI) is being implemented in organizations worldwide.

RAI is often portrayed as creating friction that slows the pace of product development and stifles innovation.

We wanted to understand whether the leaders charged with actually developing AI solutions have experienced this phenomenon.

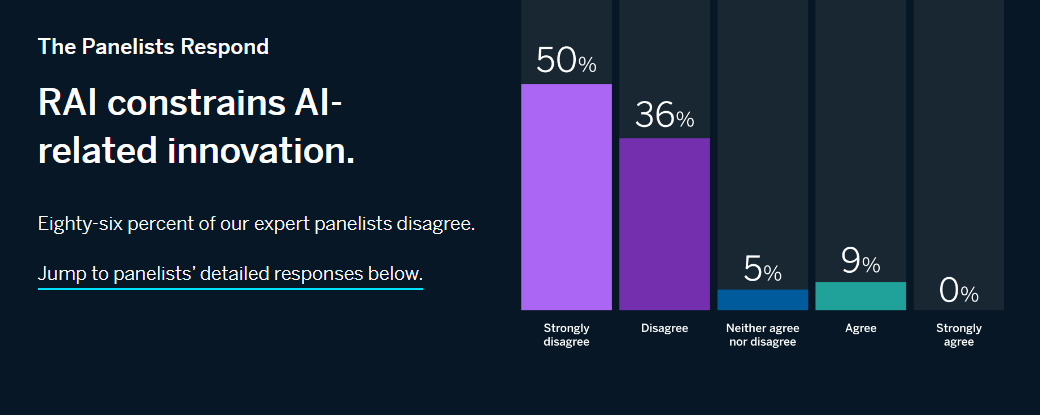

This month, we asked our panelists to react to the following provocation: RAI constrains AI-related innovation.

The results were nearly unanimous, with 86% (19 out of 22) of our panelists disagreeing or strongly disagreeing with the statement.

Indeed, our experts overwhelmingly asserted that RAI enables AI-related growth and innovation, or at least the kind of innovation they want, although they give different reasons why.

RAI Leaders are the most mature of three maturity clusters identified by analyzing the survey results.

An unsupervised machine learning algorithm (k-means clustering) was used to identify naturally occurring clusters based on the scale and scope of each organization’s RAI implementation. Scale is defined as the degree to which RAI efforts are deployed across the enterprise (e.g., ad hoc, partial, enterprisewide). Scope includes the elements that are part of the RAI program (e.g., principles, policies, governance) and the dimensions covered by the RAI program (e.g., fairness, safety, environmental impact). Leaders were the most mature in terms of both scale and scope.
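The clustering step described above can be illustrated with a short sketch. It is not the authors' analysis: it assumes, hypothetically, that each organization has already been reduced to two numeric scores (scale and scope, each on an invented 0 to 1 scale), runs plain k-means (Lloyd's algorithm) with k = 3, and then names the cluster with the highest combined centroid "Leaders". The sample data and the names of the two lower clusters are made up for illustration.

```python
from math import dist

Point = tuple[float, float]  # (scale_score, scope_score): hypothetical features


def kmeans(points: list[Point], k: int, iterations: int = 100) -> tuple[list[Point], list[int]]:
    """Plain k-means (Lloyd's algorithm): alternate assignment and update steps."""
    # Deterministic initialization: pick k points spread evenly across the points
    # sorted by combined score (real analyses often use k-means++ instead).
    ordered = sorted(points, key=lambda p: p[0] + p[1])
    step = (len(ordered) - 1) / (k - 1) if k > 1 else 0
    centroids = [ordered[round(i * step)] for i in range(k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each organization joins its nearest centroid (Euclidean distance).
        labels = [min(range(k), key=lambda c: dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append((sum(m[0] for m in members) / len(members),
                                      sum(m[1] for m in members) / len(members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid unchanged
        if new_centroids == centroids:
            break  # centroids stopped moving, so the algorithm has converged
        centroids = new_centroids
    return centroids, labels


def maturity_names(centroids: list[Point]) -> dict[int, str]:
    """Rank clusters by combined scale + scope; the top cluster is labeled "Leaders"."""
    names = ["Low maturity", "Mid maturity", "Leaders"]  # hypothetical names for k = 3
    order = sorted(range(len(centroids)), key=lambda c: sum(centroids[c]))
    return {c: names[rank] for rank, c in enumerate(order)}


# Invented sample data: (scale, scope) per organization.
orgs = [
    (0.10, 0.15), (0.20, 0.10), (0.15, 0.20),  # ad hoc, narrow programs
    (0.50, 0.45), (0.55, 0.50), (0.45, 0.55),  # partial deployment
    (0.90, 0.85), (0.85, 0.95), (0.95, 0.90),  # enterprisewide, broad scope
]
centroids, labels = kmeans(orgs, k=3)
names = maturity_names(centroids)
print([names[lab] for lab in labels])
```

Because k-means only groups points, naming one cluster "Leaders" is a separate interpretive step; this sketch simply ranks centroids by combined scale and scope, in line with the definition of Leaders as most mature on both.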

We also conducted a global survey of more than 1,000 executives on the topic of RAI and found analogous support for the view that RAI efforts promote better business outcomes, including accelerated innovation.

- Nearly 40% of managers in companies with at least $100 million in annual revenues reported that they are already experiencing accelerated innovation as a result of their RAI efforts, while 64% of managers in organizations with mature RAI programs (RAI Leaders) described a similar boost.

- More than 40% of survey respondents observed improved products and services as a result of their RAI efforts; that number jumps to 70% among RAI Leaders.

Below, we share insights from our panelists.

We also draw on our own observations and experience working on RAI initiatives to offer recommendations for organizations seeking to leverage RAI in support of AI-related growth and innovation.

Key points:

- 1. AI May Be Neutral, but RAI Is Not

- 2. RAI Puts AI in Context

- 3. RAI Improves Rather Than Constrains Innovation

1. AI May Be Neutral, but RAI Is Not

Industry and policy experts frequently portray technology ethics and regulations as hurdles to innovation. For example, those who perceive an AI arms race between the U.S. and China often cite AI ethics as an obstacle to novel deployments of the technology.[1] Our panelists reject this view and push back on what innovation means in the context of RAI. They distinguish between innovations in AI technology itself and sociotechnical innovations that enable AI to empower humans.[2] In their view, RAI enables beneficial societal progress, which we might consider true innovation, and constrains the potentially harmful impacts of advancements in AI. As Richard Benjamins, chief AI and data strategist at Telefónica, contends, “Artificial intelligence, by itself, is neither responsible nor irresponsible. It’s the application of AI to specific use cases that makes it responsible or not.” That is where RAI comes into play. Essentially, if AI innovation asks what is possible, RAI innovation asks what should be.

Strongly disagree

“The practice of responsible AI is not at odds with innovation at all. The two can and must coexist. Innovation is not only about unleashing imagination and achieving what once wasn’t possible; it’s about doing so with ethics, values, and respect for rights. When innovation and responsibility combine, we see the greatest potential of technology and human (including emotional) intelligence, and the two — together — will achieve what cannot succeed alone.”

Katia Walsh, Levi Strauss & Co.

Our panelists acknowledge that, as with any technology, AI holds the potential for both positive and negative social outcomes.

For many of our experts, RAI is about maximizing the upside of AI applications while minimizing the downside. As Paula Goldman, chief ethical and humane use officer at Salesforce, observes, “AI has the power to transform the way we live and work in profound ways, but we’ve also seen it exacerbate social inequalities.” She adds, “Investing in principles and guardrails can unleash the positive potential of AI by mitigating harms and building trust.” For Benjamins, “Responsible AI is a mindset and methodology that — by design — helps focus on innovations that maximize positive impacts and minimize negative impacts.” Riyanka Roy Choudhury, a fellow at Stanford University, agrees, arguing that “responsible AI creates a guideline to mitigate AI risks” and that “when adopted universally, responsible AI can open up new opportunities leading to fairer and more sustainable AI.” Such guardrails can actually speed up product teams by giving them a safe space to experiment and innovate with less fear of unintended negative societal consequences.

2. RAI Puts AI in Context

To achieve a net positive impact, we must distinguish between AI’s evolution as a technology and RAI-enabled progress as a social phenomenon. While AI advances are mathematical, computational, or technical, RAI considers how their application affects humans at the individual and societal levels. This is perhaps the more important dimension to consider, since it is often the pairing of humans and AI that drives the greatest value. For example, David Hardoon, chief data and AI officer at UnionBank of the Philippines, frames the distinction in a question: “Does RAI constrain AI-related innovation? In the context of AI’s mathematical innovation, yes, to some extent. In the context of the application of AI — it must.” Similarly, Oarabile Mudongo, a researcher at the Center for AI and Digital Policy in Johannesburg, urges us to examine “the sociohistorical context of technological innovation … [as] AI developments will cause far-reaching disruptions in society” and cannot be assessed in isolation.

While there is no question that AI will change society, it’s the constraints we impose that will determine how. As Belona Sonna, a Ph.D. candidate in the Humanising Machine Intelligence program at the Australian National University, observes, “RAI is the way to couple the computational power of AI with the social dimension needed to improve people’s lives.” She challenges us to ask ourselves, “What really matters? Demonstrating the computational power of models, or putting that computational power to the benefit of humans?” Our panelists overwhelmingly agree that the kind of AI-related innovation that matters is the kind that benefits people, businesses, and society as a whole, and constraints are the way to achieve those benefits.

Strongly disagree

“We’re building artificial intelligence technology for the benefit of mankind, so AI constraints need to be part of the design. RAI allows us to pose more interesting research questions and learn how to collect data correctly while also ensuring that we are not marginalizing groups and communities. It doesn’t limit innovation; rather, it creates more, which requires additional expertise.”

Jeremy King, Pinterest

3. RAI Improves Rather Than Constrains Innovation

Carnegie Mellon associate professor of business ethics Tae Wan Kim observes, “Responsible AI constraints are currently major forces of AI-related innovation.” Our experts point to ample evidence of the ways RAI promotes innovations that benefit individuals, business outcomes, and society at large. Philip Dawson, a fellow at the Schwartz Reisman Institute for Technology & Society, argues, “RAI is accelerating time to market for organizations seeking to realize the benefits of AI for their businesses, and as a result, it has led to the innovation of an entire new industry,” citing “the emergence of an increasingly large and expanding market of RAI SaaS providers.” Linda Leopold, head of responsible AI and data at H&M Group, agrees that businesses benefit from RAI, noting that “ethically and responsibly designed AI solutions are also better AI solutions in the sense that they are more reliable, transparent, and created with the end user’s best interests in mind.” Moreover, she argues that for businesses, RAI “creates a competitive advantage, as it reduces risk and increases trust.”

Alongside promoting beneficial outcomes from AI applications, RAI helps prevent or mitigate potential harms. Tshilidzi Marwala, vice chancellor of the University of Johannesburg, argues, “A focus on regulation, ethics, and cultural aspects of AI … does not hamper innovation holistically but addresses concerns around harmful innovation.” While this might mean slowing or even forgoing certain applications of AI, that is actually a desirable outcome from an RAI standpoint. As Simon Chesterman, senior director of AI governance at AI Singapore, puts it, “The purpose of RAI is to reap the benefits of AI while minimizing or mitigating the risk [by] designing, developing, and deploying AI in a manner that helps rather than harms humans.” Similarly, Hardoon observes, “RAI constrains AI-related innovation as much as traffic regulations constrain the maximum speed at which a car can be driven on roads.”

Recommendations

RAI enables AI-related innovation because it addresses the real-world application and impact of AI, and that’s the only context in which innovation really matters. As Suhas Manangi, product head of Airbnb’s AI/ML Defense Platform team, sums it up, “Can we build for an equitable world by building an equitable AI? And isn’t that the innovation, and the only innovation, we want? If yes, then responsible AI helps in that innovation but does not constrain it.”

In sum, for organizations seeking to leverage RAI to promote AI-related innovation, we recommend the following:

- 1. Don’t allow the hype to become a barrier

- 2. Focus on the upside of RAI

- 3. Not all innovation is created equal

1. Don’t allow the hype to become a barrier

RAI does not constrain AI-related innovation; there is ample evidence that it does the opposite. Don’t let claims to the contrary become an excuse to delay RAI initiatives within your organization.

2. Focus on the upside of RAI

When scaling an RAI program, stay focused on the big picture: RAI enables clear business benefits, builds trust with customers, and promotes beneficial societal outcomes.

3. Not all innovation is created equal

Remember that not everything that can be done should be done, and that holds true for AI as well. Consider the legacy you want to leave behind, and use RAI to shape that legacy toward the good of humanity.

About the Panel

MIT Sloan Management Review and Boston Consulting Group assembled an international panel of more than 20 industry practitioners, academics, researchers, and policy makers to share their views on core issues pertaining to responsible AI.

Over the course of five months, we will ask the panelists to answer a question about responsible AI and briefly explain their response.

Readers can see all panelist responses and comments in the panel at the bottom of each article and continue the discussion in AI for Leaders, a LinkedIn community designed to foster conversation among like-minded technology experts and leaders.

About the Authors

Elizabeth M. Renieris is guest editor for the MIT Sloan Management Review Responsible AI Big Idea program and founding director of the Notre Dame-IBM Technology Ethics Lab.

David Kiron is editorial director of MIT Sloan Management Review.

Steven D. Mills is a managing director, partner, and chief AI ethics officer at Boston Consulting Group.

References

1. See, for example, A. Smith, “How China Surpassed the U.S. in the Race for AI Supremacy,” USC Global Policy Institute, Jan. 6, 2022, https://uscgpi.com.

2. See, for example, N. Kokciyan, B. Srivastava, M.N. Huhns, et al., “Sociotechnical Perspectives on AI Ethics and Accountability,” IEEE Internet Computing 25, no. 6 (November-December 2021): 5–6.

Originally published at https://sloanreview.mit.edu on July 12, 2022.