healthtransformation.foundation

Joaquim Cardoso MSc

March 1, 2024

This summary is based on the article “Welcome to the Era of BadGPTs,” written by Belle Lin and published by The Wall Street Journal on February 28, 2024.

What is the message?

The emergence of malicious AI chatbots, like “BadGPT” and “FraudGPT,” on the dark web poses a significant threat to businesses, leading to an increase in AI-generated email fraud and deepfake attacks.

Built on the same technology as ChatGPT, these chatbots help cybercriminals write more convincing phishing emails and carry out sophisticated scams, alarming cybersecurity leaders and experts.

ONE PAGE SUMMARY

What are the key points?

Rise of BadGPTs: Cybercriminals are leveraging manipulated versions of AI chatbots to craft more convincing phishing emails and deepfake content, exploiting vulnerabilities in AI models for malicious purposes.

Impact on Businesses: Incidents such as the $25.5 million loss a multinational company suffered in an AI-generated deepfake conference call scam highlight the financial risks of these advanced threats. Businesses, and public companies in particular, are increasingly exposed to contextualized spear-phishing attacks.

Utilization of AI Models: Dark web hacking tools often use open-source AI models or “jailbroken” versions of models from reputable vendors such as OpenAI and Anthropic, bypassing their built-in safety controls through techniques like prompt injection.

Challenges in Detection: AI-generated malware and phishing emails are hard to detect because they can be crafted to evade traditional detection methods. Attackers are even using AI-powered cybersecurity defense software to train their models to produce stealthier malicious content.

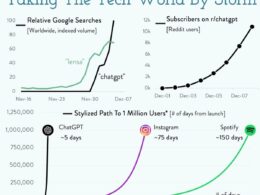

Escalation of Attacks: Phishing emails surged by 1,265% within a year of ChatGPT’s release, averaging 31,000 attacks per day, a sign of how quickly the threat facing organizations worldwide is escalating.

What are the key statistics?

Phishing emails grew by 1,265% within the first year of ChatGPT’s release, with an average of 31,000 attacks daily.

Businesses have witnessed an increase in AI-generated email attacks, particularly spear-phishing incidents targeting public companies.

What are the key examples?

An employee at the Hong Kong office of a multinational company was tricked into transferring $25.5 million during an AI-generated deepfake conference call.

Security researchers have identified more than 200 large language model (LLM) hacking services on the dark web since ChatGPT’s public release.

Conclusion

The proliferation of BadGPTs and similar malicious AI chatbots underscores the urgent need for enhanced cybersecurity measures and proactive defense strategies within businesses.

As cyber threats evolve, organizations must remain vigilant and adopt advanced AI-driven detection technologies to mitigate the risks posed by AI-enabled cybercrime.
