LinkedIn

Sam Ransbotham

Professor at Boston College;

AI Editor at MIT Sloan Management Review;

Host of “Me, Myself, and AI” podcast

March 17, 2022

elementai

Key messages

Summarized by Joaquim Cardoso MSc.

Servant Leader of “The Health Strategy Institute”

@ “The AI Powered Health Care Unit”

March 17, 2022

AI applications involve many different levels — and types — of risk. How much risk should managers take?

- Too much risk and projects may fail.

- But too little risk and you may miss out on something big.

Thinking systematically about risk helps with AI projects

- Stanley Black & Decker categorizes its innovation projects into six levels, ranging from incremental improvements to radical innovations.

- The OECD.AI Network of Experts recently released their OECD Framework for Classifying AI Systems to help policy-makers, regulators, legislators, and others assess the opportunities and risks inherent in different types of AI systems.

Pioneers, the organizations more advanced in using AI, embraced projects with greater risks.

- Importantly, these projects with greater risk yielded higher returns.

- Only 23% of organizations that invest primarily in low-risk projects reported value from AI projects.

Organizations may be able to reduce risk as well.

For example, “Four Steps To Removing Risk In AI Applications” recommends:

- identifying the biggest business model assumption,

- talking to customers or users,

- determining the biggest technology assumptions, and then

- outsourcing the development of the AI application prototypes.

What is your model for managing risks in AI projects?

ORIGINAL PUBLICATION (full version)

How Much Risk Makes Sense in AI Projects?

AI applications involve many different levels — and types — of risk. How much risk should managers take?

- Too much risk and projects may fail.

- But too little risk and you may miss out on something big.

In our recent “Me, Myself, and AI” podcast episode, Shervin and I talked with Mark Maybury, the first chief technology officer at Stanley Black & Decker.

Mark described how the company categorizes its innovation projects into six levels, ranging from incremental improvements to radical innovations.

This explicit categorization helps Stanley Black & Decker balance its overall product development portfolio.

- For example, Mark described robotic process automation as an off-the-shelf, low-risk, proven technology that the company uses to automate elements of finance and HR.

- But a new product like Pria, an automated medication dispenser developed with Pillo Health, carries much greater risk.

The six levels help, but Stanley Black & Decker goes further and also characterizes the type of risk each project carries.

For example, a project may carry technical risk, which the company assesses using technology readiness levels, an approach stemming from Mark’s background in the Air Force.

But for a new product like Pria, they expect user adoption risk and safety risk.
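The two-part categorization described above (an innovation level plus one or more risk types) can be sketched as a small data structure. This is only an illustrative sketch, not Stanley Black & Decker's actual system: the names `RiskType`, `Project`, and `portfolio_summary` are hypothetical, the 1-to-6 numbering is an assumption, and the levels assigned to the two example projects are placeholders, since the article does not state them.

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import Enum


class RiskType(Enum):
    # Only the risk types named in the article; a real taxonomy may be longer.
    TECHNICAL = "technical"
    USER_ADOPTION = "user adoption"
    SAFETY = "safety"


@dataclass(frozen=True)
class Project:
    name: str
    # Assumed numbering: 1 = incremental improvement, 6 = radical innovation.
    level: int
    risks: frozenset = field(default_factory=frozenset)

    def __post_init__(self):
        if not 1 <= self.level <= 6:
            raise ValueError(f"level must be between 1 and 6, got {self.level}")


def portfolio_summary(projects):
    """Count projects per innovation level and per risk type."""
    by_level = Counter(p.level for p in projects)
    by_risk = Counter(r for p in projects for r in p.risks)
    return by_level, by_risk


# Placeholder levels for illustration; the article gives no level numbers.
portfolio = [
    Project("Robotic process automation", level=1),
    Project(
        "Pria medication dispenser",
        level=5,
        risks=frozenset({RiskType.USER_ADOPTION, RiskType.SAFETY}),
    ),
]

by_level, by_risk = portfolio_summary(portfolio)
```

Counting projects by level and by risk type makes it easy to see whether a portfolio leans too heavily toward low-risk work or concentrates on a single kind of risk.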

Thinking systematically about risk helps with AI projects.

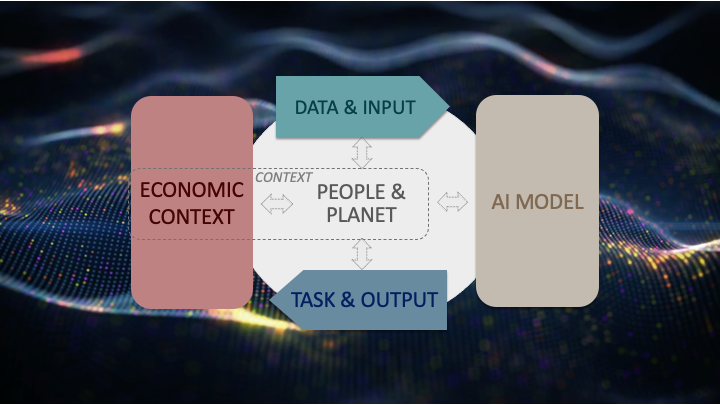

The OECD.AI Network of Experts recently released their OECD Framework for Classifying AI Systems to help policy-makers, regulators, legislators, and others assess the opportunities and risks inherent in different types of AI systems.

The OECD Framework for Classifying AI Systems

The framework is intended to help users identify specific risks (such as bias, explainability, and robustness) that may be more salient in AI projects than in other projects.

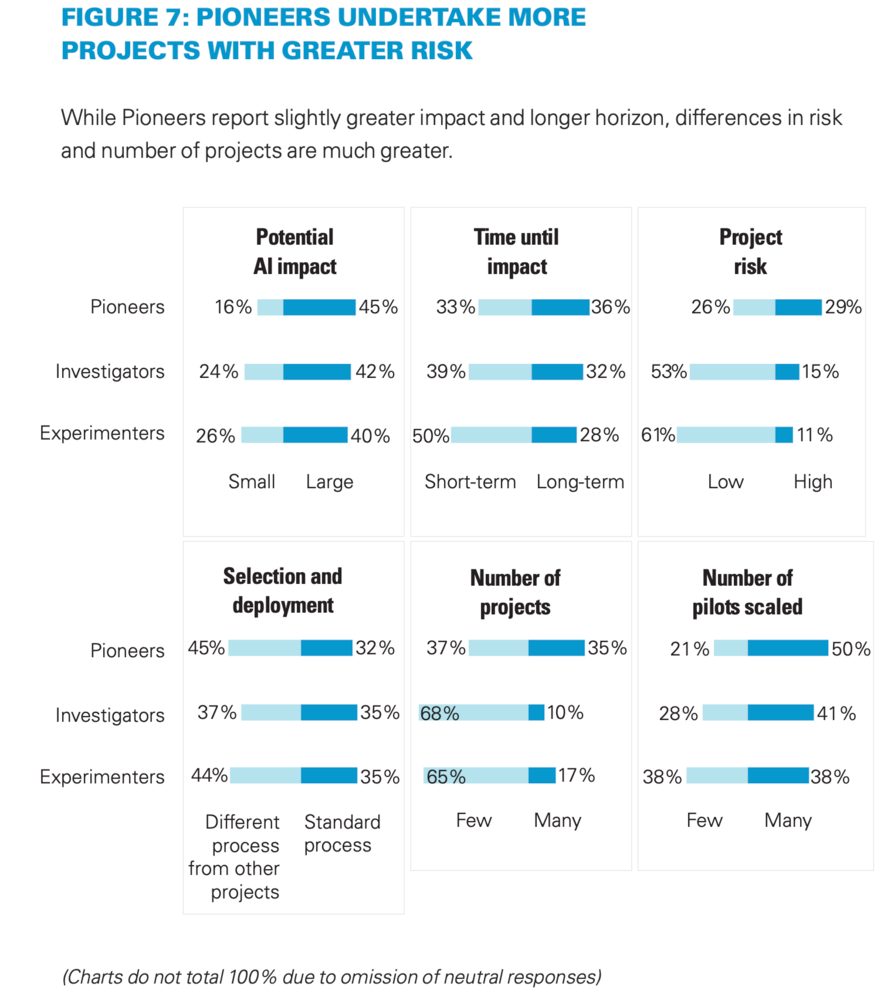

Our 2019 AI in Business research report found that Pioneers, organizations more advanced in using AI, embraced projects with greater risks.

Importantly, these projects with greater risk yielded higher returns.

Only 23% of organizations that invest primarily in low-risk projects reported value from AI projects.

Despite embracing projects with greater risk, the Pioneer group was also able, on average, to scale more projects than other firms.

Organizations may be able to reduce risk as well.

For example, “Four Steps To Removing Risk In AI Applications” recommends:

- identifying the biggest business model assumption,

- talking to customers or users,

- determining the biggest technology assumptions, and then

- outsourcing the development of the AI application prototypes.

Levels like those Stanley Black & Decker uses, or a framework like the OECD’s, help quantify and understand the risks that remain.

Originally published at https://www.linkedin.com.

Names mentioned

Mark Maybury, the first chief technology officer at Stanley Black & Decker.