Site editor:

Joaquim Cardoso MSc.

The Health Strategist — Journal

September 21, 2022

Key messages:

- Treating responsible AI strictly as a way to avoid AI failures is incomplete.

- Responsible AI leaders take a broader, more strategic approach that generates value for the organization and the world around them.

- By embracing a strategic approach to responsible AI, companies can more easily and less perilously scale their AI efforts, realizing important benefits and doing bigger, better things for their business and the world around it.

- In short, RAI is not just about being more responsible for a special technology.

- If you want to be an RAI Leader, focus on being a responsible company.

ORIGINAL PUBLICATION (excerpt)

SEPTEMBER 20, 2022

By Steven Mills, Sean Singer, Abhishek Gupta, Franz Gravenhorst, François Candelon, and Tom Porter

A new study by BCG and MIT Sloan Management Review finds that treating responsible AI strictly as a way to avoid AI failures is incomplete.

Responsible AI leaders take a broader, more strategic approach that generates value for the organization and the world around them.

Artificial intelligence has made great strides in recent years, but sometimes it also makes headlines.

Biased hiring, unfair lending practices, incorrect medical diagnoses: AI failures happen, and in some instances they’ve had a significant, even life-altering impact on individuals and communities.

Little wonder, then, that businesses see responsible AI (RAI) as a way to mitigate the risks of AI.

But is this the best or most beneficial way to view RAI?

Our global survey of more than 1,000 executives reveals that it’s not.

As AI becomes more powerful and more prevalent, companies must ensure that they use the technology appropriately.

But those that lead in responsible AI, the survey finds, look beyond risk mitigation.

They treat RAI as an enabler that can bring tangible benefits to the business, support broader long-term goals such as corporate social responsibility (CSR), and help derive even more value from AI investments.

Today, leaders in responsible AI are few and far between.

Indeed, although most companies recognize the importance of RAI, the survey finds a large gap between aspirations and action.

An overwhelming majority of respondents (84%) say that RAI should be a top management priority. Yet just 16% of companies have fully mature RAI programs.

These responsible AI leaders see clear business benefits from RAI, in addition to the societal value of minimizing risk for individuals and communities.

Their experiences provide key insights and a high-level roadmap for the many organizations that are still just dipping their toes into RAI, or standing to the side of the pool.

Responsible AI Isn’t Just About Reducing Risk

Risk mitigation has long been RAI’s raison d’être.

The thinking: a technology like AI, with so much potential influence on operations, customer interactions, and product functionality, requires special precautions.

And there’s plenty to mitigate.

Nearly a quarter of participants in the survey report AI failures, ranging from technical glitches to bias in decision making and actions that raise privacy or safety concerns.

Given that so many organizations have yet to adopt RAI (and may not even know whether they’ve had an AI failure), the actual number of such incidents is likely even higher.

But responsible AI leaders have demonstrated that they can reduce risk and gain other important benefits by approaching RAI in a more strategic way.

Leaders share certain characteristics. They:

- prioritize RAI;

- include a wide array of stakeholders (within and outside the organization) in its implementation; and

- make a firm material and philosophical commitment to RAI.

Moreover, responsible AI is itself a source of business value for these organizations.

What kind of business value?

- PRODUCTS AND SERVICES: Half of responsible AI leaders report having developed better products and services as a result of their RAI efforts (only 19% of nonleaders say the same).

- BRAND DIFFERENTIATION: Nearly as many (48%) say that RAI has enhanced brand differentiation (compared to 14% of nonleaders).

- INNOVATION ACCELERATION: And 43% of leaders cite accelerated innovation (versus just 17% of nonleaders).

- BUSINESS BENEFITS IN GENERAL: Overall, leaders are twice as likely as nonleaders to realize business benefits from their responsible AI efforts.

RAI Comes First

But the survey also makes clear that timing (specifically, the sequence in which companies increase their RAI maturity and their AI maturity) counts, too.

For many companies, AI maturity comes first:

- 42% of respondents say that AI is a top strategic priority,

- but among this group, only 19% say that their organization has a fully implemented responsible AI program.

This, it turns out, is the wrong order.

As AI maturity grows, a company will deploy more AI applications, and those applications will be more complex, increasing the risk that something may go wrong.

Companies that prioritize scaling their RAI program over scaling their AI capabilities experience nearly 30% fewer AI failures.

And the failures they do have tend to reveal themselves sooner and have significantly less impact on the business and the communities it serves.

Focusing first on responsible AI enables companies to create and leverage a powerful synergy between AI and RAI.

Connecting RAI and CSR

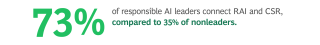

Most companies in the vanguard of RAI view it as part of their broader CSR efforts: 73% connect RAI and CSR, compared to 35% of nonleaders.

For responsible AI leaders, the alignment is natural.

Both efforts have many goals in common (including transparency, fairness, and bias prevention), so they can support and empower each other.

Indeed, the survey found that as their responsible AI maturity increases, organizations become more interested in aligning their AI with their values and take a broad, societal view of the impacts of AI on stakeholders.

Once again, responsible AI is not just about reducing risk. It’s also about creating value, for company and community alike.

Now’s the Time to Get Started

Responsible AI leaders don’t limit themselves to thinking about risks and regulations.

They also consider how RAI can advance their business goals and principles and how it can affect a broad array of stakeholders.

Crucially, they’ve already started charting a path that others can follow.

Taking that path is important.

Many companies are investing heavily in AI without committing corresponding resources or efforts to RAI. That leaves risk on the horizon and value on the table.

By embracing a strategic approach to responsible AI, companies can more easily and less perilously scale their AI efforts, realizing important benefits and doing bigger, better things for their business and the world around it.

Originally published at https://www.bcg.com on September 15, 2022.

EXCERPT 1: Recommendations for Aspiring RAI Leaders

Clearly, there are compelling reasons for organizations to transform their own RAI aspirations into reality, including general corporate responsibility, the promise of a range of business benefits, and potentially better preparedness for new regulatory frameworks. How, then, should businesses begin or accelerate this process? These recommendations, inspired by lessons from current RAI leaders, will help organizations scale or mature their own RAI programs.

1. Ditch the “check the box” mindset.

In the face of impending AI regulations and increasing AI lapses, RAI may help organizations feel more prepared. But a mature RAI program is not driven solely by regulatory compliance or risk reduction. Consider how RAI aligns with or helps to express your organizational culture, values, and broader CSR efforts.

2. Zoom out.

Take a more expansive view of your internal and external stakeholders when it comes to your own use or adoption of AI, as well as your AI offerings, including by assessing the impact of your business on society as a whole. Consider connecting your RAI program with your CSR efforts if those are well established within the organization. There are often natural overlaps and instrumental reasons for linking the two.

3. Start early.

Launch your RAI efforts as soon as possible to address common hurdles, including a lack of relevant expertise or training. It can take time — our survey shows three years on average — for organizations to begin realizing business benefits from RAI. Even though it might feel like a long process, we are still early in the evolution of RAI implementation, so your organization has an opportunity to be a powerful leader in your specific industry or geography.

4. Walk the talk.

Adequately invest in every aspect of your RAI program, including budget, talent, expertise, and other human and nonhuman resources. Ensure that RAI education, awareness, and training programs are sufficiently funded and supported. Engage and include a wide variety of people and roles in your efforts, including at the highest levels of the organization.

EXCERPT 2: Conclusion

We are at a time when AI failures are beginning to multiply and the first AI-related regulations are coming online.

While both developments lend urgency to the efforts to implement responsible AI programs, we have seen that companies leading the way on RAI are not driven primarily by risks, regulations, or other operational concerns.

Rather, our research suggests that Leaders take a strategic view of RAI, emphasizing their organizations’ external stakeholders, broader long-term goals and values, leadership priorities, and social responsibility.

Even though there are unique properties of AI that require an organization to articulate specific cultural attitudes, priorities, and practices, similar strategic considerations might influence how an organization approaches the development or use of blockchain, quantum computing, or any other technology, for that matter.

Given the high stakes surrounding AI, and the clear business benefits stemming from RAI, organizations should consider how to mature their RAI efforts and even seek to become Leaders.

Philip Dawson, AI policy lead at the Schwartz Reisman Institute for Technology and Society, warns of liabilities for corporations that neglect to approach this issue strategically.

“Top management seeking to realize the long-term opportunity of artificial intelligence for their organizations will benefit from a holistic corporate strategy under its direct and regular supervision,” he asserts.

“Failure to do so will result in a patchwork of initiatives and expenditures, longer time to production, damages that could have been prevented, reputational damages, and, ultimately, opportunity costs in an increasingly competitive marketplace that views responsible AI as both a critical enabler and an expression of corporate values.”

On the flip side of those liabilities, of course, are the benefits that we have seen accrue to Leaders that adopt a more strategic view.

Leaders go beyond talking the talk to walking the walk, bridging the gap between aspirations and reality.

They demonstrate that responsible AI actually has less to do with AI than with organizational culture, priorities, and practices — how the organization views itself in relation to internal and external stakeholders, including society as a whole.

In short, RAI is not just about being more responsible for a special technology.

RAI Leaders see RAI as integrally connected to a broader set of corporate objectives, and to being a responsible corporate citizen.

If you want to be an RAI Leader, focus on being a responsible company.

Names mentioned

Philip Dawson, AI policy lead at the Schwartz Reisman Institute for Technology and Society

To cite this report, please use:

Elizabeth M. Renieris, David Kiron, and Steven Mills, “To Be a Responsible AI Leader, Focus on Being Responsible,” MIT Sloan Management Review and Boston Consulting Group, September 2022.